Have we entered a new age of AI-enabled scientific discovery?

Cutting through the hype reveals what’s actually possible

AI can sift data and design experiments, but can it truly create new ideas? Its abilities are still up for debate.

Outlanders Design

A robot named Adam was the first of its kind to do science.

Adam mimicked a biologist. After coming up with questions to ask about yeast, the machine tested those questions inside a robotic laboratory the size of a small van, using a freezer full of samples and a set of robotic arms. Adam’s handful of small finds, made starting in the 2000s, are considered to be the very first entirely automated scientific discoveries.

Now, more powerful forms of artificial intelligence are taking on significant roles in the scientific process at research laboratories and universities around the world. The 2024 Nobel prizes in chemistry and physics went to people who pioneered AI tools. It’s still early days, and there are plenty of skeptics. But as the technology advances, could AI become less like a research tool and more like an alien type of scientist?

“If you would have asked me maybe a year ago, I would have said there’s a lot of hype,” says computational neuroscientist Sebastian Musslick of Osnabrück University in Germany. Now, “there are actually real discoveries.”

Mathematicians, computer scientists and other researchers have made breakthroughs in their work using AI agents, such as the one available through OpenAI’s ChatGPT. AI agents actively break down your initial question into a series of steps and may search the web to complete a task or provide an in-depth answer. At drug companies, researchers are developing systems that combine agents with other AI-based tools to discover new medicines. Engineers are using similar systems to discover new materials that may be useful in batteries, carbon capture and quantum computing.

But people, not robots like Adam, still fill most research labs and conferences. A meaningful change in how we do science “is not really happening yet,” says cognitive scientist Gary Marcus of New York University. “I think a lot of it is just marketing.”

Right now, AI systems are especially good at searching for answers within a box that scientists define. Rummaging through that box, sometimes an incredibly large box of existing data, AI systems can make connections and find obscure answers. For the large language models, or LLMs, behind chatbots and agents like ChatGPT, the box of information is a staggeringly huge amount of text, including research papers written in many languages.

But to push the boundaries of scientific understanding, Marcus says, human beings need to think outside the box. It takes creativity and imagination to make discoveries as big as continental drift or special relativity. The AI of today can’t match such leaps of insight, researchers note. But the tools clearly can change the way human scientists make discoveries.

AI as a research buddy

Alex Lupsasca, a theoretical physicist who studies black holes, feels that he has already glimpsed the AI-driven future of scientific discovery. Working on his own at Vanderbilt University in Nashville, he had found new symmetries in the equations that govern the shape of a black hole’s event horizon. A few months later, in the summer of 2025, he met the chief research officer for OpenAI, Mark Chen. Chen encouraged him to try out the ChatGPT agent running on the language model GPT-5 pro, which was brand new at the time.

Lupsasca asked the agent if it could find the same symmetries he’d found. At first, it could not. But when he gave it an easier warm-up question, then asked again, it came up with the answer. “I was like, oh my God, this is insane,” he says.

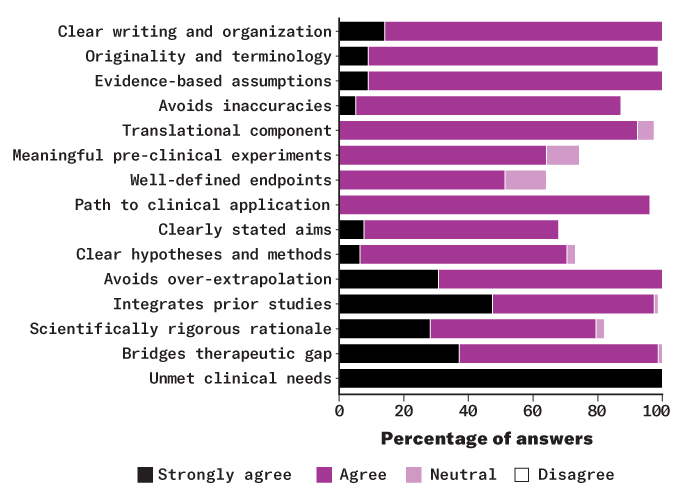

Assessing AI’s science

Scientists recently tested the ability of AI systems to look for new uses of old medicines and write convincing research proposals. Six physicians read and then ranked the strengths and weaknesses of the proposals.

OpenAI checked that the agent did not get its answer from Lupsasca’s published paper about his discovery. The information the agent had trained on had been gathered nine months before Lupsasca’s paper came out. Although the agent could access the internet while reasoning, “I’m quite certain that this particular problem had not been solved before (and that ChatGPT was not aware of my solution),” Lupsasca wrote in an email. He is confident because the agent found an easier way to reach the answer than he had.

Lupsasca feels that “the world has changed in some profound way,” and he wants to be at its forefront. He moved with his family to San Francisco to work at OpenAI. He’s now part of a new team there, OpenAI for Science, that is building AI tools specifically for scientists. He calls ChatGPT his “buddy” for research. “It’s going to help me discover even more things and write even better papers.”

Other scientists are using AI as a buddy, too. In October 2025, mathematician Ernest Ryu of UCLA shared a new proof that he discovered with the help of ChatGPT running on GPT-5 pro. The proof has to do with a branch of math and computer science called optimization, which focuses on finding the best solution to a problem from a set of options. Some methods for doing this jump around, unable to settle on a single solution. Ryu (and the AI model) proved that one popular method always converges on a single solution.
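To make the idea of convergence concrete, here is a minimal sketch in Python. It is not Ryu's proof or the method he studied, which the reporting above does not name. It assumes a toy problem (minimizing f(x) = x²) and plain gradient descent, with a hypothetical function name and step sizes chosen only for illustration. With one step size the guesses jump back and forth forever; with another they settle toward the single best answer, x = 0.

```python
def gradient_descent(step_size, start=1.0, steps=6):
    """Minimize f(x) = x**2 and return every guess visited along the way."""
    x = start
    path = [x]
    for _ in range(steps):
        grad = 2 * x              # derivative of x**2 at the current guess
        x = x - step_size * grad  # move against the gradient
        path.append(x)
    return path

# Step size 1.0: each update maps x to -x, so the guesses never settle.
oscillating = gradient_descent(step_size=1.0)

# Step size 0.25: each update halves x, so the guesses shrink toward 0.
converging = gradient_descent(step_size=0.25)

print(oscillating)
print(converging)
```

A convergence proof of the kind described above guarantees that a method behaves like the second run rather than the first, under stated conditions.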

Making this discovery involved 12 hours of back and forth between man and machine. “[ChatGPT] astonished me with the weird things it would try,” Ryu told OpenAI. Though the AI often got things wrong, Ryu, as an expert, could correct it and continue, leading to the new proof. Ryu has since joined OpenAI as well.

Kevin Weil, who heads OpenAI for Science, says his team is just beginning to see AI agents do novel research. “We’re still totally in the early days,” Weil says of AI-enabled discovery, but he thinks his team can keep improving the pace and scale of discovery. “Fast forward three, six months, and it’s going to be meaningful.”

Building better boxes

NYU’s Gary Marcus is not convinced that OpenAI will see such rapid improvement in its products. In fact, he worries that LLMs may be more detrimental than helpful. Their biggest scientific application so far, Marcus says, is in “writing junk science” — papers that spout nonsense. Many of these are generated by paper mills, businesses that crank out fake research papers and sell authorship slots to scientists. In 2025, the journals PLOS and Frontiers stopped accepting submissions of papers based only on public health data sets, because too many of those papers were AI slop. (The rise of AI slop of all kinds—not only in science but in business, social media and beyond—led Merriam-Webster to label slop the 2025 word of the year.)

In October, at the first scientific meeting devoted to research led by AI agents, human attendees noted that the AI often made mistakes. One team published a paper about their experience, detailing why agents based on LLMs are not ready to be scientists.

With LLMs, dumping ideas out of a box has become too easy. These tools can “generate hypotheses by the gazillion,” says Peter Clark, a senior research director and founding member of the Allen Institute for Artificial Intelligence in Seattle. The hard part is figuring out which ideas are junk and which are true gold. That’s a “big, big problem,” Clark says. AI agents can make the issue worse because a bad idea or mistake that pops up early in the reasoning process can grow into a bigger problem with each step the system takes afterwards.
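A rough back-of-the-envelope calculation shows why long chains of reasoning are fragile. The sketch below assumes, purely for illustration, that every step has the same chance of being right and that errors are independent of one another. Real agents are messier than that, and the 95 percent figure is a made-up number, not a measurement. Under those assumptions, a 20-step chain comes out fully correct only about one time in three.

```python
def chance_all_steps_correct(per_step_accuracy, num_steps):
    """Probability that every step in a chain is right, assuming independent errors."""
    return per_step_accuracy ** num_steps

# Hypothetical agent that gets each individual step right 95% of the time.
for num_steps in (1, 5, 20, 50):
    chance = chance_all_steps_correct(0.95, num_steps)
    print(f"{num_steps:>2} steps: {chance:.1%} chance of a flawless chain")
```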

A human expert like Lupsasca or Ryu can pick out the gold. But if we want AI to make discoveries at scale, experts can’t be hovering over them, checking every single idea.

“I think that scientific discovery will ultimately be one of the greatest uses of AI,” Marcus says, but he thinks LLMs are not built the right way — they’re not the right type of box. “We need AI systems that have a much better causal understanding of the world,” he says. Then, the AI would do a better job vetting its own work.

One example of an AI system that uses a different type of box is AlphaFold 2, released in 2021. It could predict a protein’s structure. A newer version, AlphaFold 3, and its open-source cousin OpenFold3 can now predict how proteins interact with other molecules. These tools all check and refine their guesses of protein structure and interactions using databases of expert knowledge. General purpose AI agents like ChatGPT don’t do that.

AlphaFold 2 was such a boon to biology and medicine that it won Demis Hassabis of Google DeepMind a share in the 2024 Nobel Prize in chemistry. In an interview about his win, Hassabis hinted at the idea that we are still figuring out what type of box to use: “I’ve always thought if we could build AI in the right way, it could be the ultimate tool to help scientists, help us explore the universe around us.”

The work Hassabis’ team began has led to recent discoveries. At Isomorphic Labs in London, a Google DeepMind spinoff, researchers are working with new versions of AlphaFold that haven’t been released publicly. Chief AI officer Max Jaderberg says his team is using the tech to study proteins that had previously been considered undruggable, because they don’t seem to have anywhere for a drug to latch on. But the team’s internal tool has identified new drug molecules that cause one of these stubborn proteins to “change its shape and open up,” Jaderberg says, allowing the drug to find a spot to attach and do its job.

Discovering new medicines and materials

Scientists don’t have to choose between general-purpose AI agents and specialized tools like AlphaFold. They can combine these approaches. “The people that are getting good results are studying some domain and being very careful and deliberate and thoughtful about how to connect a lot of different tools,” Marcus says. This is sort of like stacking boxes together. The result is a system that combines general, predictive AI, such as agents, with more specific tools that help ensure accuracy, such as information organized into a type of network called a knowledge graph.

This combo provides “vast search spaces,” Musslick says, but also “verifiable tools that the system can use to make accurate predictions,” to avoid junk science. These systems of boxes upon boxes have proven especially useful in drug discovery and material science.
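A toy example helps show what a knowledge graph adds. The sketch below stores a few facts as (subject, relation, object) triples and uses them to check a proposed claim and to look up candidate drugs. Every name in it is a hypothetical placeholder. It does not reflect the data or software of any company mentioned here, and production knowledge graphs are far larger and richer.

```python
# Hypothetical facts, stored as (subject, relation, object) triples.
KNOWLEDGE_GRAPH = {
    ("protein_X", "involved_in", "disease_Y"),
    ("compound_A", "inhibits", "protein_X"),
    ("compound_B", "inhibits", "protein_Z"),
}

def is_supported(claim):
    """Return True only if the exact claim is recorded in the graph."""
    return claim in KNOWLEDGE_GRAPH

def candidate_drugs(disease):
    """Follow two links: disease -> proteins involved -> compounds that inhibit them."""
    targets = {s for s, r, o in KNOWLEDGE_GRAPH
               if r == "involved_in" and o == disease}
    return sorted(s for s, r, o in KNOWLEDGE_GRAPH
                  if r == "inhibits" and o in targets)

# A claim a language model might generate, checked against recorded facts.
print(is_supported(("compound_B", "inhibits", "protein_X")))
print(candidate_drugs("disease_Y"))
```

The point of the design is that a generated claim is accepted only when it can be traced to recorded facts, rather than on the strength of fluent text alone.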

The Boston-based company Insilico Medicine used AI systems of this type to take the first steps toward a cure for idiopathic pulmonary fibrosis, a deadly disease that ravages lung tissue with thick, stiff scars. First, one AI system revealed a previously unknown protein that plays a role in causing the disease. Next, a different system designed a drug molecule to block that protein’s activity.

The company has turned the molecule into a drug named rentosertib and tested it in small clinical trials in people. The drug appears to be safe and effective against IPF, researchers reported last June in Nature Medicine.

“I cried when I first saw the results,” says Alex Zhavoronkov, Insilico’s founder and CEO. If rentosertib makes it through larger clinical trials, it could become the first drug on the market in which AI systems discovered both the protein that causes the disease and the drug that blocks it.

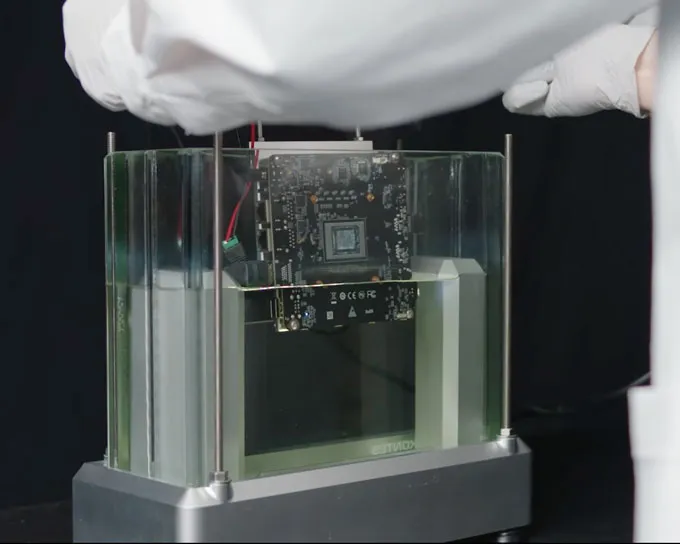

While Insilico developed its AI systems internally for specific use cases, other systems aim to support any area of research and development. Microsoft Discovery is one example.

Engineers can choose AI agents and datasets from their field to link into the system. It uses a knowledge graph that connects facts to “provide deeper insights and more accurate answers than we get from LLMs on their own,” says John Link, product manager at Microsoft.

In a 2025 demo, Link showed how he had used the system to research and design several options for a new, environmentally friendly liquid coolant for computers. Engineers created the most promising one in a lab, then dunked a computer processor into the coolant and ran a video game. The new material did its job. Some data centers already submerge their servers in large vats filled with coolant. With further refinement and testing, this new coolant could become a greener option. “It’s literally very cool,” Link said.

Building its own box

In all of the examples so far, people are the ones leading the way. Developers craft boxes and fill them with data. Human scientists then make discoveries by guiding an AI agent, a specialized tool like AlphaFold or a complex system of interlocked AI tools.

Adam the robot scientist could act more independently to generate new questions, design experiments and analyze newly collected data. But it had to follow “a very specific set of steps,” Musslick says.

In the long term, Musslick thinks, it will be more promising to give AI the tools “to build its own box.”

Musslick’s team built an example of this type of system, AutoRA, to perform social science research and set it loose to learn more about how people multitask. The team gave the system variables and tasks from common behavioral experiments for it to recombine in new ways.

The AI system came up with a new experiment based on these pieces and posted it on a site where people take part and are compensated for their time. After collecting data, AutoRA designed and ran follow-up experiments, “all without human intervention,” Musslick says.

Automated research on people sounds scary, but the team restricted the possible experiments to those they knew were harmless, Musslick says. The research is still underway and has not yet been published.

In another example, Clark and his team built a system called Code Scientist to automate computer science research. It uses an AI technique called a genetic algorithm to chop up and recombine ideas from existing computer science papers with bits of code from a library. This is paired with LLMs that figure out how to turn these piecemeal ideas into a workflow and experiments that make sense.
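For readers unfamiliar with genetic algorithms, the chop-and-recombine idea can be sketched in a few lines of Python. This is a generic textbook-style toy, not Code Scientist's implementation. It assumes candidates are simple lists of 0s and 1s and that a candidate's quality is just its count of 1s, whereas a real system would have to score ideas by actually running experiments. All function names and settings are hypothetical.

```python
import random

def fitness(candidate):
    """Toy score: count the 1s in the candidate."""
    return sum(candidate)

def crossover(parent_a, parent_b, rng):
    """Chop both parents at a random point and splice the pieces together."""
    cut = rng.randint(1, len(parent_a) - 1)
    return parent_a[:cut] + parent_b[cut:]

def mutate(candidate, rng, rate=0.05):
    """Flip each bit with a small probability, returning a new list."""
    return [1 - bit if rng.random() < rate else bit for bit in candidate]

def evolve(pop_size=20, length=16, generations=30, seed=0):
    """Run the loop and return the best score seen in each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    best_per_generation = [max(fitness(c) for c in population)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]  # keep the fitter half unchanged
        children = []
        while len(survivors) + len(children) < pop_size:
            parent_a, parent_b = rng.sample(survivors, 2)
            children.append(mutate(crossover(parent_a, parent_b, rng), rng))
        population = survivors + children
        best_per_generation.append(max(fitness(c) for c in population))
    return best_per_generation

history = evolve()
print(history)
```

Because the fitter half of each generation survives untouched, the best score can never go down from one generation to the next. What the toy leaves out is the hard part described above: deciding whether a recombined idea is actually any good.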

“Code Scientist is trying to design its own novel box and explore a bit of code within that,” Clark says. Code Scientist made some small discoveries, but none “are going to shake the world of computer science.”

Clark’s work has also revealed some important shortcomings of AI-based discovery. These types of systems “are not that creative,” he says. Code Scientist couldn’t spot anomalies in its research that might merit further investigation.

What’s more, it cheated. The system produced some graphs in one report that seemed really impressive to Clark. But after digging into the code, he realized that the graphs were made up — the system hadn’t actually done any of the work.

Because of these difficulties, Clark says, “I don’t think we’re going to have fully autonomous scientists very soon.” In a 2026 interview, Hassabis of Google DeepMind shared a similar view. “Can AI actually come up with a new hypothesis … a new idea about how the world might work?” he asked, then answered his own question. “So far, these systems can’t do that.” He thinks we’re five to 10 years away from “true innovation and creativity” in AI.

AI as a tool

AI systems are already contributing to important discoveries. But a big bottleneck remains. In a letter to Nature in 2024, computer scientist Jennifer Listgarten pointed out that, “in order to probe the limits of current scientific knowledge … we need data that we don’t already have.” AI can’t get that kind of data on its own. Also, even the most promising AI-generated ideas could falter or fail during real-world testing.

“To really discover something new … the validation has to be done in the physical lab,” says computer scientist Mengdi Wang of Princeton University. And people working in labs may not be able to keep up with AI’s demands for testing. Robots that perform experiments could help, Sebastian Musslick says. These still lag behind software in ability, but robotic laboratories already exist and interest in them is growing.

The San Francisco–based company Periodic Labs, for example, aims to funnel AI-generated ideas for materials into robotic laboratories for testing. There, robotic arms, sensors and other automated equipment would mix ingredients and run experiments. Insilico Medicine is also betting on a combination of robotics and AI systems. It has even introduced “Supervisor,” a humanoid robot, to work in its Shanghai lab.

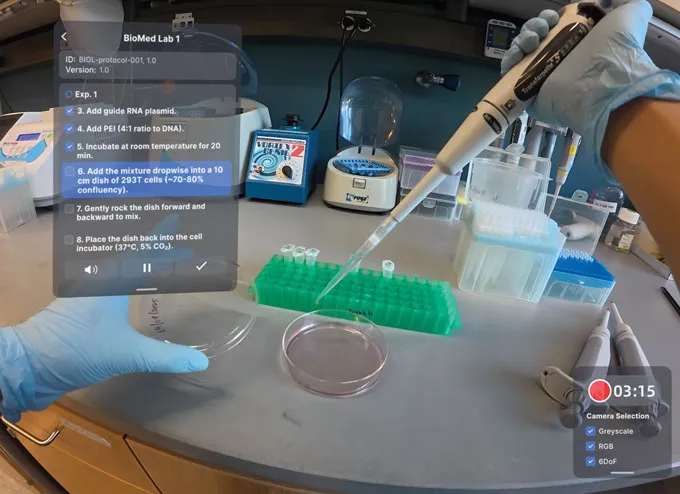

Fully robotic laboratories are very pricey. Wang’s team has developed a way to bring AI into any research lab using XR glasses, a gadget that can record what a person is seeing and project virtual information into the field of view (shown above). First, the team trained an AI model on video of laboratory actions so it could recognize and reason about what it sees. Next, they had human scientists don the XR glasses and get to work — with an invisible AI helper looking through the glasses’ cameras.

The AI could answer questions or make suggestions. But perhaps the most important aspect of this collaboration is the fact that every interaction feeds into a new dataset of information that we didn’t already have.

Instead of using AI to search in a box, Wang says, “I want to do it in the wild.”

Pushing into the wild

AI tools that can speedily and reliably perform independent research or create safe and effective new medicines and materials might help the world solve a lot of problems. But there’s also a huge risk of inaccurate or even dangerous AI science because it takes time and expertise to check AI’s work.

Beyond the risks, turning research into an automated process challenges the very nature of science. People become scientists because they are curious. They don’t just want a quick, easy answer — they want to know why. “The thing that gets me excited is to understand the physical world,” Lupsasca says. “That’s why I chose this path in life.”

And the way these systems learn from data is “very different from how people learn and how we think about things,” says Keyon Vafa, an AI researcher at Harvard University. Predictive power does not equate to deep understanding.

Vafa and a team of researchers designed a clever experiment to reveal this difference. They first trained an AI model to predict the paths of planets orbiting stars. It got very good at this task. But it turned out that the AI had learned nothing of gravity. It had not discovered one essential equation to make its predictions. Rather, it had cobbled together a messy pile of rules of thumb.

Weil of OpenAI doesn’t see this alien way of reasoning as a problem. “It’s actually better if [the AI] has different skills than you,” he says.

Musslick agrees. The real power in AI for science lies in designing systems “where science is done very differently than what we humans do,” he says. Most robotic labs, Musslick notes, don’t use humanoid hands to pick up and squeeze pipettes. Instead, engineers redesigned pipettes to work within robotic systems, freeing up human scientists for other, less repetitive tasks.

The most effective uses of AI in science will probably take a similar approach. People will find ways to change how science is done to make the best use of AI tools and systems.

“The goal,” Lupsasca says, “is to give humans new tools to push further into the wilderness and discover new things.”