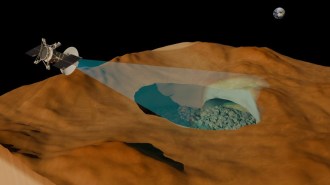

NASA’s DART spacecraft changed an asteroid’s orbit around the sun

Studying this asteroid could help protect Earth from future asteroid strikes

NASA’s DART spacecraft took this picture of asteroid Didymos (bottom left) and its smaller companion Dimorphos about 2.5 minutes before deliberately crashing into Dimorphos. Scientists have now shown that the impact changed the asteroid duo’s orbit around the sun.

NASA/Johns Hopkins APL