The Digital Camera Revolution

Instead of imitating film counterparts, new technologies work with light in creative ways

Take a grainy, blurred image of a formless face or an illegible license plate, and with a few keystrokes the picture sharpens and the killer is caught — if you’re a crime-scene tech on TV. From Harrison Ford in Blade Runner to CSI, Criminal Minds and NCIS, the zoom-and-enhance maneuver has become such a staple of Hollywood dramas that it’s mocked with video montages on YouTube.

In real life, of course, no amount of high-techery can disclose data not captured by a camera in the first place. But scientific advances are now gaining ground on fictional forensics. The field known as computational photography has exploded in the last decade, yielding powerful new cameras capable of tricks once seen only in the labs of make-believe.

For a long time camera makers and operators focused mostly on getting more pixels. But the “pixel war” is over, says Marc Levoy, a pioneer in computational photography at Stanford University. Today’s manufacturers are looking beyond good resolution.

Low-cost computing and new algorithms, combined with fancy optics and sensors, are drastically changing how cameras re-create the world. Scientists have recently devised a camera that could spot a culprit by peeking around corners; another might divulge the identity of an attacker by collecting information reflected in a victim’s eyes. Other developments, some of which are making their way into commercially available cameras and smartphones, won’t necessarily help snag a bad guy but can turn anyone with a camera into a photographer extraordinaire.

Researchers are, for example, finding ways to clean up pictures so that smudges or window screens disappear. The addition of unconventional lenses means pictures can be refocused long after a shot is taken. And the “Frankencamera,” recently developed at Stanford, is designed to be programmable, so that users can play around with the hardware and the computer code behind it. Such work may lead to previously impossible photos, researchers say — images that have yet to be imagined.

“The possibilities are not readily apparent at first,” write MIT’s Ramesh Raskar and Jack Tumblin of Northwestern University in Evanston, Ill., in a comprehensive textbook on computational photography set to be published this year. “Like a long-caged animal in a zoo destroyed by a hurricane, those of us who grew up with film photography are still standing here in shocked astonishment at the changes.”

Caught on camera

Until a few years ago, most digital cameras were basically film cameras, just with an electronic sensor doing the job of the film. These “filmlike” cameras use a lens to capture light from a 3-D scene, faithfully re-creating it as a 2-D image.

But in a digital camera, there’s no need for that re-creation to be faithful. Digital cameras have a tiny computer that processes incoming optical information before it is stored on the memory card. That computer can transform the scene, measuring, manipulating and combining visual signals in fundamentally new ways. With the help of tricked-out optics — such as multiple lenses in different arrangements — photographers can not only perfect the traditional recording of their lives, but they can also manipulate those keepsake shots to get something strange and different.

Advances in math and optics are now developing hand in hand, says Shree Nayar, head of the Computer Vision Laboratory at Columbia University. “When you worry about both of them at the same time, you can do new and interesting things.”

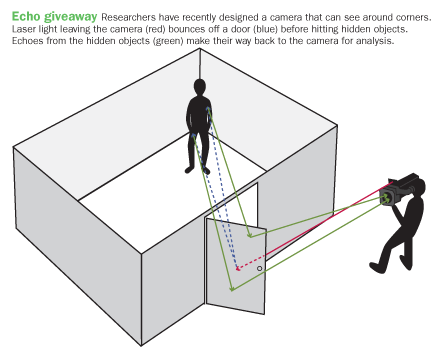

One new and interesting thing is the ability to look around corners, beyond the line of sight. Developed in 2009 by Raskar, MIT graduate student Ahmed Kirmani and colleagues at MIT and the University of California, Santa Cruz, a new camera with a titanium-sapphire laser for a flash shoots brilliant light in pulses lasting less than a trillionth of a second. After the light ricochets off objects, including those not visible to the photographer, the camera collects the returning “echoes.” The camera then analyzes the photons that return and can estimate shapes blocked by a wall or other obstruction.
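The geometry behind the around-the-corner camera can be illustrated with a toy model. This is not the MIT team's code. It assumes a flat 2-D scene, a single hidden point, perfectly timed echoes, and that the known legs of the trip (camera to wall and back) have already been subtracted. Each echo's travel time fixes the total distance from the laser spot on the wall to the hidden point and back to an observed wall point, which confines the hidden point to an ellipse. The sketch lets every echo vote for the grid positions whose path length matches, and the spot lying on the most ellipses wins. The function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def hidden_path(laser_spot, point, wall_point):
    """Distance from the lit spot on the wall to a point, then back to a wall point."""
    return math.dist(laser_spot, point) + math.dist(point, wall_point)

def simulate_echoes(laser_spot, hidden, wall_points):
    """Time (s) spent on the hidden leg of the trip, for each observed wall point."""
    return [hidden_path(laser_spot, hidden, w) / C for w in wall_points]

def backproject(laser_spot, wall_points, times, grid, tol=0.005):
    """Return the grid point consistent with the most echoes.

    Each echo time t places the hidden point on an ellipse whose foci are the
    laser spot and the wall point, with total path length C * t. A candidate
    earns one vote per echo whose path length it matches to within `tol` metres.
    """
    best, best_votes = None, -1
    for cand in grid:
        votes = sum(
            1
            for w, t in zip(wall_points, times)
            if abs(hidden_path(laser_spot, cand, w) - C * t) < tol
        )
        if votes > best_votes:
            best, best_votes = cand, votes
    return best

# Toy scene: the wall runs along y = 0; the hidden object sits somewhere at y > 0.
laser_spot = (0.5, 0.0)
wall_points = [(k / 19, 0.0) for k in range(20)]
hidden = (0.3, 0.5)
times = simulate_echoes(laser_spot, hidden, wall_points)
grid = [(i / 100, j / 100) for i in range(101) for j in range(1, 101)]
estimate = backproject(laser_spot, wall_points, times, grid)
```

The 5-millimeter tolerance corresponds to roughly 17 picoseconds of timing error, which hints at why the flash pulses have to be so short.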

The technology might lead to devices that allow drivers to see around blind corners or surgeons to get a better view in tight places. It could also help first responders plan rescues in dangerous situations and crime fighters spot hidden foes.

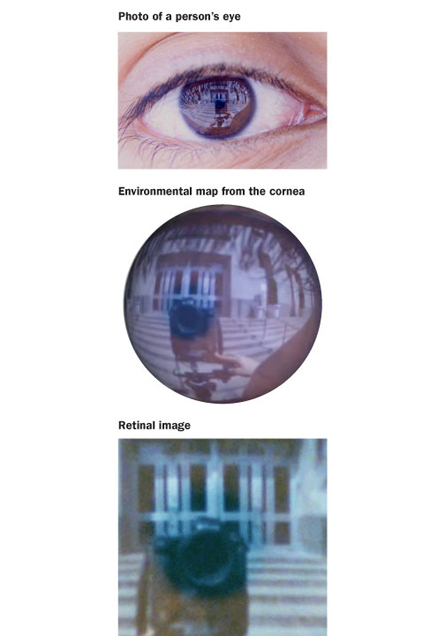

Another technology that might aid real-world sleuths is the “world in an eye” imaging system, which can re-create a person’s surroundings from information reflected in a single eye. Using a geometric model of the eye’s cornea, Nayar and colleague Ko Nishino, now at Drexel University in Philadelphia, created a camera that detects where the cornea meets the white of the eye. Computations then turn the cornea’s fishbowl-like reflection into a map of the surrounding environment, as well as of the image that falls on the person’s retina.

Using the tilt of the camera and the position of the person’s eye, whatever the person is looking at can be pinpointed, making the technology useful for eye-tracking studies in which researchers want to know what a participant is paying attention to. The technology (which is available as a software package from the Computer Vision Laboratory) is also helping people look into the past. One photographer has been examining reflections in the eyes of people in old photographs, exposing a blurred scene reflected in the eye of an old man in an 1840 portrait.
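The core geometric step of treating the cornea as a mirror can be sketched in a few lines. The article does not give the researchers' model, so this sketch assumes the simplest stand-in: a spherical cornea with a radius of curvature of about 7.8 millimeters (a typical textbook value), a camera at the origin, and no refraction. A camera ray that strikes the cornea is mirrored about the surface normal; the scene point imaged at that pixel lies along the reflected direction. All names are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def reflect_off_cornea(ray_dir, center, radius):
    """Follow a camera ray from the origin to a spherical cornea and mirror it.

    Returns (hit_point, reflected_dir), or None if the ray misses the sphere.
    The scene point imaged at this pixel lies along reflected_dir from hit_point.
    """
    d = normalize(ray_dir)
    # Nearest t > 0 with |t*d - center| = radius (d is a unit vector).
    b = dot(d, center)
    disc = b * b - (dot(center, center) - radius * radius)
    if disc < 0:
        return None
    t = b - math.sqrt(disc)
    if t <= 0:
        return None
    hit = scale(d, t)
    normal = scale(sub(hit, center), 1.0 / radius)           # outward unit normal
    reflected = sub(d, scale(normal, 2.0 * dot(d, normal)))  # mirror reflection
    return hit, reflected

# Eye 0.5 m in front of the camera; 7.8 mm is an assumed corneal radius.
CORNEA_RADIUS = 0.0078
hit, looks_toward = reflect_off_cornea((0.01, 0.0, 1.0), (0.0, 0.0, 0.5), CORNEA_RADIUS)
```

A ray aimed straight at the center of the eye bounces straight back, while a ray aimed partway toward the edge comes back pointing mostly sideways, which is how a small curved cornea can reflect a wide view of the room.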

Picture perfect

If just capturing precious moments is more your style, many researchers, Nayar included, are exploring ways to enhance pictures taken for the more traditional purpose of archiving one’s life. There are methods for getting around that annoying shutter delay that makes you miss your shot, for deblurring moving objects and even for erasing raindrops that obscure what a picture was meant to capture.

Such tricks are gradually making their way into commercial cameras or are being made available as downloadable apps for smartphones. One new camera, dubbed Lytro and developed by Ren Ng for his dissertation at Stanford, can readjust the focus after a shot is taken, so a picture can clearly render what’s nearby or far away.

Lytro’s trick is that it employs “radically different optics,” says Stanford’s Levoy, who worked on those optics with Ng.

In between the main lens and the sensor, Lytro has an array of tiny lenses called lenslets that capture an entire light field: the intensity, color and direction of every ray of incoming light (in this case, 11 million rays). Whereas a traditional camera takes some of the light leaving any one point in a scene and focuses it back together on a single pixel on a sensor, the lenslets spread that light across separate pixels according to the direction it arrived from. Because the image records where each ray was heading, it can be refocused later.
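The article does not describe Lytro's own processing, but a common textbook way to refocus a light field is "shift-and-add." The pixels sitting at the same position under every lenslet together form one view through one part of the main lens; sliding each view by an amount proportional to its offset and then averaging brings a chosen depth into focus. The 1-D toy below assumes integer shifts and a scene with a single bright point; `make_light_field` and `refocus` are hypothetical names.

```python
def make_light_field(width, n_views, x0, disparity):
    """Toy 1-D light field holding a single bright point.

    Row u is the view through one part of the main lens (the same pixel
    position under every lenslet). A point off the focal plane appears
    displaced by `disparity` pixels per step in u. The caller keeps it in range.
    """
    center = n_views // 2
    views = []
    for u in range(n_views):
        row = [0.0] * width
        row[x0 + disparity * (u - center)] = 1.0
        views.append(row)
    return views

def refocus(views, shift):
    """Shift-and-add: slide view u by shift * (u - center) pixels, then average."""
    n_views, width = len(views), len(views[0])
    center = n_views // 2
    image = [0.0] * width
    for u, row in enumerate(views):
        offset = shift * (u - center)
        for x in range(width):
            src = x + offset
            if 0 <= src < width:
                image[x] += row[src] / n_views
    return image

views = make_light_field(width=32, n_views=5, x0=16, disparity=2)
in_focus = refocus(views, shift=2)   # the point collapses onto pixel 16
defocused = refocus(views, shift=0)  # the point is smeared over five pixels
```

Picking a different `shift` after the fact moves the plane of focus, which is the sense in which such a picture can be refocused long after it was taken.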

Lytro became commercially available last year, and another light-field camera may soon be available in smartphones. Last February Pelican Imaging announced a prototype for mobile devices that has an array of 25 lenslets. Like Lytro, Pelican promises images that can be refocused. But unlike the boxy Lytro, this version would fit in the slender confines of a cell phone.

Arrays of full cameras (not just the lenses) also allow for interesting manipulations. When packed close together, the cameras approximate a giant lens, which means much more light is available to work with. Photos can thus be created with a shallow depth of field so that the photo’s subject is nice and crisp and the background is blurred, freeing the image from distracting clutter. A giant lens also means that a photographer can capture enough light from different angles to blur out foreground objects like foliage or venetian blinds, in effect looking around them. One of Stanford’s large camera arrays has 128 video cameras set up 2 inches apart. The arrangement is like having a camera with a 3-foot-wide aperture.
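Looking past foliage with a camera array can be illustrated with a toy version of synthetic-aperture imaging. This is an assumption-laden sketch, not Stanford's software: the views are taken as already registered so that the background lines up in every camera, while a dark leaf closer to the array shifts by a few pixels from one camera to the next. Averaging the nine views leaves each background pixel blocked in at most one of them, so the leaf fades to a faint smudge. `render_camera` and `synthetic_aperture` are hypothetical names.

```python
def render_camera(background, leaf_start, leaf_width, parallax):
    """One camera's view: the registered background, partly hidden by a dark leaf.

    The leaf is nearer the array than the background, so it lands `parallax`
    pixels away from where it appears in the reference camera.
    """
    view = list(background)
    for x in range(leaf_start + parallax, leaf_start + parallax + leaf_width):
        if 0 <= x < len(view):
            view[x] = 0.0  # the leaf blocks the background here
    return view

def synthetic_aperture(views):
    """Average views that are registered on the background plane."""
    n = len(views)
    return [sum(column) / n for column in zip(*views)]

background = [1.0] * 30  # a uniformly bright wall behind the leaf
views = [render_camera(background, 13, 2, 3 * k) for k in range(-4, 5)]
single = views[4]        # the middle camera on its own
combined = synthetic_aperture(views)
```

In the middle camera alone, the leaf completely hides pixels 13 and 14; in the averaged image, no pixel drops below eight-ninths of the wall's brightness.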

Tweaks to a camera’s back end are also making it a better documentary tool. Image sensors have become much better at capturing light, so cameras can take many more pictures per second. A high frame rate combined with complex math means the camera can snap many versions of the same picture at different exposures and then merge them into one image that holds detail in both the shadows and the highlights, a trick known as high dynamic range imaging. Alternatively, the camera can simply select the best of the individual frames.
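One common way to merge exposures, assumed here rather than taken from any particular camera, divides each pixel value by its exposure time to estimate the scene's brightness, then averages those estimates while trusting mid-tones most and ignoring saturated pixels. The sketch assumes a linear sensor, perfectly aligned frames and values scaled to the range 0 to 1; `merge_exposures` and the hat-shaped weight are illustrative choices.

```python
def capture(radiance, exposure_time):
    """Simulate a linear sensor that saturates at 1.0."""
    return [min(1.0, r * exposure_time) for r in radiance]

def hat_weight(value):
    """Trust mid-tones most; near-black and saturated pixels get little or no weight."""
    return max(0.0, 1.0 - abs(2.0 * value - 1.0))

def merge_exposures(frames, exposure_times):
    """Weighted per-pixel average of the radiance estimates value / exposure_time."""
    shortest = exposure_times.index(min(exposure_times))
    merged = []
    for values in zip(*frames):
        num = den = 0.0
        for v, t in zip(values, exposure_times):
            w = hat_weight(v)
            num += w * v / t
            den += w
        # If no frame was usable, fall back on the shortest exposure.
        merged.append(num / den if den > 0 else values[shortest] / exposure_times[shortest])
    return merged

scene = [0.05, 0.8, 6.0, 40.0]  # relative brightness, from deep shadow to bright sky
times = [0.02, 0.2, 2.0]
frames = [capture(scene, t) for t in times]
hdr = merge_exposures(frames, times)
```

The middle exposure alone records the two brightest regions as the same pure white; the merged result keeps them distinct.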

New cameras can also deal with shutter lag. When set in a particular mode, the camera begins taking a burst of photos and temporarily saves them. The photographer gets the typical shot (the one taken when the shutter is clicked) as well as a series of shots from before and after.
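The pre-shutter burst behaves like a ring buffer: while the mode is on, the camera keeps only the most recent few frames, discarding the oldest, and when the shutter is pressed it joins those frames to the click frame and a few that follow. A minimal sketch; the class name, method names and frame counts are hypothetical.

```python
from collections import deque

class BurstCapture:
    """Keep a rolling window of recent frames so a shot can include moments before the click."""

    def __init__(self, before, after):
        self.recent = deque(maxlen=before)  # oldest frames fall off automatically
        self.after = after
        self.burst = None                   # the finished (or in-progress) sequence
        self.remaining = 0                  # frames still to collect after the click

    def on_frame(self, frame):
        """Called for every frame the sensor streams while the mode is active."""
        if self.remaining > 0:
            self.burst.append(frame)
            self.remaining -= 1
        else:
            self.recent.append(frame)

    def press_shutter(self, frame):
        """The frame at the click is the typical shot; earlier ones come from the buffer."""
        if self.remaining > 0:
            return  # ignore presses while a burst is still being collected
        self.burst = list(self.recent) + [frame]
        self.remaining = self.after

    def done(self):
        return self.burst is not None and self.remaining == 0

camera = BurstCapture(before=3, after=2)
for frame_number in range(100):
    if frame_number == 50:  # the moment the photographer clicks
        camera.press_shutter(frame_number)
    else:
        camera.on_frame(frame_number)
```

Here frames are just numbers standing in for images; the click at frame 50 yields frames 47 through 52.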

“It’s something I’ve always wanted in a camera — for it to start taking pictures before something interesting happens,” says Tumblin. “So when your daughter is blowing out her birthday candles, you have a sequence of shots, one right after the other.”

Made to order

It’s all well and good that camera manufacturers are getting around to incorporating such advances, Levoy says. But he has higher hopes — that consumer cameras will one day be programmable, giving users the power to get exactly what they want out of the device.

“I came out of computer graphics where anyone can play around,” Levoy says. “The camera industry is not like that. It’s very secretive.”

While every digital camera has a computer inside, it’s usually locked in a black box. You can’t get in there and program it. Several hacking tools exist for liberating the code of particular cameras, but Levoy and his colleagues wanted to play around with settings without resorting to such measures. So they built their own programmable camera, the Frankencamera.

Dealing with commercially available cameras “was just a painful experience,” says Andrew Adams, who worked with Levoy and is now at MIT. “So after getting sufficiently frustrated at the programming that exists, we decided to make our own camera.”

The Frankencamera started out as a clunky black thing built with off-the-shelf components (hence the “Franken”). But in the spirit of computer science, it runs Linux-based software and is easy to program. With a little effort, the camera can be made to, say, use gyroscope data to determine whether it is moving when a picture is taken. If so, it can pick the sharpest photo from a series of shots, an application Adams calls “lucky imaging.”
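The article does not spell out how the lucky-imaging application judges sharpness. A minimal sketch, assuming gyroscope readings given as angular rates in radians per second, an arbitrary shake threshold of 0.05 rad/s, and gradient energy (the sum of squared differences between neighboring pixels) as a stand-in sharpness score; all names are hypothetical.

```python
import math

SHAKE_THRESHOLD = 0.05  # rad/s; an arbitrary value assumed for this sketch

def is_shaking(gyro_samples):
    """gyro_samples: (wx, wy, wz) angular rates recorded around the exposure."""
    return any(math.hypot(wx, wy, wz) > SHAKE_THRESHOLD for wx, wy, wz in gyro_samples)

def sharpness(image):
    """Gradient energy: blur smooths edges, so sharper frames tend to score higher."""
    h, w = len(image), len(image[0])
    score = 0.0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                score += (image[y][x + 1] - image[y][x]) ** 2
            if y + 1 < h:
                score += (image[y + 1][x] - image[y][x]) ** 2
    return score

def choose_frame(frames, gyro_samples):
    """frames[0] is the shot taken at the click; the rest are extra burst frames."""
    if not is_shaking(gyro_samples):
        return frames[0]
    return max(frames, key=sharpness)

crisp = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]      # a hard edge
smeared = [[0.0, 0.25, 0.75, 1.0] for _ in range(4)]  # the same edge, motion-blurred
```

When the gyroscope reports a steady camera, the ordinary shot is kept; only when it reports shake does the camera spend time scoring every frame in the series.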

Nokia was interested enough in the Frankencamera to help researchers make their computer code compatible with the Nokia N900. The researchers began using the N900 in the classroom and have been shipping it around the world to other academics in the field of computational photography.

“The first assignment was to replace the autofocus algorithm,” says Adams. “It was so cool; we gave them a week and they came up with better things than Nokia.”

One student took several pictures over circular objects from above and programmed the camera to average the pictures together, yielding an image that normally could be captured only with a much larger lens, says Adams. Several other manipulations have been explored, such as panoramic stitching, high dynamic range imaging and flash/no-flash imaging, which combines shots taken with and without a flash to create a photograph that displays the best of both. The Frankencamera team released its code in 2010, so anyone can add these capabilities to the Nokia N900.

The camera has also been set up for “rephotography,” the retaking of a previously taken photo, historic or otherwise. The camera looks for distinguishing features in a scene, such as corners, and directs the photographer with arrows to align the camera precisely, creating a second version of the original picture but in a new season or new time in history.

With all the new souped-up cameras rolling out, the dangers of shaky hands or poor lighting are rapidly becoming concerns of the past. And the ability to make a picture bizarre, or shocking, is now available to anyone with the right smartphone and app. But once Frankencameras and similar build-your-own devices are in the hands of enough people, the creative possibilities will balloon. You name it, programmers will find a way to do it.

“There’s a catchphrase,” Adams says: “Computation is the new optics.”