Enriched with Information

New theory doesn’t limit consciousness to the brain

Demystifying the Mind

This feature is the final installment in a three-part series on the scientific struggle to explain consciousness.

But Tononi’s most profound insight didn’t spring from this huge cache of scientific data. It came instead from a moment of quiet reflection. When he stepped away from his scanners and data and the hustle of the lab and thought — deeply — about what it was like to be conscious, he realized something: Each split second of awareness is a unified, holistic experience, completely different from any experience before or after it.

From that observation alone, Tononi intuited a powerful new theory of consciousness, a theory based on the flow of information. He and others believe that mathematics — in particular, a set of equations describing how bits of data move through the brain — is the key to explaining how the mind knits together an experience.

Because of its clarity, this informational intuition has resonated with other researchers, inspiring a new way to see the consciousness problem. “This insight was very important to me,” says Anil Seth of the Sackler Centre for Consciousness Science at the University of Sussex in Brighton, England. “I thought, there’s something right about all this.”

So far, the new equations exist only as prototypes, like model airplanes that can’t fly but still help clarify how jumbo jets stay aloft. But researchers believe that these prototypes may one day lead to a tool that can measure consciousness, even when signs of it are ambiguous. Already, researchers are testing the math that would underpin such a tool in human brains as people lose awareness.

Tononi’s idea, though, extends beyond humans. By moving from nerve cells to the math that describes them, he has untethered the theory of consciousness from the physical brain. Like amorphous Silly Putty, the equations can be molded to fit any system. With the right calculations, scientists could test whether a tornado with its innumerable dust particles circling in unison, 2050’s iPhone or the trillions of megabytes of information zooming around the Internet could have some degree of consciousness.

In the same way that a thermometer made plain the concept of temperature (for a boiling pot of water or a person’s body), a consciousness yardstick could ultimately lead to a better understanding of the substance of consciousness itself.

Because Tononi’s theory focuses on the very essence of awareness, neuroscientist Christof Koch believes that it is “the only true theory of consciousness.”

Scientists have amassed an impressive list of the brain changes that occur when consciousness comes or goes, Koch says, but such a list can’t provide a full explanation of the mysterious process from which conscious experience emerges. “Why is it in this area and not that area? What is it about this area, or this brain, or these neurons that give rise to conscious sensation?” says Koch, of Caltech and the Allen Institute for Brain Science in Seattle. What’s needed is an answer to the all-important “why?” “The only theory that does that in a fundamental way is Tononi’s.”

All about integration

Tononi’s theory defines consciousness as the capacity of a system — any system — to connect and use information. The idea rests on two simple observations: First, a single moment of human experience is one of the most information-heavy things in the universe, an observation so simple that it’s often overlooked, Tononi says.

People usually talk about information as something gained: Infomercials entreat viewers to call for more information, criminal investors acquire insider information and spies gather clandestine information. But technically, information has more to do with what’s lost. In its professional job description, it is a measure of how much uncertainty has been whittled away.

Before it’s locked in, any single moment of existence could play out in a nearly limitless number of ways. But the instant an experience gels, the options vanish. Simply existing — getting out of bed on a Sunday morning, watching a touchdown during an afternoon football game or just staring off into space — rules out all alternatives.
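The accounting behind "uncertainty whittled away" can be written down in a few lines. In Shannon's sense, the information an outcome carries is the drop in entropy, measured in bits, from before the outcome is known to after. The Python sketch below uses a fair die instead of a moment of experience. The function names are illustrative and are not part of Tononi's formalism.

```python
import math

def entropy_bits(probs):
    """Shannon entropy, in bits, of a discrete distribution (zero-probability terms skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def information_gained(before, after):
    """Uncertainty removed when the distribution `before` narrows to `after`."""
    return entropy_bits(before) - entropy_bits(after)

# A fair six-sided die: six equally likely outcomes before the roll.
before = [1 / 6] * 6
# Once the roll is seen, one outcome is certain and the other five are ruled out.
after = [1.0, 0, 0, 0, 0, 0]
# Learning only "the roll was even" leaves three equally likely options.
halfway = [1 / 3] * 3

print(round(information_gained(before, after), 3))    # 2.585, i.e. log2(6) bits
print(round(information_gained(before, halfway), 3))  # 1.0 bit
```

The more alternatives an outcome rules out, the more bits it carries. This is why a single, fully settled moment can be so information-heavy.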

Even the brain of a person in a chair in absolute darkness is an information gold mine. “When you see pure darkness, it’s a particular scene that differs from trillions of other scenes in a particular way,” Tononi says. “And therefore it becomes super meaningful.”
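Here is a rough number for Tononi's remark. Assume, purely for illustration, that the dark scene is one of $N = 10^{12}$ equally likely alternatives, taking "trillions" at its smallest. Picking out one of $N$ equally likely options carries $\log_2 N$ bits:

```latex
I = \log_2 N = \log_2 10^{12} = 12 \log_2 10 \approx 12 \times 3.322 \approx 39.9 \ \text{bits}
```

On this toy count, even seeing pure darkness rules out about 40 bits' worth of alternatives. The figure depends entirely on the assumed $N$.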

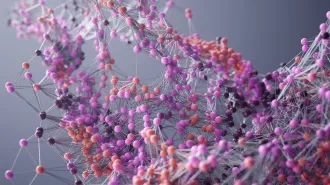

For tribes of specialized nerve cells in the human brain, no subtle distinction is too small: Groups of neurons form cottage industries that can tell cobalt from royal blue, remember your high school lab partner’s name and assess whether the Giants receiver stepped out-of-bounds as he made his catch.

But it’s not enough for a brain to have vast stores of information stashed away in isolated pockets. Tononi’s second principle is that those diverse specialists must all talk to one another. Integration is what makes every conscious experience a unified whole. “Every experience is what it is. You cannot break it into independent pieces,” Tononi says. “You cannot experience the right side independent of the left side, the color without the shape.”

Together, these two concepts — information and integration — describe what consciousness actually feels like, Seth says. “The relevant brain mechanisms are somehow bound together, but somehow doing their own thing, so you get the richness of the experience,” he says. A beehive’s forager bees, nurse bees and queen all have specialized abilities and knowledge but are required to work together to make the hive buzz along. A similar thing may be going on in consciousness.

Tononi measures the combination of these two properties, information and integration, with a numerical value that he calls phi. This single number represents the information that exists above and beyond that stored by all the individual pieces of a system. For the human brain, with its vast constellations of nerve cell connections and huge repertoire of states, calculating an exact phi is impossible in practice; you’d have to add up all the useful data that’s free to flow among all the links in the system and subtract what’s held by each subsystem alone.
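The idea of information held "above and beyond the individual pieces" can be made concrete with a deliberately simplified stand-in. This is not Tononi's published phi, which is defined in terms of a system's causal mechanisms rather than observed statistics. Split a system $X$ into two parts $A$ and $B$. The mutual information $I(A;B)$ measures how much the whole holds beyond what the two parts hold separately. A phi-like quantity then looks for the weakest such cut:

```latex
I(A;B) = H(A) + H(B) - H(A,B), \qquad
\Phi_{\text{toy}}(X) = \min_{\{A,B\}\ \text{a bipartition of}\ X} I(A;B)
```

The search over cuts alone explains why exact calculation is out of reach for a brain. A system of $n$ elements can be split into two nonempty parts in $2^{n-1} - 1$ ways, so the work grows exponentially before a single entropy is computed.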

But even without an exact value for phi, scientists can still get a rough idea of what integrated information — or its absence — would look like in the human brain.

Finding phi

If integration is crucial to consciousness, then altered connections in a person’s brain should mean altered awareness. One of the brain’s most well-traveled connections is the corpus callosum, an information superhighway made of 200 million fibers shuttling information between the two brain hemispheres. In some severe cases of epilepsy, surgeons cut this link in an attempt to stop seizure signals from moving across it.

Such split-brain patients were studied by Roger Sperry and Michael Gazzaniga at Caltech in the early 1960s. Gazzaniga and Sperry found that with a severed corpus callosum, people essentially had two conscious experiences — one for the left side of space and one for the right. Without this connector, for instance, a person can’t easily name an object in the left side of space, because the right hemisphere, which identifies the object, can’t tell the speech center in the left hemisphere what it sees.

“We know experimentally that there are two conscious minds in this one skull,” Koch says. “One skull, two conscious minds.”

But linking everything to everything else doesn’t do the trick, either. When people are unconscious in the throes of a seizure, nerve cell behavior is extremely coordinated, like millions of soldiers marching in lockstep. The system is highly integrated, but it loses all diversity. Specialized coalitions of nerve cells, like those that distinguish shades of blue, are stripped of their roles. Instead of possessing richly varied information, the cells are either all on or all off.
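The two failure modes just described, a severed connection and lockstep firing, can be contrasted with a toy "whole minus parts" score. Here that score is the minimum, over every way of splitting the system in two, of the mutual information between the halves. The Python sketch below uses invented four-unit binary systems, not brain data. "Diversity" is simply the entropy of the whole system's states, and `toy_phi` is a hypothetical simplification, not Tononi's phi.

```python
import math
from collections import Counter
from itertools import combinations, product

def entropy_bits(dist):
    """Entropy in bits of a {state_tuple: probability} distribution."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def marginal(dist, units):
    """Distribution over the states of the selected units only."""
    out = Counter()
    for state, p in dist.items():
        out[tuple(state[i] for i in units)] += p
    return out

def mutual_information(dist, part_a, part_b):
    h_a = entropy_bits(marginal(dist, part_a))
    h_b = entropy_bits(marginal(dist, part_b))
    return h_a + h_b - entropy_bits(marginal(dist, part_a + part_b))

def toy_phi(dist, n):
    """Hypothetical proxy: weakest mutual information across all two-way splits."""
    units = tuple(range(n))
    best = math.inf
    # Keep unit 0 in part A so each unordered split is visited exactly once.
    for k in range(n - 1):
        for rest in combinations(units[1:], k):
            part_a = (0,) + rest
            part_b = tuple(u for u in units if u not in part_a)
            best = min(best, mutual_information(dist, part_a, part_b))
    return best

def uniform(states):
    states = list(states)
    return {s: 1 / len(states) for s in states}

n = 4
systems = {
    # Every unit on its own, as if all links were cut.
    "independent": uniform(product((0, 1), repeat=n)),
    # Seizure-like: all units on or all off together.
    "lockstep": uniform([(0,) * n, (1,) * n]),
    # Coupled but varied: any three units fix the fourth (even parity).
    "parity": uniform(s for s in product((0, 1), repeat=n) if sum(s) % 2 == 0),
}

for name, dist in systems.items():
    print(f"{name}: diversity = {entropy_bits(dist):.1f} bits, toy phi = {toy_phi(dist, n):.1f} bits")
# independent: diversity = 4.0 bits, toy phi = 0.0 bits
# lockstep: diversity = 1.0 bits, toy phi = 1.0 bits
# parity: diversity = 3.0 bits, toy phi = 1.0 bits
```

Cutting every link drives the toy integration to zero. Lockstep firing keeps integration but collapses the repertoire to two states. Only the parity-coupled system has both. The lockstep system scores as high on this proxy as the richer one, which is a reminder of how much cruder it is than Tononi's measure.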

So far, the best evidence for Tononi’s theory comes from experiments he and others have performed on people in various states of consciousness, such as in a deep sleep, anesthetized or in a vegetative state. In awake people, a jolt of current induced at one spot on the brain with transcranial magnetic stimulation, or TMS, sets off activity that moves around, traveling and morphing and shifting for about 300 milliseconds. Yet in people who are in states of diminished consciousness, this TMS signal peters out quickly. Tononi and his colleagues think this signal represents the brain’s ability to integrate information, and they are working on ways to convert the signal into an estimate that might capture some scrap of a full-fledged phi.
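One simple way to turn a spreading response versus a fading one into a number is to ask how compressible the response is. The Python sketch below counts phrases in a Lempel-Ziv (LZ76) parsing of a binarized response. Activity that quickly dies into silence parses into few phrases, while activity that keeps changing needs many. This is an illustrative assumption about how such a score could work, not the procedure described by Tononi's group, and the response strings are invented.

```python
import random

def lz76_phrase_count(bits: str) -> int:
    """Number of phrases in the Lempel-Ziv (1976) parsing of a binary string."""
    i, phrases, n = 0, 0, len(bits)
    while i < n:
        length = 1
        # Grow the phrase while it can still be copied from earlier text
        # (the copy source may overlap the phrase itself, as LZ76 allows).
        while i + length <= n and bits[i:i + length] in bits[:i + length - 1]:
            length += 1
        phrases += 1
        i += length
    return phrases

# Hypothetical binarized responses for one channel (1 = significant activity).
fading = "1111" + "0" * 60  # brief response, then silence
rng = random.Random(0)
sustained = "".join(rng.choice("01") for _ in range(64))  # keeps changing

print(lz76_phrase_count(fading))  # 3
print(lz76_phrase_count(sustained))
```

A real score would need to handle many channels and normalize for signal length. The sketch only shows why a response that "peters out" scores low.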

Seth, of the Sackler Centre, is pursuing a different way of measuring integrated information in the complex interactions among brain cells. Instead of assessing consciousness as a static capacity at any given moment, his method treats consciousness as a property that changes continuously. “For me, consciousness is a process, the properties of a system’s dynamics as it unfolds over time,” he says.

Because Seth’s measure, called causal density, relies on some starting assumptions and comes up with an average instead of an exact value at a single point in time, it is easier to calculate than phi.

In a study reported online in January in PLoS ONE, Seth’s team estimated causal density in human brains. Seven healthy volunteers lost consciousness after they were anesthetized with the drug propofol. Researchers monitored electrical signals in two brain regions known to change behavior as anesthesia takes hold, the anterior and posterior cingulate cortices.

Unexpectedly, when consciousness slipped away, causal density scores went up for these two regions. The local increases could be accompanied by an overall decrease of information integration, Seth says.

Animat insight

Though experiments probing the information structure of the human brain are still in their early stages, mathematical simulations have shown that integrated information can in fact be measured in other systems. Tononi and his colleagues devised a system so simple that its phi can be calculated — a simulated animal called an animat. Relying on sensors that detected the environment, actuators that allowed it to move and places to store data as it learned, this animat worked its way through a computer maze. The animat also possessed an ability that most living organisms take for granted: It could gradually evolve over 50,000 generations of maze running.

At the start, the animat had a hard time navigating. But around generation 14,000, it got good. Along with this performance boost, the animat’s phi, the amount of information successfully shuttled among its constituent parts, went up. Different bits learned to communicate. By generation 49,000, the animat whizzed through the maze with its high phi, Tononi and colleagues reported in October in PLoS Computational Biology.

The animat is a simple case. Even at its highest, its phi wouldn’t compare to that of a person. But this link between high phi and performance might reflect a deeper truth about why consciousness has evolved. With integrated information, the little critter can figure out things about its environment and predict what’s coming.

Predictive powers may be one of the prime reasons consciousness exists among humans. “If you see a table and a computer monitor in front of you, you know why it’s there and what it means to you, but also what you can do with it and what might happen next,” Tononi says. Each experience calls to mind loads of different associations, memories and thoughts. “All of this, of course, is part and parcel of why consciousness is adaptive, why it is evolutionarily something that makes a lot of sense. It allows us to understand the past and predict the future.”

Consciousness expands

As the example of the animat suggests, integrated information may not be restricted to objects that have a brain. Such a simple finding has a big implication: If Tononi is right, and integrated information actually is consciousness, then consciousness itself is no longer restricted to the inside of a head. As long as it has the right informational specs, any system, whether it’s made of nerve cells, silicon chips or light beams, could possess consciousness.

Such a realization alters the consciousness conversation. In a world full of objects that can move information around quickly — an octopus’s brain, a tree’s root system, the Internet — the discussion of whether an entity is conscious may lose its meaning. Instead, the question becomes, “How conscious is it?” Small systems with just a few bits of information may have a tiny sliver of consciousness, while large systems such as human brains have a whopping helping.

Expanding the umbrella of consciousness to include systems that don’t have brains, a view echoing that espoused by some ancient religions and more modern versions of panpsychism, is an uncomfortable stretch for many researchers. Seth, for one, believes the mathematical language of consciousness offers interesting descriptions but stops short of saying that integrated information is actually the thing itself. “The only systems that we know of in the universe that generate consciousness are biological,” he says.

Others have more unorthodox ideas. Koch says he might be wrong, but he believes that consciousness, like an electron’s charge, is something inherent in the fabric of reality that gives shape, structure and meaning to the world. “Consciousness is not an emergent feature of the universe,” he says. “It’s a fundamental property.”

Whether consciousness is woven into the very nature of the cosmos is a grand question that, for now, remains unanswerable, Koch says. But that hasn’t hindered real progress on other questions about the mind. Systematic studies and leaps of insight have revealed what’s required of a conscious brain, traced a particular sight as it journeys into awareness and produced a crop of theories that hint at the true nature of consciousness.

“We make progress by looking where the light is bright,” Koch says. “But ultimately, we have to look farther and deeper.”

Guided by theoretical insights, scientists may someday escape the confusion that comes from being their own subjects, and see clearly into the minds of man. An understanding of what lies within the skull may then inspire scientists to shift their gazes outward, where an entirely new and mysterious world awaits.

Consciousness, in theory

While a new theory of awareness relies on general mathematical principles, many existing ideas (some below) build on features of the human brain.

Global workspace: First proposed by Bernard Baars of the Neurosciences Institute in La Jolla, Calif., this theory posits that consciousness emerges when information residing in a centrally accessible brain system is broadcast to other parts of the brain.

Neural Darwinism: Large and diverse cohorts of nerve cells compete with each other, getting stronger or weaker in response to selective constraints, Nobel laureate Gerald Edelman first proposed in 1978. This flux generates consciousness as the mind becomes better able to respond to its environment.

Convergent/divergent zones: Antonio Damasio of the University of Southern California suggests that brain regions that send and receive information help the mind form memories, emotions and other mental imagery. Damasio also thinks that consciousness requires a sense of self.

Intermediate level: Proposed by Ray Jackendoff of Tufts University and championed by Jesse Prinz of the City University of New York, this theory says that data must be handled at intermediate brain levels in order to reach consciousness. Only info that’s neither too specific nor too abstract reaches awareness.