Mind to motion

Brain-computer interfaces promise new freedom for the paralyzed and immobile

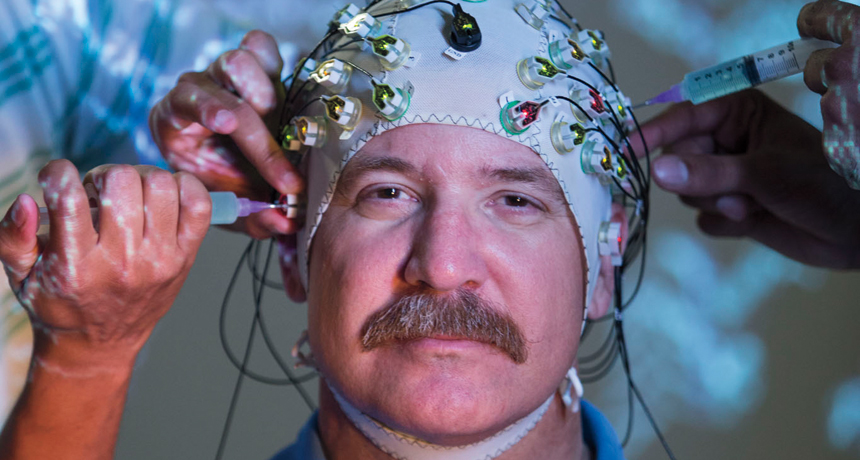

WALKING WITH ROBOTS Spinal cord–injury patient Gene Alford takes a supported step in NeuroRex, a thought-controlled robotic walking device. An electrode cap detects electrical signals from his brain that, decoded by a computer, tell the device to move.

Felix Sanchez