Can fake faces make AI training more ethical?

Synthetic images may offer hope for training private, fair face recognition

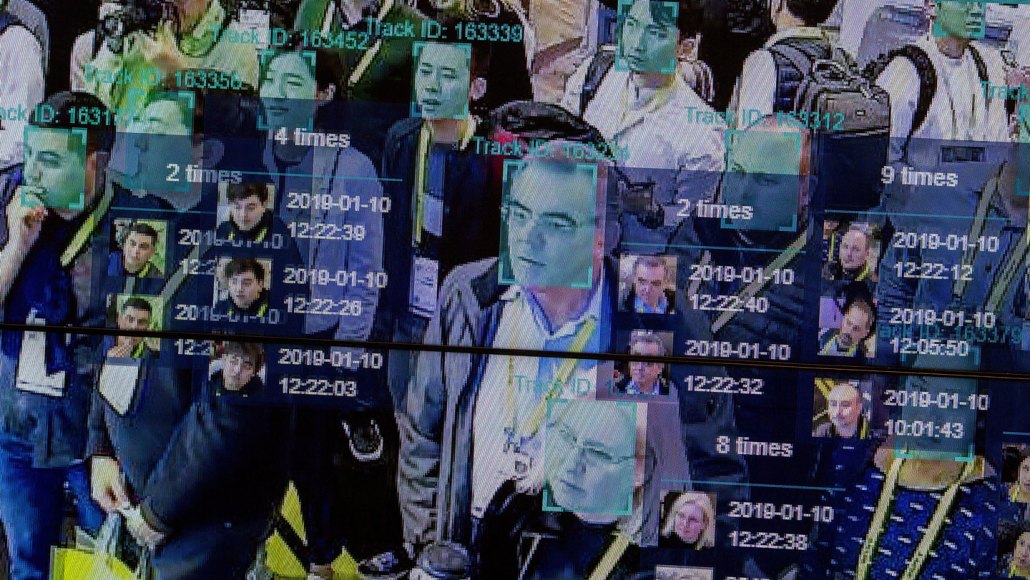

To achieve high accuracy, facial recognition models must be trained on millions of photos of people’s faces.

DAVID MCNEW/Contributor/Getty Images

AI has long been guilty of systematic errors that discriminate against certain demographic groups. Facial recognition was once one of the worst offenders.

For white men, it was extremely accurate. For others, the error rates could be 100 times as high. That bias has real consequences — ranging from being locked out of a cell phone to wrongful arrests based on faulty facial recognition matches.

Within the past few years, that accuracy gap has dramatically narrowed. “In close range, facial recognition systems are almost quite perfect,” says Xiaoming Liu, a computer scientist at Michigan State University in East Lansing. The best algorithms now can reach nearly 99.9 percent accuracy across skin tones, ages and genders.

But high accuracy has a steep cost: individual privacy. Corporations and research institutions have swept up the faces of millions of people from the internet to train facial recognition models, often without their consent. Not only are the data stolen, but this practice also potentially opens doors for identity theft or oversteps in surveillance.

To solve the privacy issues, a surprising proposal is gaining momentum: using synthetic faces to train the algorithms.

These computer-generated images look real but do not belong to any actual people. The approach is in its early stages; models trained on these “deepfakes” are still less accurate than those trained on real-world faces. But some researchers are optimistic that as generative AI tools improve, synthetic data will protect personal data while maintaining fairness and accuracy across all groups.

“Every person, irrespective of their skin color or their gender or their age, should have an equal chance of being correctly recognized,” says Ketan Kotwal, a computer scientist at the Idiap Research Institute in Martigny, Switzerland.

How artificial intelligence identifies faces

Advanced facial recognition first became possible in the 2010s, thanks to a new type of deep learning architecture called a convolutional neural network. CNNs process images through many sequential layers of mathematical operations. Early layers respond to simple patterns such as edges and curves. Later layers combine those outputs into more complex features, such as the shapes of eyes, noses and mouths.

In modern face recognition systems, a face is first detected in an image, then rotated, centered and resized to a standard position. The CNN then glides over the face, picks out its distinctive patterns and condenses them into a vector — a list-like collection of numbers — called a template. This template can contain hundreds of numbers and “is basically your Social Security number,” Liu says.

To do all of this, the CNN is first trained on millions of photos showing the same individuals under varying conditions — different lighting, angles, distance or accessories — and labeled with their identity. Because the CNN is told exactly who appears in each photo, it learns to position templates of the same person close together in its mathematical “space” and push those of different people farther apart.

This representation forms the basis for the two main types of facial recognition algorithms. There’s “one-to-one”: Are you who you say you are? The system checks your face against a stored photo, like when unlocking a smartphone or going through passport control. The other is “one-to-many”: Who are you? The system searches for your face in a large database to find a match.

But it didn’t take researchers long to realize these algorithms don’t work equally well for everyone.

Why fairness in facial recognition has been elusive

A 2018 study was the first to drop the bombshell: In commercial facial classification algorithms, the darker a person’s skin, the more errors arose. Even famous Black women were classified as men, including Michelle Obama by Microsoft and Oprah Winfrey by Amazon.

Facial classification is a little different than facial recognition. Classification means assigning a face to a category, such as male or female, rather than confirming identity. But experts noted that the core challenge in classification and recognition is the same. In both cases, the algorithm must extract and interpret facial features. More frequent failures for certain groups suggest algorithmic bias.

In 2019, the National Institute of Science and Technology offered further confirmation. After evaluating nearly 200 commercial algorithms, NIST found that one-to-one matching algorithms had just a tenth to a hundredth of the accuracy in identifying Asian and Black faces compared with white faces, and several one-to-many algorithms produced more false positives for Black women.

The errors these tests point out can have serious, real-world consequences. There have been at least eight instances of wrongful arrests due to facial recognition. Seven of them were Black men.

Bias in facial recognition models is “inherently a data problem,” says Anubhav Jain, a computer scientist at New York University. Early training datasets often contained far more white men than other demographic groups. As a result, the models became better at distinguishing between white, male faces compared with others.

Today, balancing out the datasets, advances in computing power and smarter loss functions — a training step that helps algorithms learn better — have helped push facial recognition to near perfection. NIST continues to benchmark systems through monthly tests, where hundreds of companies voluntarily submit their algorithms, including ones used in places like airports. Since 2018, error rates have dropped over 90 percent, and nearly all algorithms boast over 99 percent accuracy in controlled settings.

In turn, demographic bias is no longer a fundamental algorithmic issue, Liu says. “When the overall performance gets to 99.9 percent, there’s almost no difference among different groups, because every demographic group can be classified really well.”

While that seems like a good thing, there is a catch.

Could fake faces solve privacy concerns?

After the 2018 study on algorithms mistaking dark-skinned women for men, IBM released a dataset called Diversity in Faces. The dataset was filled with more than 1 million images annotated with people’s race, gender and other attributes. It was an attempt to create the type of large, balanced training dataset that its algorithms were criticized for lacking.

But the images were scraped from the photo-sharing website Flickr without asking the image owners, triggering a huge backlash. And IBM is far from alone. Another big vendor used by law enforcement, Clearview AI, is estimated to have gathered over 60 billion images from places like Instagram and Facebook without consent.

These practices have ignited another set of debates on how to ethically collect data for facial recognition. Biometric databases pose huge privacy risks, Jain says. “These images can be used fraudulently or maliciously,” such as for identity theft or surveillance.

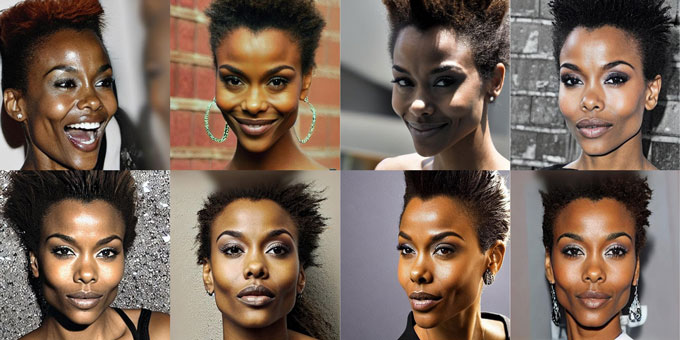

One potential fix? Fake faces. By using the same technology behind deepfakes, a growing number of researchers think they can create the type and quantity of fake identities needed to train models. Assuming the algorithm doesn’t accidentally spit out a real face, “there’s no problem with privacy,” says Pavel Korshunov, a computer scientist also at the Idiap Research Institute.

Creating the synthetic datasets requires two steps. First, generate a unique fake face. Then, make variations of that face under different angles, lighting or with accessories. Though the generators that do this still need to be trained on thousands of real images, they require far fewer than the millions needed to train a recognition model directly.

Now, the challenge is to get models trained with synthetic data to be highly accurate for everyone. A study submitted July 28 to arXiv.org reports that models trained with demographically balanced synthetic datasets were better at reducing bias across racial groups than models trained on real datasets of the same size.

In the study, Korshunov, Kotwal and colleagues used two text-to-image models to each generate about 10,000 synthetic faces with balanced demographic representation. They also randomly selected 10,000 real faces from a dataset called WebFace. Facial recognition models were individually trained on the three sets.

When tested on African, Asian, Caucasian and Indian faces, the WebFace-trained model achieved an average accuracy of 85 percent but showed bias: It was 90 percent accurate for Caucasian faces and only 81 percent for African faces. This disparity probably stems from WebFace’s overrepresentation of Caucasian faces, Korshunov says, a sampling issue that often plagues real-world datasets that aren’t purposefully trying to be balanced.

Though one of the models trained on synthetic faces had a lower average accuracy of 75 percent, it had only a third of the variability of the WebFace model between the four demographic groups. That means that even though overall accuracy dropped, the model’s performance was far more consistent regardless of race.

This drop in accuracy is currently the biggest hurdle for using synthetic data to train facial recognition algorithms. It comes down to two main reasons. The first is a limit in how many unique identities a generator can produce. The second is that most generators tend to generate pretty, studio-like pictures that don’t reflect the messy variety of real-world images, such as faces obscured by shadows.

To push accuracy higher, researchers plan to explore a hybrid approach next: Using synthetic data to teach a model the facial features and variations common to different demographic groups, then fine-tuning that model with real-world data obtained with consent.

The field is advancing quickly — the first proposals to use synthetic data for training facial recognition models emerged only in 2023. Still, given the rapid improvements in image generators since then, Korshunov says he’s eager to see just how far synthetic data can go.

But accuracy in facial recognition can be a double-edged sword. If inaccurate, the algorithm itself causes harm. If accurate, human error can still come from overreliance on the system. And civil rights advocates warn that too-accurate facial recognition technologies could indefinitely track us across time and space.

Academic researchers acknowledge this tricky balance but see the outcome differently. “If you use a less accurate system, you are likely to track the wrong people,” Kotwal says. “So if you want to have a system, let’s have a correct, highly accurate one.”