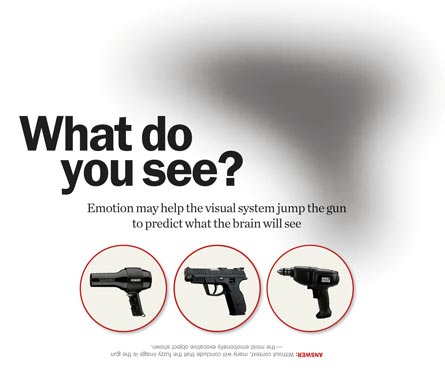

What do you see?

Emotion may help the visual system jump the gun to predict what the brain will see

You are hiking in the mountains when, out of the corner of your eye, you see something suspiciously snakelike. You freeze and look more carefully, this time identifying the source of your terror: a stick.

Yet you could have sworn it was a snake.

The brain may play tricks, but in this case it was actually doing you a favor. The context — a mountain trail — was right for a snake. So your brain was primed to see one. And the stick was sufficiently snakelike to make your brain jump to a visual conclusion.

But it turns out emotions are involved here, too. A fear of snakes means that given an overwhelming number of items to look at — rocks, shrubs, a hiking buddy — “snake” would take precedence.

Studies show that the brain guesses the identity of objects before it has finished processing all the sensory information collected by the eyes. And now there is evidence that how you feel may play a part in this guessing game. A number of recent studies show that these two phenomena — the formation of an expectation about what one will see based on context and the visual precedence that emotions give to certain objects — may be related. In fact, they may be inseparable.

New evidence suggests that the brain uses “affect” (pronounced AFF-ect) — a concept researchers use to talk about emotion in a cleaner, more clearly defined way — not only to tell whether an object is important enough to merit further attention, but also to see that object in the first place.

“The idea here is not that if we both see someone smile we would interpret it differently,” says Lisa Feldman Barrett of Boston College. “It’s that you might see the smile and I might completely miss it.” Barrett and Moshe Bar of Harvard Medical School and Massachusetts General Hospital in Boston outline this rather unexpected idea in the May 12 Philosophical Transactions of the Royal Society B: Biological Sciences.

Work connecting emotion to visual processing could have an impact on how scientists understand mental disorders and even personality differences, Barrett says. Some researchers say, for example, that autistic children might literally be failing to see critical social cues. The research might also have implications for understanding traits such as extroversion and introversion: Studies suggest extroverts may view the world through rose-colored glasses, seeing the good more than the bad.

Not-so-objective recognition

Scientists have long been interested in what role emotions play in recognizing objects, a process of perception that involves both collecting visual information about the world and higher-order workings of the brain.

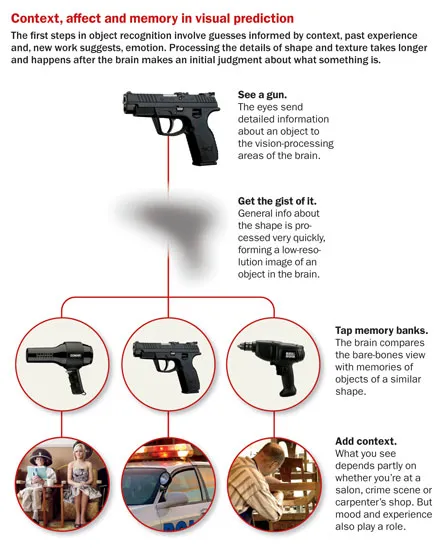

It takes a few hundred milliseconds for the brain to come to a final decision about what the eyes see. But some basic information about an object, such as its shape and its position in front of the viewer, is sent to the brain at warp speed. The general shape of a gun, for example, is similar to that of a hair dryer or a power drill. Only with additional processing time will the brain be able to confirm which of the three it is looking at. In the meantime, the get-it-quick part of the brain’s visual-processing system can send enough information for the brain to take a good, if not always correct, guess at what it’s seeing. This guessing process, called visual prediction, is attracting attention from scientists interested in understanding if and how emotion influences visual perception.

In the traditional view, perception, judgment and emotions are considered separate processes, with emotions coming last in the procession. One perceives. One judges, using reason, what best to do with the information collected. And one keeps one’s emotions, as much as possible, out of the picture.

But in the early 1980s, some researchers began to think that affect plays an earlier role in object recognition. Researchers were struck by experiments in which people seemed to feel an emotion without being able to identify the object that had elicited it. In 1980 the late psychologist Robert Zajonc, then at the University of Michigan in Ann Arbor, wrote that it is possible that people can “like something or be afraid of it before [they] know precisely what it is.” Other psychologists, including the late Richard Lazarus of the University of California, Berkeley, continued to advocate the traditional view. They held that rational thought was still the prime mechanism for identifying a baseball or telling an umbrella from a tree, and that emotion played a role only after visual processing was essentially complete.

Then work showed that emotions were important for a variety of processes that, like vision, had previously been thought to belong solely to the realm of cognition. In the mid-1990s, studies began to suggest that decision making, long held to be a prime example of a mental process best accomplished without the interference of emotions, could not only benefit from emotions, but also might require them.

Affect helps the brain weigh the value of objects, letting people know how to use or interact with what they encounter in the world. Simply seeing a banana does not tell you that you like bananas and want to eat one. The vision-processing areas of the brain are very “Spock-like,” Bar says. They don’t know what is good or bad, or important or unimportant. In the parlance of psychologists, it is affect that conveys that information.

“Without affect you might be dead very quickly,” Bar says.

Affected perception

Whereas emotions describe complex states of mind, such as anger or happiness, affect refers to something much more basic. Psychologists describe it as a bodily response that is experienced as pleasant or unpleasant, comfortable or uncomfortable, a feeling of being tired in the morning or wound up at night. These responses are the ingredients for emotions, but they also serve as less complex feelings that people experience even when they think they feel “nothing.”

“Affect is the color of our world,” says psychologist Eliza Bliss-Moreau of the University of California, Davis.

Scientists are now learning that affect may play a fundamental role in object perception, regardless of whether the objects in question are “affective” or not. An inherently emotion-laden object like a gun or a basket of puppies (or a snake) would be expected to excite the emotion-processing areas of the brain. But even “neutral” objects such as traffic lights and wristwatches have been shown to trigger a certain amount of affect in people. For example, in a 2006 study published in Psychological Science, Bar and his then-Harvard colleague Maital Neta showed that people appear to prefer smooth, curved objects over sharp-edged ones, though the people tested could not always articulate a reason for their preference.

“It has become pretty clear in the last 20 years that our judgments and ability to judge neutral information is contaminated — affected — by emotional values, by affective influences,” Bar says.

Affect has also been shown to play a role in calling the brain’s attention to important versus trivial objects, especially in terms of vision. Affect helps the brain prioritize.

“You enter a new scene, and there are vast amounts of information to analyze,” Bar says. “Now you’re drinking from a fire hydrant.” People need something to tell them what is important. “If something looks sharp or snakelike or menacing, you stop analyzing the surface texture of the sofa,” he says. Something with greater emotional relevance — positive or negative — takes priority.

The brain pays more attention to objects that evoke an affective response than it does to objects that don’t have that “extra juice,” says cognitive neuroscientist Luiz Pessoa of Indiana University Bloomington.

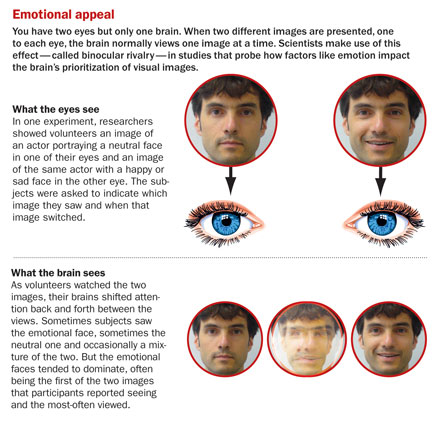

In binocular rivalry studies, two different images are shown to a viewer, one image to each eye. Because the brain can consciously perceive only one of the two images at a time, perception switches back and forth between them. But in studies in which one of the two images evokes an emotional response while the other does not (say, a fearful face and a neutral one), the evocative image holds the brain’s attention longer. People actually report seeing that image more often than the neutral one. Writing in a 2006 issue of Cognition and Emotion, Georg Alpers and Paul Pauli of the University of Würzburg in Germany showed that, in binocular rivalry studies, objects associated with positive or negative affect were seen first and for longer periods of time than objects that evoked no emotion.

In Emotion in 2007, Alpers and his Würzburg colleague Antje Gerdes reported similar results for study volunteers viewing faces showing emotion versus faces with neutral expressions.

Studies from Barrett’s group have recently gone a step further. Her team has found that when an object that normally would not evoke a feeling is given an emotional charge in the laboratory, that object gets an attentional boost. In a paper published in Emotion in 2008, Bliss-Moreau, Barrett and Christopher Wright of Massachusetts General Hospital showed that emotionally neutral faces paired with a sentence of gossip elicited an affective response in participants. For instance, the face of a man who, volunteers had been told, once defecated on a crowded street was recognized faster than equally expressionless faces linked with more benign gossip.

A key question for many in the field is whether this redirection of the brain’s resources happens early in the process of detection or later. Getting involved earlier could mean that affect helps the brain see things before they have been consciously seen. Affect could help the brain make predictions.

Guessing games

Your brain is constantly making predictions, many scientists think. But these are not the kind of predictions that let you bet on the winning horse or correctly divine that a meteor will hit the Earth in six years on a Thursday. It’s a more basic, everyday kind of prediction, based on memories of past experience and available evidence.

Some of the best support for the view that affect might be involved in visual predictions, Barrett says, comes from the physical layout of the brain. Just as highways on a map show well-traveled routes between cities, nerve fiber pathways linking different parts of the brain hint at where high volumes of information pass through. In the human brain, one hub where nerve highways converge is the orbitofrontal cortex, or OFC, which lies behind the eyes.

The OFC has strong connections to the “get-the-gist-of-it” visual pathways that enable a person to identify low-level visual features of an object quickly. This hub also has connections to areas that bring in information about how the body is feeling, whether it is excited or uncomfortable or numb or in pain. Given these connections, the OFC could mediate an interaction between the quick, get-the-gist-of-it part of the visual pathway and the “how am I feeling right now?” parts of the brain, Bar and Barrett argue in their May paper.

And this connection might help the brain come up with a rough sense of what an object is before lower-level processing areas have finished passing on all that information to higher-level ones. This, Bar says, could be the route to a visual prediction.

Timing is also an issue. If affect has an early influence on perception — within the first 60 to 120 milliseconds after a person’s eyes see an object — then it could indeed have an effect on the brain’s predictions. But studies that try to gauge the speed of the brain’s affective response have produced a range of results, showing activity as early as 30 milliseconds and as late as more than 200 milliseconds after a visual stimulus is shown. The variability has made it difficult to pin down exactly when affect may play a role, Pessoa says.

Still, some studies do suggest an early role for emotion in vision. Pessoa and his Indiana University colleague Srikanth Padmala led a study that trained volunteers to associate an emotion with a textured pattern by pairing an image of the pattern with a mild electric shock. Volunteers were then shown these textured patterns along with other, novel patterns that had not been associated with the shocks. Researchers observed the volunteers’ brain activity using functional MRI as both sets of patterns were shown faintly on a screen for a mere 50 milliseconds.

The subjects said they did not see the affect-free patterns but did report seeing the patterns that had been paired with the electric shocks. Functional MRI confirmed the reports: Early vision-processing areas of the brain that are involved in the first levels of identifying objects were indeed lighting up when people said they saw the patterns, the researchers reported in 2008 in the Journal of Neuroscience.

People’s moods also may influence what they see and don’t see. Barrett’s group is now investigating how a person’s mood affects whether faces showing positive or negative emotion get more or less attention. In an ongoing binocular rivalry study, researchers put people into either a negative or a positive state of mind by showing them disturbing or uplifting pictures, or by asking them to remember a sad or happy experience. Subjects are then shown an image of an emotionally expressive face to one eye and a neutral house to the other. Preliminary results suggest that people in a low mood see all the faces, both positive and negative, instead of the house. But people in a good mood see the happier faces instead of the house more often than they see the sad or angry ones. The results suggest that changing people’s moods may change how the brain processes information.

Visual impact

To Barrett, getting a better handle on the relationship between seeing and feeling has the potential to improve understanding of many different disorders. “You can’t find a mental illness that doesn’t involve some problem with affect,” she says.

For example, some researchers think autism might be related to an inability to pay attention to the right social cues. “It’s possible that kids with autism have checked out of the social environment because they physically don’t see social cues,” says Bliss-Moreau, who is part of a team that studies macaques with brain lesions to learn about disorders such as autism. “Or they see the cues but don’t encode them correctly.”

For people with autism, a facial expression showing emotion may not carry the value and meaning that most people learn to associate with it, and as a result it may not dominate the vision-processing centers of the brain as much as it would in a person without the disorder.

Anxiety, depression and even extroversion and introversion could be far better explained in terms of what people perceive, rather than in terms of their behavior, Bliss-Moreau contends.

After all, it appears that whether you see the stick or the snake depends on your mood as well as your past experiences.

“How you sample information from the world, and understand what it means, is going to be different” depending on all these factors, Barrett says. “It’s literally going to change what you see.”