Meet the Growbots

Social robots take baby steps toward humanlike smarts

Boldly going where most computer scientists fear to tread, Rajesh Rao watches intently as 1-year-olds lock eyes with their mothers in a developmental psychology lab at the University of Washington in Seattle. Time after time, tiny upturned heads tilt in whatever direction the caretakers look. Naturally, Rao thinks of robots.

Not many cyberspecialists would forgo motherboards for mother love. Rao’s rationale: He wants to create a robot that sponges up knowledge baby-style. Job No. 1, Rao suspects, is getting a machine to look where an experimenter looks, just as a baby homes in on an adult’s visual perspective. Evidence suggests that an ability to follow another person’s gaze emerges toward the end of a child’s first year. This subtle skill enables infants to learn what words refer to, what adults are thinking and feeling, and when to imitate what others do.

A gaze-tracking robot would have one metallic foot in the door to the inner sanctum of social intelligence.

“This would be the first step in getting a robot to interact with a human partner in order to build an internal model of what it sees in the world,” Rao says. Eventually, he predicts, such a robot would use feedback from its mechanical body and from encounters with people to revise its knowledge. The bot would learn by trial and error.

Rao’s goal deviates sharply from that of traditional artificial intelligence research. For more than 50 years, AI researchers have sought to program computers with complex rules for achieving cognitive feats that a typical 3-year-old takes for granted, such as speaking a native language and recognizing familiar faces. So far, 3-year-olds have left disembodied data crunchers in the dust.

Traditional AI has had successes, including creating robots that assemble cars and perform other complex, rote tasks. In the last five years, though, an expanding number of computer scientists have embraced developmental psychology’s proposal that infants possess basic abilities, including gaze tracking, for engaging with others in order to learn. Social interactions (SN: 5/24/03, p. 330), combined with sensory experiences gained as a child explores the world (SN: 10/25/08, p. 24), set off a learning explosion, researchers hypothesize.

Developmental psychology’s advice for producing smarter robots is simple: Make them social learners. Give machines the capacity to move about, sense the world and engage with people.

That’s a tall order. But at least two dozen labs in various countries have launched projects that use different software strategies to produce robots that learn through social interactions. Some of the latest research appears in the October-November issue of the journal Neural Networks.

“A robot that learned could potentially be more adaptive than a robot that had to be programmed,” says psychologist Nora Newcombe of Temple University in Philadelphia. Possible duties for social robots run the gamut from live-in nurses for the elderly to team members on interplanetary space journeys.

Some psychologists suspect that people will come to view learning robots, or “growbots,” as a new kind of social partner — not human, but alive in some respects.

Eyeing contact

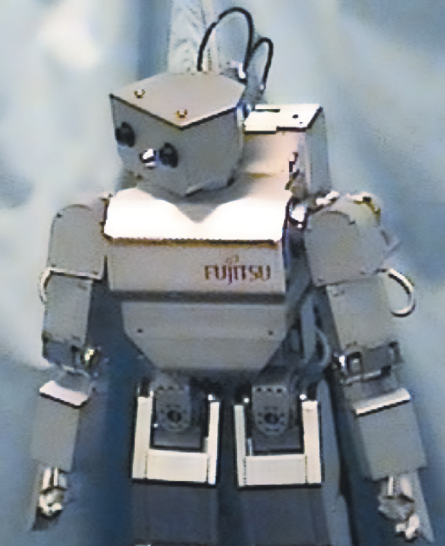

Rao hopes to transplant a babylike aptitude for gaze tracking into a 50-centimeter-tall Japanese-built robot with video cameras embedded in its black-rimmed eye openings. Software designed by Rao’s team animates the robot’s cube-shaped head, which swivels atop a chunky torso.

During trials, an instructor sits across a table from the robot and turns to look at one of several preselected objects, such as a ball. The robot tries to identify what has grabbed the person’s attention by estimating gaze angle and turning accordingly.

The robot’s motors keep track of camera positions to provide ongoing feedback about the instructor’s shifting gaze. Once focused in the right direction, the robot picks a few prime candidate objects based on features such as bright colors and sharp edges. Then the robot uses a speech synthesizer to say out loud what the instructor is looking at.

At first, the robot may mistakenly tag two overlapping items as one object or simply err in its choices. But as trials proceed, Rao’s creation builds a map of the instructor’s looking preferences that guides the machine’s choice. Thanks to this unprogrammed learning process, speed and accuracy climb dramatically.
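Rao’s software is not described in detail here, but the loop in the last few paragraphs can be sketched in a few lines of Python: estimate the direction of the instructor’s gaze, shortlist eye-catching objects lying that way, then let feedback reshape the choice. This is a toy under stated assumptions, not the team’s code. The `SceneObject` and `GazeFollower` names, the angular search window, the single salience score standing in for color and edge features, and the simple plus-or-minus preference update are all hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SceneObject:
    name: str
    angle: float     # direction from the robot, in degrees (hypothetical scene coordinates)
    salience: float  # single stand-in score for bright colors and sharp edges, 0..1


@dataclass
class GazeFollower:
    window: float = 20.0        # half-width (degrees) of the search cone around the gaze estimate
    learning_rate: float = 0.5  # size of each preference update
    preference: Dict[str, float] = field(default_factory=dict)  # learned looking preferences

    def candidates(self, gaze_angle: float, objects: List[SceneObject]) -> List[SceneObject]:
        # Keep only objects lying roughly in the direction the instructor appears to look.
        return [o for o in objects if abs(o.angle - gaze_angle) <= self.window]

    def guess(self, gaze_angle: float, objects: List[SceneObject]) -> Optional[SceneObject]:
        cands = self.candidates(gaze_angle, objects)
        if not cands:
            return None
        # Bottom-up salience plus a learned bias toward what this instructor tends to watch.
        return max(cands, key=lambda o: o.salience + self.preference.get(o.name, 0.0))

    def feedback(self, guessed: Optional[SceneObject], target: SceneObject) -> None:
        # Trial-and-error update: strengthen the true target, weaken a wrong guess.
        self.preference[target.name] = self.preference.get(target.name, 0.0) + self.learning_rate
        if guessed is not None and guessed.name != target.name:
            self.preference[guessed.name] = self.preference.get(guessed.name, 0.0) - self.learning_rate


def run_trials(robot: GazeFollower, objects: List[SceneObject], target: SceneObject,
               n: int, noise_deg: float, seed: int = 0) -> float:
    """Fraction of n trials in which the robot picks the instructor's target."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        # The robot's estimate of the instructor's gaze angle is noisy.
        estimate = target.angle + rng.gauss(0.0, noise_deg)
        guessed = robot.guess(estimate, objects)
        if guessed is not None and guessed.name == target.name:
            hits += 1
        robot.feedback(guessed, target)
    return hits / n


if __name__ == "__main__":
    scene = [SceneObject("ball", 10, 0.3), SceneObject("cup", 15, 0.9), SceneObject("block", -40, 0.5)]
    robot = GazeFollower()
    print(robot.guess(10, scene).name)  # cup: the eye-catching distractor wins at first
    robot.feedback(robot.guess(10, scene), scene[0])
    print(robot.guess(10, scene).name)  # ball: feedback has shifted the preference map
    print(run_trials(GazeFollower(), scene, scene[0], n=50, noise_deg=3.0))
```

In this toy scene, the brighter cup initially beats the ball the instructor is actually watching; a single corrective trial is enough to tip the learned preference map toward the ball.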

Rao wants to see whether a robot capable of focusing on, say, a set of blocks with an instructor can learn to imitate how the instructor arranges the blocks.

“We aspire to build a robot that learns from others and from its own errors, so it could potentially assist the sick, keep the elderly company or tutor a child in a second language,” Rao says.

Scientists face a massive challenge in trying to nudge robots into humanity’s messy social world, he acknowledges. A basic conversation about the weather or work depends on a raft of cultural and linguistic assumptions, as well as a capacity to imagine what someone else has experienced and how that person feels.

Only by integrating sensations from their own mechanical bodies will robots have a shot at understanding what it means for a chair to be soft and a person to be softhearted, Rao says.

Picky learner

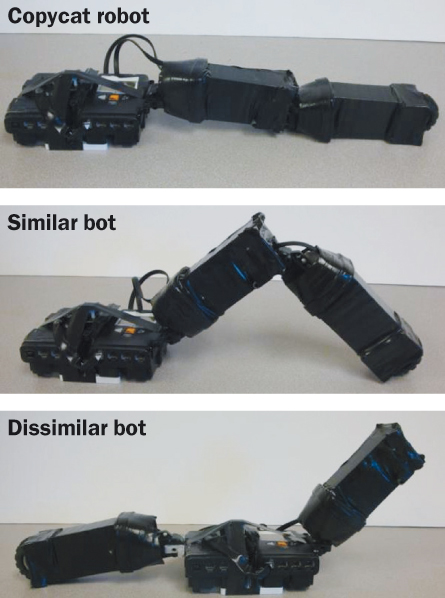

An early version of another robot undulates across Josh Bongard’s lab like a big metal inchworm. Its three flexing segments ably mirror the crawling style of a nearby robot with the same body structure, but it makes no attempt to copy a second crawler whose body parts are arranged differently.

The wormy bot has learned to be a selective copycat, says Bongard, a computer scientist at the University of Vermont in Burlington. This metal mimic constructs a three-dimensional map of itself and uses that map to assess the possibility of imitating other robots’ movements. Several labs have designed robots that learn to copy actions of designated teacher-bots. But Bongard’s tells good teachers from bad ones, a feat that has gone largely unexplored. A machine that can discern its own potential and limitations in learning from others has an advantage when operating in an uncertain, unruly social world.

“We want to see if a robot that can select appropriate teachers will show a humanlike learning curve from imitation to more abstract achievements, such as figuring out the meaning of a teacher’s simple gestures,” Bongard says.

His student robot consists of a main body, equipped with cameras that allow for binocular vision, and two limbs: the first attached to the main body and the second to the first limb. Rotating joints connect the body parts. Bongard programs the apparatus to know that it has three parts, how they’re positioned at any point in time and the properties of the parts, such as shape and size.

In response to randomly generated commands, the bot crawls about haphazardly and gradually creates an internal, visual map of its physical structure. It then observes crawling approaches taken by two other robots, one with the same body plan and one with two limbs connected to opposite sides of the main body.

Comparing its self-map with visual simulations that it creates of the two robotic teachers, the student robot imitates the crawling motions only of its structural twin, in trials done by Bongard’s team. Bongard plans to try out the mapping software in robots that have more humanlike bodies than his current crop of crawlers do.
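The article does not say how Bongard’s software compares its self-map with its models of the teachers. One simple stand-in, sketched below in Python with that caveat, reduces each body plan to a tree of attached parts and has the learner imitate only teachers whose tree matches its own. The `Part` class, the shape labels and the signature scheme are hypothetical, and the sketch skips the hard part (building the self-map from random crawling) by assuming the body plans are already known.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Part:
    shape: str  # hypothetical shape label, e.g. "box" or "cylinder"
    children: List["Part"] = field(default_factory=list)  # parts attached via rotating joints


def canonical(part: Part) -> str:
    """Order-independent signature of the body plan rooted at `part`."""
    # Sorting child signatures means the same structure yields the same string
    # regardless of the order in which attachments were observed.
    kids = sorted(canonical(c) for c in part.children)
    return f"{part.shape}({','.join(kids)})"


def can_imitate(self_model: Part, teacher_model: Part) -> bool:
    # A teacher is treated as useful only if its body plan matches the learner's own.
    return canonical(self_model) == canonical(teacher_model)


def choose_teachers(self_model: Part, teachers: Dict[str, Part]) -> List[str]:
    return [name for name, model in teachers.items() if can_imitate(self_model, model)]


if __name__ == "__main__":
    # Learner: main body -> first limb -> second limb (a three-part chain).
    learner = Part("box", [Part("cylinder", [Part("cylinder")])])
    teachers = {
        "twin": Part("box", [Part("cylinder", [Part("cylinder")])]),
        # Two limbs attached to opposite sides of the main body.
        "other": Part("box", [Part("cylinder"), Part("cylinder")]),
    }
    print(choose_teachers(learner, teachers))  # ['twin']
```

An exact structural match is the crudest possible test; a learner that also weighed part sizes or tolerated small differences would need a graded similarity score instead of a yes-or-no comparison.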

Playing games

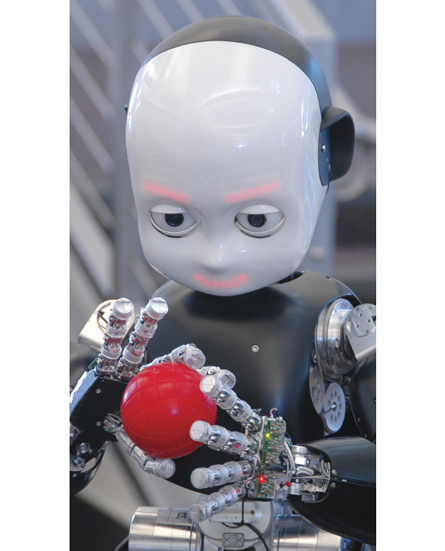

Another crawler, this time with a child’s face and dexterous, five-fingered hands, moves across a play space in computer scientist Giorgio Metta’s lab at the Italian Institute of Technology in Genoa. It’s iCub, a mechanical tyke edging toward a place where Sesame Street meets The Twilight Zone.

Twenty iCubs, each containing about 5,000 mechanical and electrical parts, now roam 11 labs in Europe and one in the United States. Researchers at these sites are collaborating to develop a robot that learns from experience how to make decisions, adapt to new surroundings and interact nimbly with people.

Each iCub stands about as tall as an average 3- to 4-year-old child, weighs 22 kilograms (almost 50 pounds) and has a movable head and eyes. When not crawling, iCub can sit up and grasp objects. It possesses the robotic equivalent of sight, hearing and touch and has a sense of balance. Flexible skin is in the works.

In line with recent evidence on early childhood learning, iCub’s software brain and mechanical body allow it to discover how its actions affect objects of different types. On an indoor playground devised by Metta’s team, iCub has gradually managed to grasp, tap and touch boxes and balls of different shapes and sizes. A robot that learns to play on its own and to make increasingly sophisticated predictions about the consequences of its actions will have readied itself for tracking a person’s gaze and playing imitation games, Metta hypothesizes.
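iCub’s actual learning software is far more elaborate than anything shown here, but the core idea of learning from play (try an action on an object, watch what happens, use the tally to predict next time) can be illustrated with a count-based table in Python. The objects and actions come from the paragraph above; the `world` function and its effects such as "rolls" and "slides" are invented toy physics, and the `AffordanceModel` class is a hypothetical name.

```python
import random
from collections import Counter, defaultdict
from typing import Optional

ACTIONS = ["grasp", "tap", "touch"]  # actions named in the article
OBJECTS = ["ball", "box"]            # object types named in the article


def world(obj: str, action: str) -> str:
    """Hypothetical toy physics standing in for the real play space."""
    if action == "tap":
        return "rolls" if obj == "ball" else "slides"
    if action == "grasp":
        return "lifted"
    return "stays"


class AffordanceModel:
    """Learns from its own play what each action tends to do to each kind of object."""

    def __init__(self) -> None:
        # (object, action) -> Counter of observed effects
        self.counts = defaultdict(Counter)

    def predict(self, obj: str, action: str) -> Optional[str]:
        seen = self.counts.get((obj, action))
        if not seen:
            return None  # never tried this combination, so no prediction yet
        return seen.most_common(1)[0][0]  # most frequently observed effect

    def update(self, obj: str, action: str, effect: str) -> None:
        self.counts[(obj, action)][effect] += 1


def play(model: AffordanceModel, n_trials: int, seed: int = 0) -> float:
    """Random exploration; returns the fraction of trials whose outcome was predicted."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_trials):
        obj, action = rng.choice(OBJECTS), rng.choice(ACTIONS)
        effect = world(obj, action)
        if model.predict(obj, action) == effect:
            correct += 1
        model.update(obj, action, effect)  # learn from the consequence of the action
    return correct / n_trials


if __name__ == "__main__":
    model = AffordanceModel()
    print(play(model, 200))
    print(model.predict("ball", "tap"))
```

In this deterministic toy, one observation of each object-action pair is enough for perfect predictions; real play produces noisy outcomes, which is why the sketch keeps a tally of counts rather than a single remembered result.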

Other iCubs have learned to follow the direction in which an experimenter’s face turns or, with practice, to imitate simple actions, such as rolling a ball.

Several labs plan to put iCub through more complex social paces, such as seeing if the robot can learn to recognize words for objects that an experimenter repeatedly points to and names.

“We’re still struggling to build a robot that has robust perception and can access a wide body of knowledge through social interactions,” Metta says.

More than machines

No one knows when scientists will reach that goal, but there’s a broad consensus that growbots such as iCub will become increasingly lifelike. Some psychologists monitoring advances in social robotics suspect that people will regard even early versions of growbots as having feelings, intelligence and social rights, heralding a new way of thinking about who — or what — counts as a social partner.

Generations that grow up interacting with increasingly lifelike learning bots will recast these machines as a new category of social being, predicts University of Washington psychologist Peter Kahn. Glimmers of that transformation in thinking appear when school-age kids spend time with a rudimentary social robot named Robovie.

Kahn’s team examined how 90 children, ages 9, 12 and 15, interacted with Robovie, which carries on basic conversations and plays simple games. Kids regularly displayed signs of personally connecting with the robot as they would with a flesh-and-blood friend, Kahn says.

One girl got upset when an experimenter told Robovie to stop playing a game with her and go into a closet. Eyeing the closet, the girl said, “It looks uncomfortable,” before bidding good-bye to Robovie. The robot voiced an objection as it moved toward the closet. “Robovie, you’re just a robot,” the experimenter said. Smacking her hand on a table, the girl exclaimed, “He’s not just a robot!”

Other youngsters casually exchanged greetings and social niceties and shared personal interests with Robovie. Some offered consolation after the machine made simple mistakes and attempted to fill in awkward silences when left alone with it.

It’s possible that people will mindlessly slip into treating social robots as thinking entities for brief periods while still regarding them as inanimate objects. Or people may pretend that growbots are alive while dealing with them, much as children play pretend games with stuffed animals.

Psychologist Jennifer Jipson of California Polytechnic State University in San Luis Obispo considers it more likely that people of all ages will invent a new way to think about lifelike robots. In a 2007 study, she and a colleague found that 52 preschoolers, ages 3 to 5, generally regarded a robotic dog that they had watched playing with an adult on videos as having thoughts, feelings and eyesight, although the kids did not see it as a biological creature that ate or slept.

The mechanical pooch — far more so than stuffed animals, toy cars or real animals — was described as having psychological but not biological traits.

“Children truly seem to reason about social robots in unique ways,” Jipson says.

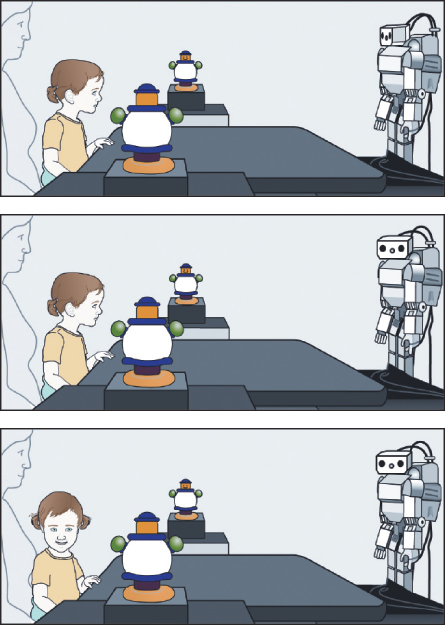

Even infants take a shine to social robots, contends University of Washington psychologist Andrew Meltzoff. By age 18 months, he says, most youngsters who watch a robot play a simple imitation game with an adult will later look where that robot looks. If the robot turns its head toward a clown toy, these kids assiduously follow the robot’s gaze, Meltzoff and his colleagues find.

In Meltzoff’s imitation game, an experimenter asks a small robot to play and the robot nods yes. The robot then touches its head and torso when asked and mimics the experimenter’s arm movements and a few other actions.

Infants showed much less interest in tracking the robot’s gaze after having watched it perform the same actions with an unmoving experimenter, or with an experimenter who didn’t react in a synchronized way typical of game playing.

Kids barely old enough to talk assume that robots engaged in a real-looking interaction know what they’re doing, Meltzoff proposes. “Just by eavesdropping, babies learn that a robot is a social agent,” he says. “This also suggests that infants, perhaps by their first birthdays, watch others across the dinner table and learn from social interactions that they observe.”

Meltzoff plans to see if 18-month-olds who eavesdrop on robot-experimenter games will later test the robot for signs of intelligence, say by holding up a toy and looking at it while peeking to see if the robot does the same.

If that scenario plays out, babies and bots will have joined forces in tracking each other’s gaze. A robot capable of naming a toy for an infant would then become a cybernetic teacher.

“We’d enter a brave new social world,” Meltzoff muses.

Becoming human(like)

A 2007 paper in the journal Interaction Studies sets out criteria for judging how “human” robots seem to a person interacting with them. Such criteria could be used to assess progress in the field of social robotics.

Autonomy: A humanlike robot would appear to have a level of independence, while still considering the wants and needs of others and the larger society.

Imitation: A successful robot would appear to actively imitate people, not just blindly mimicking behaviors but projecting its understanding of itself onto others so as to come to understand them too.

Intrinsic moral value: People would see a truly humanlike robot as having some intrinsic value, along with rights and desires, and care for it as such.

Moral accountability: A humanlike robot would appear to have responsibility for its behavior, and thus would be deserving of blame when it acted unfairly or caused harm.

Privacy: People can feel that their privacy has been violated when another person uncovers personal information, even if that information is never shared or used. A humanlike robot that looks through e-mail or tracks a person’s comings and goings would engender similar feelings of privacy invasion.

Reciprocity: A humanlike robot would engage in reciprocal relationships in which it adjusts its expectations and desires. Such a robot would, for example, bargain at a yard sale or compromise to pick a movie when there is disagreement.

Conventionality: When dealing with other people, even children can distinguish between actions that break convention, such as calling teachers by first names, and those that are morally wrong. People would make such distinctions for a humanlike robot too.

Creativity: People would partner and interact with a truly humanlike robot in enterprises requiring skill and imagination.

Authenticity of relation: People would not think about “using” a robot the way they “use” a computer, but would instead think about engaging with a partner that can experience and react.