To calculate the movements of a mere 600 atoms in an explosion-produced mixture of
hydrogen fluoride and water vapor for 1 trillionth of a second, scientists have
had to tie up the most powerful supercomputer available for about 15 days. With
the unveiling last week of the computer dubbed ASCI White, they have a machine
that can perform the task much more quickly.

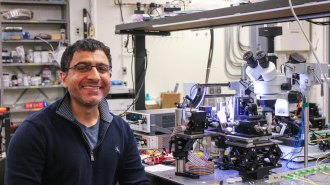

Housed at the Lawrence Livermore (Calif.) National Laboratory (LLNL), ASCI White
is a product of the National Nuclear Security Administration’s Accelerated
Strategic Computing Initiative (ASCI). The initiative is part of an effort to
assess the safety and reliability of nuclear weapons without underground testing
(SN: 10/19/96, p. 254). ASCI White represents just one of several new efforts to
increase the computer power available to scientists.

Built by IBM from 8,192 of the company’s commercially available processors, the
$110 million computer can do 12.3 trillion operations per second, more than four
times the peak rate previously attainable (SN: 11/12/98, p. 383). The machine
represents the latest step in the drive to reach 100 trillion calculations per
second by 2005.
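As a rough back-of-the-envelope illustration of what these figures imply, the sketch below scales the 15-day run by the quoted speedup. It rests on two assumptions that the article does not state: that the 15-day calculation ran on a machine at the previous peak rate, and that running time shrinks in direct proportion to peak rate, which real simulation codes rarely achieve. The variable and function names are illustrative only.

```python
# Figures quoted in the article.
ASCI_WHITE_TOPS = 12.3        # trillion operations per second
MIN_SPEEDUP = 4.0             # "more than four times" the earlier peak rate
OLD_RUNTIME_DAYS = 15.0       # time for the 600-atom simulation on the older machine
TARGET_TOPS_2005 = 100.0      # initiative's goal for 2005


def scaled_runtime_days(old_days: float, speedup: float) -> float:
    """Estimate the new running time, ASSUMING time scales inversely with peak rate."""
    return old_days / speedup


# "More than four times" means the earlier peak was below this value.
previous_peak_upper_bound = ASCI_WHITE_TOPS / MIN_SPEEDUP

# Under the idealized scaling assumption, the run would take at most this long.
new_runtime_days = scaled_runtime_days(OLD_RUNTIME_DAYS, MIN_SPEEDUP)

# Factor still separating ASCI White from the 2005 goal.
remaining_factor = TARGET_TOPS_2005 / ASCI_WHITE_TOPS

print(f"Earlier peak rate: under {previous_peak_upper_bound:.2f} trillion ops/s")
print(f"Idealized runtime on ASCI White: about {new_runtime_days:.2f} days or less")
print(f"Speedup still needed for the 2005 goal: about {remaining_factor:.1f}x")
```

On these assumptions, the simulation would drop from about 15 days to under 4 days, and the 2005 goal would still require a machine roughly eight times as fast as ASCI White.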

To bring comparable computational power to a wider pool of researchers, the
National Science Foundation earlier this month announced a $53 million program to
connect existing supercomputers at four sites into a single computing resource.
Called the Distributed Terascale Facility, or TeraGrid, the system will give
researchers access to large data archives, remote instruments, sophisticated
visualization tools, and other resources via high-speed networks.

“The TeraGrid will be a far more powerful and flexible scientific tool than any
single supercomputing system,” says Fran Berman of the San Diego Supercomputer
Center, one of TeraGrid’s participants. By April 2003, researchers should be able
to tap into a combined system running at more than 11.6 trillion operations per
second.

In yet another supercomputing initiative, the Department of Energy last week
awarded a total of $57 million in research grants as part of its program to
develop the software and hardware needed to use advanced computers effectively for
scientific research.

The Fermi National Accelerator Laboratory in Batavia, Ill., and its partners, for
example, expect to receive $3.84 million over 3 years to develop systems that
would allow physicists to receive up-to-the-minute data from particle accelerator
experiments anywhere in the world. Current experiments already generate far more
data than the World Wide Web can handle. Another aim of the new Fermilab program
is to develop software that will streamline accelerator operation and design.