How matter’s hidden complexity unleashed the power of nuclear physics

In the last century, physicists have revealed a complex world of fundamental particles

Calder Hall, in Cumbria, England, was the first full-scale commercial nuclear power plant (shown in 1962).

Bettmann/Getty Images

Matter is a lush tapestry, woven from a complex assortment of threads. Diverse subatomic particles weave together to fabricate the universe we inhabit. But a century ago, people believed that matter was so simple that it could be constructed with just two types of subatomic fibers — electrons and protons. That vision of matter was a no-nonsense plaid instead of an ornate brocade.

Physicists of the 1920s thought they had a solid grasp on what made up matter. They knew that atoms contained electrons surrounding a positively charged nucleus. And they knew that each nucleus contained a number of protons, positively charged particles identified in 1919. Combinations of those two particles made up all of the matter in the universe, it was thought. That went for everything that ever was or might be, across the vast, unexplored cosmos and at home on Earth.

The scheme was appealingly tidy, but it swept under the rug a variety of hints that all was not well in physics. Two discoveries in one revolutionary year, 1932, forced physicists to peek underneath the carpet. First, the discovery of the neutron unlocked new ways to peer into the hearts of atoms and even split them in two. Then came news of the positron, identical to the electron but with the opposite charge. Its discovery foreshadowed many more surprises to come. Additional particle discoveries ushered in a new framework for the fundamental bits of matter, now known as the standard model.

That “annus mirabilis” — miraculous year — also set physicists’ sights firmly on the workings of atoms’ hearts, how they decay, transform and react. Discoveries there would send scientists careening toward a most devastating technology: nuclear weapons. The atomic bomb cemented the importance of science — and science journalism — in the public eye, says nuclear historian Alex Wellerstein of the Stevens Institute of Technology in Hoboken, N.J. “The atomic bomb becomes the ultimate proof that … indeed this is world-changing stuff.”

Two-particle appeal

Physicists of the 1920s embraced a particular type of conservatism. Embedded deep in their psyches was a reluctance to declare the existence of new particles. Researchers stuck to the status quo of matter composed solely of electrons and protons — an idea dubbed the “two-particle paradigm” that held until about 1930. In that time period, says historian of science Helge Kragh of the University of Copenhagen, “I’m quite sure that not a single mainstream physicist came up with the idea that there might exist more than two particles.” The utter simplicity of two particles explaining everything in nature’s bounty was so appealing to physicists’ sensibilities that they found the idea difficult to let go of.

The paradigm held back theoretical descriptions of the neutron and the positron. “To propose the existence of other particles was widely regarded as reckless and contrary to the spirit of Occam’s razor,” science biographer Graham Farmelo wrote in Contemporary Physics in 2010.

Still, during the early 20th century, physicists were investigating a few puzzles of matter that would, after some hesitation, inevitably lead to new particles. These included unanswered questions about the identities and origins of energetic particles called cosmic rays, and why chemical elements occur in different varieties called isotopes, which have similar chemical properties but varying masses.


The neutron arrives

New Zealand–born British physicist Ernest Rutherford stopped just short of positing a fundamentally new particle in 1920. He realized that neutral particles in the nucleus could explain the existence of isotopes. Such particles came to be known as “neutrons.” But rather than proposing that neutrons were fundamentally new, he thought they were composed of protons combined in close proximity with electrons to make neutral particles. He was correct about the role of the neutron, but wrong about its identity.

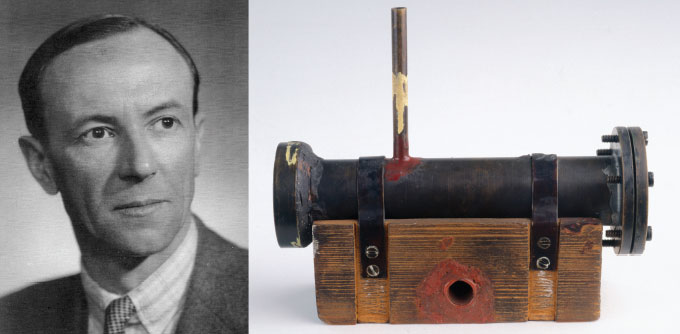

Rutherford’s idea was convincing, British physicist James Chadwick recounted in a 1969 interview: “The only question was how the devil could one get evidence for it.” The neutron’s lack of electric charge made it a particularly wily target. In between work on other projects, Chadwick began hunting for the particles at the University of Cambridge’s Cavendish Laboratory, then led by Rutherford.

Chadwick found his evidence in 1932. He reported that mysterious radiation emitted when beryllium was bombarded with the nuclei of helium atoms could be explained by a particle with no charge and with a mass similar to the proton’s. In other words, a neutron. Chadwick didn’t foresee the important role his discovery would play. “I am afraid neutrons will not be of any use to anyone,” he told the New York Times shortly after his discovery.

Physicists grappled with the neutron’s identity over the following years before accepting it as an entirely new particle, rather than the amalgamation that Rutherford had suggested. For one, a proton-electron mash-up conflicted with the young theory of quantum mechanics, which characterizes physics on small scales. The Heisenberg uncertainty principle — which states that if the location of an object is well-known, its momentum cannot be — suggests that an electron confined within a nucleus would have an unreasonably large energy.
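
A rough, order-of-magnitude version of that argument can be sketched in a few lines. The sketch assumes the electron is confined to a region about 10 femtometers across (roughly a nuclear diameter). That size, and the constants below, are standard values that the article does not give. It also treats the minimum momentum spread allowed by Δx·Δp ≥ ħ/2 as the particle's typical momentum, which is only good to within a factor of a few. A proton squeezed into the same space is included for comparison.

```python
import math

HBAR_C = 197.327            # ħc in MeV·fm (standard value, assumed here)
ELECTRON_MASS_MEV = 0.511   # electron rest energy, MeV
PROTON_MASS_MEV = 938.272   # proton rest energy, MeV

def confined_kinetic_energy(delta_x_fm: float, rest_energy_mev: float) -> float:
    """Order-of-magnitude kinetic energy (MeV) of a particle confined to delta_x_fm."""
    # Uncertainty principle: Δp ≥ ħ / (2Δx); use the minimum as the typical momentum (as p·c).
    pc = HBAR_C / (2 * delta_x_fm)
    # Relativistic total energy E = sqrt((pc)^2 + (mc^2)^2); subtract rest energy for kinetic.
    total = math.sqrt(pc ** 2 + rest_energy_mev ** 2)
    return total - rest_energy_mev

NUCLEAR_SIZE_FM = 10.0  # assumed confinement size, roughly a nuclear diameter

electron_ke = confined_kinetic_energy(NUCLEAR_SIZE_FM, ELECTRON_MASS_MEV)
proton_ke = confined_kinetic_energy(NUCLEAR_SIZE_FM, PROTON_MASS_MEV)
print(f"electron: {electron_ke:.2f} MeV, proton: {proton_ke:.3f} MeV")
```

Run as is, it gives roughly 9.4 MeV for the electron, about 18 times its own rest energy, but only about 0.05 MeV for the proton. Confinement in a nucleus is costly for a light particle in particular, which is why a nuclear electron looked so implausible.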

And certain nuclei’s spins, a quantum mechanical measure of angular momentum, likewise suggested that the neutron was a full-fledged particle, as did improved measurements of the particle’s mass.
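
The spin argument can be illustrated with nitrogen-14, a standard textbook case that the article does not name. Protons, neutrons and electrons each carry spin 1/2. An odd number of spin-1/2 particles always combines to a half-integer total spin, and an even number always gives an integer spin. Nitrogen-14's measured nuclear spin is 1, an integer. The helper below is a hypothetical illustration that simply counts constituents under each model of the nucleus.

```python
def has_half_integer_spin(num_spin_half_particles: int) -> bool:
    """An odd count of spin-1/2 constituents gives half-integer total spin."""
    return num_spin_half_particles % 2 == 1

Z, A = 7, 14  # nitrogen-14: atomic number (nuclear charge) 7, mass number 14

# Proton-electron model: A protons plus (A - Z) electrons to cancel the extra charge
proton_electron_count = A + (A - Z)   # 14 + 7 = 21 particles
# Proton-neutron model: Z protons plus (A - Z) neutrons
proton_neutron_count = Z + (A - Z)    # 7 + 7 = 14 particles

print("proton-electron model predicts half-integer spin:",
      has_half_integer_spin(proton_electron_count))
print("proton-neutron model predicts half-integer spin:",
      has_half_integer_spin(proton_neutron_count))
```

Only the proton-neutron count is even, so only that model is consistent with the observed integer spin.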

Positron perplexity

Physicists also resisted the positron, until it became difficult to ignore.

The positron’s 1932 detection had been foreshadowed by the work of British theoretical physicist Paul Dirac. But it took some floundering about before physicists realized the meaning of his work. In 1928, Dirac formulated an equation that combined quantum mechanics with Albert Einstein’s 1905 special theory of relativity, which describes physics close to the speed of light. Now known simply as the Dirac equation, the expression explained the behavior of electrons in a way that satisfied both theories.

But the equation suggested something odd: the existence of another type of particle, one with the opposite electric charge. At first, Dirac and other physicists clung to the idea that this charged particle might be the proton. But this other particle should have the same mass as the electron, and protons are almost 2,000 times as heavy as electrons. In 1931, Dirac proposed a new particle, with the same mass as the electron but with opposite charge.

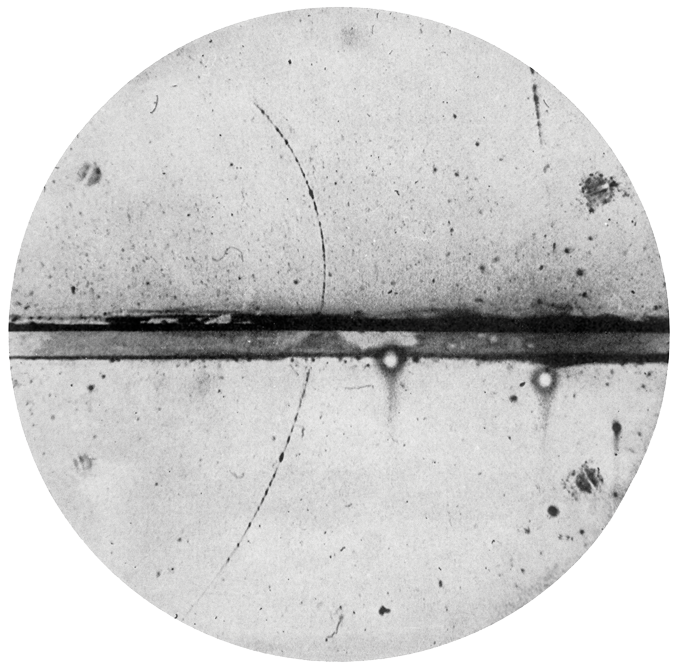

Meanwhile, American physicist Carl Anderson of Caltech, independent of Dirac’s work, was using a device called a cloud chamber to study cosmic rays, energetic particles originating in space. Cosmic rays, discovered in 1912, fascinated scientists, who didn’t fully understand what the particles were or how they were produced.

Within Anderson’s chamber, liquid droplets condensed along the paths of energetic charged particles, a result of the particles ionizing gas molecules as they zipped along. In 1932, the experiments revealed positively charged particles with masses comparable to an electron’s. Soon, the connection to Dirac’s theory became clear.

Science News Letter, the predecessor of Science News, had a hand in naming the newfound particle. Editor Watson Davis proposed “positron” in a telegram to Anderson, who had independently considered the moniker, according to a 1933 Science News Letter article (SN: 2/25/33, p. 115). In a 1966 interview, Anderson recounted considering Davis’ idea during a game of bridge, and finally going along with it. He later regretted the choice, saying in the interview, “I think that’s a very poor name.”

The discovery of the positron, the antimatter partner of the electron, marked the advent of antimatter research. Antimatter’s existence still seems baffling today. Every object we can see and touch is made of matter, making antimatter seem downright extraneous. Antimatter’s lack of relevance to daily life — and the term’s liberal use in Star Trek — means that many nonscientists still envision it as the stuff of science fiction. But even a banana sitting on a counter emits antimatter, periodically spitting out positrons in radioactive decays of the potassium within.

Physicists would go on to discover many other antiparticles, including the antiproton in 1955. Each has the same mass as its matter partner, but its electric charge and certain other properties are reversed. The subject still keeps physicists up at night. The Big Bang should have produced equal amounts of matter and antimatter, so researchers today are studying why antimatter became so rare.

In the 1930s, antimatter was such a leap that Dirac’s hesitation to propose the positron was understandable. Not only would the positron break the two-particle paradigm, but it would also suggest that electrons had mirror images with no apparent role in making up atoms. When asked, decades later, why he had not predicted the positron after he first formulated his equation, Dirac replied, “pure cowardice.”

But by the mid-1930s, the two-particle paradigm was out. Physicists’ understanding had advanced, and their austere vision of matter had to be jettisoned.

Unleashing the atom’s power

Radioactive decay hints that atoms hold stores of energy locked within, ripe for the taking. Although radioactivity was discovered in 1896, that energy long remained an untapped resource. The neutron’s discovery in 1932 would be key to unlocking that energy, for better and for worse.

The neutron’s discovery opened up scientists’ understanding of the nucleus, giving them new abilities to split atoms in two or transform them into other elements. Developing that nuclear know-how led to useful technologies, like nuclear power, but also to devastating nuclear weapons.

Just a year after the neutron was found, Hungarian-born physicist Leo Szilard envisioned using neutrons to split atoms and create a bomb. “[I]t suddenly occurred to me that if we could find an element which is split by neutrons and which would emit two neutrons when it absorbed one neutron, such an element, if assembled in sufficiently large mass, could sustain a nuclear chain reaction, liberate energy on an industrial scale, and construct atomic bombs,” he later recalled. It was a fledgling idea, but prescient.

Because neutrons lack electric charge, they can penetrate atoms’ hearts. In 1934, Italian physicist Enrico Fermi and colleagues started bombarding dozens of different elements with neutrons, producing a variety of new, radioactive isotopes. Each isotope of a particular element contains a different number of neutrons in its nucleus, with the result that some isotopes may be radioactive while others are stable. Fermi had been inspired by another striking discovery of the time. In 1934, French chemists Frédéric and Irène Joliot-Curie reported the first artificially created radioactive isotopes, produced by bombarding elements with helium nuclei, called alpha particles. Now, Fermi was doing something similar, but with a more penetrating probe.

There were a few scientific missteps on the way to understanding the results of such experiments. A major goal was to produce brand-new elements, those beyond the last known element on the periodic table at that time: uranium. After blasting uranium with neutrons, Fermi and colleagues reported evidence of success. But that conclusion would turn out to be incorrect.

German chemist Ida Noddack had an inkling that all was not right with Fermi’s interpretation. She came close to the correct explanation for his experiments in a 1934 paper, writing: “When heavy nuclei are bombarded by neutrons, it is conceivable that the nucleus breaks up into several large fragments.” But Noddack didn’t follow up on the idea. “She didn’t provide any kind of supporting calculation and nobody took it with much seriousness,” says physicist Bruce Cameron Reed of Alma College in Michigan.

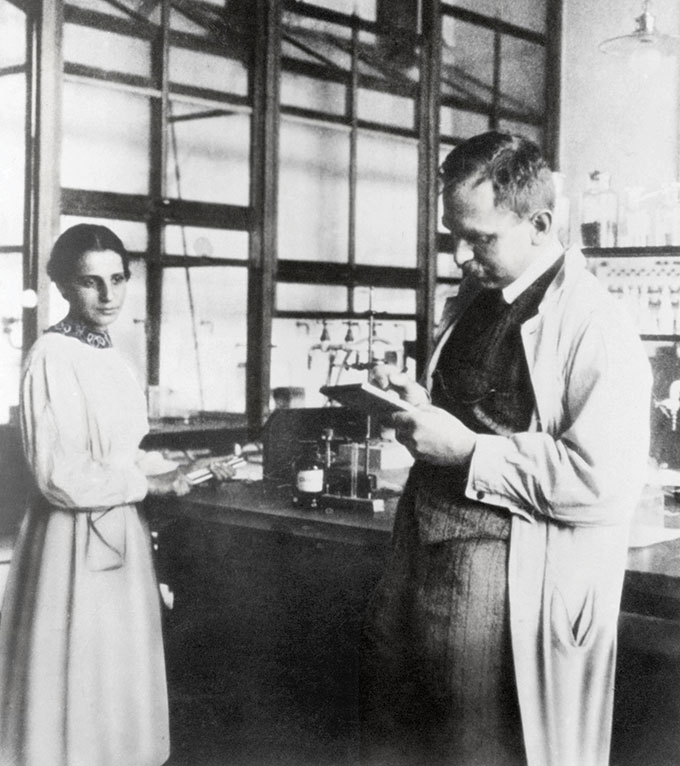

In Germany, physicist Lise Meitner and chemist Otto Hahn had also begun bombarding uranium with neutrons. But Meitner, an Austrian of Jewish heritage in increasingly hostile Nazi Germany, was forced to flee in July 1938. She had an hour and a half to pack her suitcases. Hahn and a third member of the team, chemist Fritz Strassmann, continued the work, corresponding from afar with Meitner, who had landed in Sweden. The results of the experiments were puzzling at first, but when Hahn and Strassmann reported to Meitner that barium, a much lighter element than uranium, was a product of the reaction, it became clear what was happening. The nucleus was splitting.

Meitner and her nephew, physicist Otto Frisch, collaborated to explain the phenomenon, a process the pair would call “fission.” Hahn received the 1944 Nobel Prize in chemistry for the discovery of fission, but Meitner never won a Nobel, in a decision now widely considered unjust. Meitner was nominated for the prize — sometimes in physics, other times in chemistry — a whopping 48 times, most after the discovery of fission.

“Her peers in the physics community recognized that she was part of the discovery,” says chemist Ruth Lewin Sime of Sacramento City College in California, who has written extensively about Meitner. “That included just about anyone who was anyone.”

Word of the discovery soon spread, and on January 26, 1939, renowned Danish physicist Niels Bohr publicly announced at a scientific meeting that fission had been achieved. The potential implications were immediately apparent: Fission could unleash the energy stored in atomic nuclei, potentially resulting in a bomb. A Science News Letter story describing the announcement attempted to dispel any concerns the discovery might raise. The article, titled “Atomic energy released,” reported that scientists “are fearful lest the public become worried about a ‘revolution’ in civilization as a result of their researches,” such as “the suggested possibility that the atomic energy may be used as some super-explosive, or as a military weapon” (SN: 2/11/39, p. 86). But downplaying the catastrophic implications didn’t prevent them from coming to pass.

A ball of fire

The question of whether a bomb could be created rested, once again, on neutrons. For fission to ignite an explosion, it would be necessary to set off a chain reaction. That means each fission would release additional neutrons, which could then go on to induce more fissions, and so on. Experiments quickly revealed that enough neutrons were released to make such a chain reaction feasible.
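
The chain-reaction condition can be sketched as a generation-by-generation count. The sketch assumes an idealized multiplication factor k: the average number of neutrons from each fission that go on to cause another fission. That name and the simplification are introduced here, not taken from the article, and the model ignores timing, geometry and any losses not already folded into k.

```python
def fissions_by_generation(k: float, generations: int, initial: float = 1.0) -> list[float]:
    """Idealized chain reaction: each fission triggers k further fissions on average."""
    counts = [initial]
    for _ in range(generations):
        counts.append(counts[-1] * k)
    return counts

for k in (0.9, 1.0, 2.0):
    final = fissions_by_generation(k, generations=10)[-1]
    # k < 1 dies out, k = 1 holds steady, k > 1 grows geometrically
    print(f"k = {k}: {final:.3g} fissions in generation 10")
```

With k = 2, the count doubles every generation, so 80 generations give 2 to the 80th power, about 1.2 × 10^24 fissions. A controlled, self-sustaining reaction corresponds instead to holding k near 1, so the count neither dies out nor runs away.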

In October 1939, soon after Germany invaded Poland at the start of World War II, an ominous letter from Albert Einstein reached President Franklin Roosevelt. Composed at the urging of Szilard, by then at Columbia University, the letter warned, “it is conceivable … that extremely powerful bombs of a new type may thus be constructed.” American researchers were not alone in their interest in the topic: German scientists, the letter noted, were also on the case.

Roosevelt responded by setting up a committee to investigate. That step would be the first toward the U.S. effort to build an atomic bomb, the Manhattan Project.

On December 2, 1942, Fermi, who by then had immigrated to the United States, and 48 colleagues achieved the first controlled, self-sustaining nuclear chain reaction in an experiment with a pile of uranium and graphite at the University of Chicago. Science News Letter would later call it “an event ranking with man’s first prehistoric lighting of a fire.” While the physicists celebrated their success, the possibility of an atomic bomb was closer than ever. “I thought this day would go down as a black day in the history of mankind,” Szilard recalled telling Fermi.

The experiment was a key step in the Manhattan Project. And on July 16, 1945, at about 5:30 a.m., scientists led by J. Robert Oppenheimer detonated the first atomic bomb, in the New Mexico desert — the Trinity test.

It was a striking sight, as physicist Isidor Isaac Rabi recalled in his 1970 book, Science: The Center of Culture. “Suddenly, there was an enormous flash of light, the brightest light I have ever seen or that I think anyone has ever seen. It blasted; it pounced; it bored its way right through you. It was a vision which was seen with more than the eye. It was seen to last forever. You would wish it would stop; although it lasted about two seconds. Finally it was over, diminishing, and we looked toward the place where the bomb had been; there was an enormous ball of fire which grew and grew and it rolled as it grew; it went up into the air, in yellow flashes and into scarlet and green. It looked menacing. It seemed to come toward one. A new thing had just been born; a new control; a new understanding of man, which man had acquired over nature.”

Physicist Kenneth Bainbridge put it more succinctly: “Now we are all sons of bitches,” he said to Oppenheimer in the moments after the test.

The bomb’s construction was motivated by the fear that Germany would obtain it first. But the Germans weren’t even close to producing a bomb when they surrendered in May 1945. Instead, the United States’ bombs would be used on Japan. On August 6, 1945, the United States dropped an atomic bomb on Hiroshima, followed by another on August 9 on Nagasaki. In response, Japan surrendered. More than 100,000 people died as a result of the two attacks, and perhaps as many as 210,000.

“I saw a blinding bluish-white flash from the window. I remember having the sensation of floating in the air,” survivor Setsuko Thurlow recalled in a speech given upon the awarding of the 2017 Nobel Peace Prize to the International Campaign to Abolish Nuclear Weapons. She was 13 years old when the bomb hit Hiroshima. “Thus, with one bomb my beloved city was obliterated. Most of its residents were civilians who were incinerated, vaporized, carbonized.”

Nuclear anxieties

Humankind entered a new era, with new dangers to the survival of civilization. “With nuclear physics, you have something that within 10 years … goes from being this arcane academic research area … to something that bursts on the world stage and completely changes the relationship between science and society,” Reed says.

In 1949, the Soviet Union set off its first nuclear weapon, kicking off the decades-long nuclear rivalry with the United States that would define the Cold War. And then came a bigger, more dangerous weapon: the hydrogen bomb. Whereas atomic bombs are based on nuclear fission, H-bombs harness nuclear fusion, the melding of atomic nuclei, in conjunction with fission, resulting in much larger blasts. The first H-bomb, detonated by the United States in 1952, was hundreds of times as powerful as the bomb dropped on Hiroshima. Less than a year later, the Soviet Union also tested an H-bomb. Scientists serving on an advisory committee to the U.S. Atomic Energy Commission had earlier called the H-bomb a “weapon of genocide” when recommending against developing the technology.
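
The size comparison between the two bombs is a ratio of explosive yields. A quick check, assuming commonly cited figures that the article does not give: about 10.4 megatons (10,400 kilotons) for the 1952 U.S. test and about 15 kilotons, uncertain by roughly 20 percent either way, for the Hiroshima bomb.

```python
FIRST_H_BOMB_KT = 10_400  # assumed yield of the 1952 U.S. test, kilotons of TNT
HIROSHIMA_KT = 15.0       # assumed central estimate for the Hiroshima bomb, kilotons
UNCERTAINTY = 0.20        # assumed +/-20% spread on the Hiroshima estimate

def yield_ratio(larger_kt: float, smaller_kt: float) -> float:
    """How many times larger one explosive yield is than another."""
    return larger_kt / smaller_kt

# A larger Hiroshima yield gives a smaller ratio, and vice versa
low = yield_ratio(FIRST_H_BOMB_KT, HIROSHIMA_KT * (1 + UNCERTAINTY))
high = yield_ratio(FIRST_H_BOMB_KT, HIROSHIMA_KT * (1 - UNCERTAINTY))
print(f"roughly {low:.0f} to {high:.0f} times as powerful")
```

Under these assumptions the ratio lands at roughly 580 to 870, i.e. several hundred times the Hiroshima blast.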

Fears of the devastation that would result from an all-out nuclear war have fed repeated attempts to rein in nuclear weapons stockpiles and tests. Since the signing of the Comprehensive Nuclear Test Ban Treaty in 1996, the United States, Russia and many other countries have maintained a testing moratorium. However, North Korea tested a nuclear weapon as recently as 2017.

Still, the dangers of nuclear weapons were accompanied by a promising new technology: nuclear power.

In 1948, scientists first demonstrated that a nuclear reactor could harness fission to produce electricity. The X-10 Graphite Reactor at Oak Ridge National Laboratory in Tennessee generated steam that powered an engine that lit up a small Christmas lightbulb. In 1951, Experimental Breeder Reactor-I at Idaho National Laboratory near Idaho Falls produced the first usable amount of electricity from a nuclear reactor. The world’s first commercial nuclear power plants began to switch on in the mid- and late 1950s.

But nuclear disasters dampened enthusiasm for the technology, including the 1979 Three Mile Island accident in Pennsylvania and the 1986 Chernobyl disaster in Ukraine, then part of the Soviet Union. In 2011, the disaster at the Fukushima Daiichi power plant in Japan rekindled society’s smoldering nuclear anxieties. But today, in an era when the effects of climate change are becoming alarming, nuclear power is appealing because it emits no greenhouse gases directly.

And humankind’s mastery over matter is not yet complete. For decades, scientists have been dreaming of another type of nuclear power, based on fusion, the process that powers the sun. Unlike fission, fusion power wouldn’t produce long-lived nuclear waste. But progress has been slow. The ITER experiment has been in planning since the 1980s. Once construction is complete in southern France, ITER aims to produce more energy from fusion than is used to heat its plasma. Whether it succeeds may help determine the energy outlook for future centuries.
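
The goal of getting more energy out than is put in is usually expressed as a gain factor Q: fusion power produced divided by the power used to heat the plasma. Q greater than 1 means the plasma yields more than it is fed. The sketch assumes ITER's publicly stated design target of about 500 megawatts of fusion power from 50 megawatts of heating, figures the article does not give. Note that Q counts only plasma heating, not the electricity needed to run the whole facility.

```python
def fusion_gain(fusion_power_mw: float, heating_power_mw: float) -> float:
    """Plasma gain factor Q: fusion power out divided by heating power in."""
    return fusion_power_mw / heating_power_mw

# Assumed ITER design target: ~500 MW of fusion power from ~50 MW of plasma heating
q = fusion_gain(500.0, 50.0)
print(f"Q = {q:.0f}; more out than in: {q > 1}")
```

A device at Q = 1 would merely break even on plasma heating; the design target asks for a tenfold return.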

From today’s perspective, the breakneck pace of progress in nuclear and particle physics in less than a century can seem unbelievable. The neutron and positron were both found in laboratories that are small in comparison with today’s, and each discovery was attributed to a single physicist, relatively soon after the particles had been proposed. Those discoveries kicked off frantic developments that seemed to roll in one after another.

Now, finding a new element, discovering a new elementary particle or creating a new type of nuclear reactor can take decades, international collaborations of thousands of scientists, and huge, costly experiments.

As physicists uncover the tricks to understanding and controlling nature, it seems, the next level of secrets becomes increasingly difficult to expose.