STORM TECH Forecasters hope to get a better handle on when storms will strike and where snow will fall.

Kelly Delay/Flickr (CC BY-NC 2.0)

In late January, a massive snowstorm drifted toward New York City. Meteorologists warned that a historic blizzard could soon cripple the Big Apple, potentially burying the city under 60 centimeters of snow overnight. Governor Andrew Cuomo took drastic action, declaring a state of emergency for several counties and shutting down the city that never sleeps. For the first time in its 110-year history, the New York City Subway closed for snow.

As the hours wore on, however, the overhyped snowfall never materialized. The storm hit hard to the east but just grazed the city with a manageable 25 centimeters of fresh powder. When Cuomo took grief for overreacting, many meteorologists sympathized. A meteorological misjudgment of just a few minutes or miles can mislead officials, trigger poor decisions and bring on public jeers. But underestimating a storm’s impact may put people in peril. The answer is to make weather forecasts more precise, both in time and space.

Unfortunately, that’s exceedingly difficult. Despite years of improvements in predictions, weather gurus may never be able to deliver accurate daily forecasts further ahead than they can now. So they’re switching gears. Instead of pushing predictions further into the future, they are harnessing technology to move weather forecasting into the hyperlocal.

By incorporating clever computing, statistical wizardry and even smartphones, future forecasts may offer personalized predictions for areas as narrow as 10 Manhattan city blocks over timescales of just a few minutes. The work could one day provide earlier warnings for potentially deadly storms and even resolve personal conundrums such as whether to grab an umbrella for a run to the coffee shop or to wait a few minutes because the rain will soon let up.

“We’re entering a promising era for small-scale forecasting,” says meteorologist Lans Rothfusz of the National Oceanic and Atmospheric Administration’s National Severe Storms Laboratory in Norman, Okla. “The sky’s the limit, no pun intended.”

Atmospheric chaos

Weather is so complex that to predict it with any precision, forecasters need robust information about what the weather is doing right now. A worldwide battalion of planes, ships, weather stations, balloons, satellites and radar networks streams details of nearly every facet of the atmosphere to meteorologists. “In the meteorology business, we’ll take all the data we can get,” Rothfusz says.

Measurements such as atmospheric pressure, temperature, wind speed and humidity feed into data assimilation software that compiles a 3-D reconstruction of the atmosphere. Where no measurements exist, the software uses nearby data to intelligently fill in the gaps. Once the reconstruction is complete, the computer essentially presses fast-forward. Numerical calculations simulate the future behavior of the atmosphere to predict weather conditions hours or days in advance. For instance, a rising pocket of moist, warm air might be expected to condense into a storm cloud and be blown from an area with high atmospheric pressure to a low-pressure region.
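The gap-filling step can be pictured with a toy example. The sketch below uses inverse-distance weighting, a simple interpolation method chosen here purely for illustration; operational data assimilation software relies on far more sophisticated statistics. The function name `idw_estimate`, the station coordinates and the pressure values are all hypothetical.

```python
import math

def idw_estimate(stations, target, power=2.0):
    """Estimate a value at `target` (x, y) from (x, y, value) stations.

    Nearby stations get more weight than distant ones (inverse-distance weighting).
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for x, y, value in stations:
        distance = math.hypot(x - target[0], y - target[1])
        if distance == 0.0:
            return value  # the target sits exactly on a station
        weight = 1.0 / distance ** power
        weighted_sum += weight * value
        weight_total += weight
    return weighted_sum / weight_total

# Made-up pressure readings (hectopascals) at three made-up locations (kilometers).
stations = [(0.0, 0.0, 1012.0), (10.0, 0.0, 1008.0), (0.0, 10.0, 1010.0)]
estimate = idw_estimate(stations, (5.0, 0.0))
```

Here the unmeasured point lies midway between the first two stations, so their readings count equally and the more distant third station contributes less.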

The goal is to provide a useful, or “skilled,” prediction. A forecast is said to have skill if its prediction is more accurate than the historical average for that area on that date. Even using cutting-edge supercomputers and a global network of weather stations, meteorologists can provide a skilled forecast only three to 10 days into the future. After that, the computer typically becomes a worse predictor than the historical average.
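One common way to put a number on skill is to compare a forecast's error with the error of the historical average. The sketch below uses a mean-squared-error skill score, which is an assumption made for illustration; the article does not say which measure forecasters use. The temperatures are invented.

```python
def mean_squared_error(predicted, observed):
    """Average squared difference between predictions and what actually happened."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed)

def skill_score(forecast, climatology, observed):
    """Return 1 - MSE(forecast) / MSE(climatology).

    A positive score means the forecast beat the historical average;
    zero or below means it did no better.
    """
    reference_error = mean_squared_error(climatology, observed)
    if reference_error == 0.0:
        raise ValueError("climatology matches observations exactly; skill is undefined")
    return 1.0 - mean_squared_error(forecast, observed) / reference_error

# Invented temperatures (degrees Celsius) for three days.
observed = [12.0, 15.0, 9.0]
climatology = [10.0, 10.0, 10.0]   # historical average for those dates
forecast = [11.0, 14.0, 10.0]
score = skill_score(forecast, climatology, observed)
```

In this made-up case the forecast misses by one degree each day while the historical average misses by more, so the score comes out positive and the forecast counts as skilled.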

Many meteorologists believe this limitation won’t be significantly improved on anytime soon. The problem, they say, stems from proverbial seabirds (or butterflies, depending on the story’s telling). In 1961, mathematician and meteorologist Edward Lorenz discovered that small changes in the initial conditions of a weather system, when played out over a long enough period of time, can yield wildly different outcomes. In a presentation two years later, Lorenz quoted a fellow meteorologist who had remarked that if this chaos theory were true, then “one flap of a seagull’s wings would be enough to alter the course of the weather forever.”
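The effect is easy to reproduce with the three-variable system of equations Lorenz published in 1963, a toy model rather than a real weather simulation. The sketch below runs that system twice from starting points that differ by one part in 100 million and records how far apart the two runs drift. The step size, run length and starting values are arbitrary choices for illustration.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Rates of change for the Lorenz (1963) system with its standard parameters."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))
    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def max_separation(perturbation=1e-8, steps=4000, dt=0.01):
    """Largest distance reached between two runs that start almost identically."""
    run_a = (1.0, 1.0, 1.0)
    run_b = (1.0 + perturbation, 1.0, 1.0)  # the "seagull's wing flap"
    largest = 0.0
    for _ in range(steps):
        run_a = rk4_step(run_a, dt)
        run_b = rk4_step(run_b, dt)
        gap = sum((a - b) ** 2 for a, b in zip(run_a, run_b)) ** 0.5
        largest = max(largest, gap)
    return largest
```

Calling `max_separation()` shows the two runs ending up in completely different states despite the minuscule initial difference, while `max_separation(perturbation=0.0)` keeps them identical.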

More than five decades later, those flappy birds still cause headaches for weather forecasters. The further ahead meteorologists look into the future, the more their predictions become cluttered by small uncertainties that compound over time, such as imprecise measurements, small-scale atmospheric phenomena and faraway events.

So for now, the most likely place to make improvements is in fine-tuning short-term predictions, before chaos has a chance to kick in. This line of research, called precision forecasting, aims to provide speedier and more detailed visions of the near future. But first researchers need enough computer muscle to pull it off.

Crunching the numbers

Even if forecasters had omnipotent knowledge of meteorological conditions and mathematical equations that perfectly mimicked the atmosphere’s behavior, it would do no good if the computers that crunch the numbers weren’t up to snuff.

“Scientists are generally not limited by their imaginations, but by the computing power that’s available,” says computer scientist Mark Govett of NOAA’s Earth System Research Laboratory.

For a forecast system to work in the real world, it needs to be fast. The computer should be able to work through the data assimilation and weather simulations in only one one-hundredth of the time the forecast looks ahead. If a meteorologist wants to forecast the weather an hour ahead, for example, the computations should be finished in less than 36 seconds. This time limitation means meteorologists often have to simplify their simulations, trading accuracy for speed. “If it takes two days to forecast tomorrow’s weather, that’s no use to anyone,” says computational engineer Si Liu of the Texas Advanced Computing Center in Austin.

To optimize computation times, meteorologists split the weather simulations into smaller chunks. Since January, NOAA’s Global Forecast System has been breaking down the entire planet’s weather into a mesh of 13-kilometer-wide sections. NOAA’s Rapid Refresh model recently began using an even tighter grid, each cell just 3 kilometers wide, around North America. Govett says researchers plan to extend that resolution globally, breaking Earth’s surface into roughly 53 million individual pieces, each topped with 100 or more slices of atmosphere stacked roughly 12 kilometers up into the sky. In total, that’s more than 5.3 billion individual subsimulations that the system needs to handle.
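The arithmetic behind those figures can be checked in a few lines. The sketch below multiplies the article's column count by its layer count, then makes a rough independent estimate by dividing Earth's surface area by the area of a 3-kilometer square. That second estimate assumes uniform square cells and a mean Earth radius of 6,371 kilometers; the article does not describe the actual grid geometry, so some difference from the quoted 53 million is expected.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; an assumption for this estimate

def total_cells(columns, layers):
    """Number of grid boxes when every surface column carries `layers` slices."""
    return columns * layers

def columns_for_square_cells(cell_width_km):
    """Rough column count if Earth's surface were tiled with equal square cells."""
    surface_area = 4.0 * math.pi * EARTH_RADIUS_KM ** 2
    return surface_area / cell_width_km ** 2

quoted_total = total_cells(53_000_000, 100)       # figures quoted in the article
rough_columns = columns_for_square_cells(3.0)     # independent back-of-envelope check
```

The product reproduces the 5.3 billion figure, and the square-cell estimate lands in the same range of tens of millions of columns.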

That scale poses a major challenge, Liu said in January at the American Meteorological Society meeting in Phoenix. Weather simulations analyze each grid point separately, but changes in one section can affect its neighbors as well. This means that while different computer processors can each handle their own section, they have to quickly communicate information back and forth with other processors. Crowdsourced computing, which has proven useful for other big data challenges such as the extraterrestrial-hunting SETI@home project, is impractical for weather forecasting because too much time is lost transmitting between individual computers.
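The need for processors to swap information can be seen in a stripped-down example. In the sketch below, a one-dimensional row of grid cells is updated by averaging each cell with its neighbors, first as a whole and then in separate chunks. Each chunk has to borrow one value from the chunk on either side, the kind of boundary swap often called a halo exchange. Everything here runs in a single process, and the function names are hypothetical; in a real system each chunk would sit on its own processor and the borrowed values would travel over a network.

```python
def smooth(values, left, right):
    """One update step: each cell becomes the mean of itself and its two neighbors.

    `left` and `right` are the values just outside this stretch of cells.
    """
    padded = [left] + list(values) + [right]
    return [
        (padded[i - 1] + padded[i] + padded[i + 1]) / 3.0
        for i in range(1, len(padded) - 1)
    ]

def step_whole(grid):
    """Update the full grid at once; edge cells reuse their own value as the missing neighbor."""
    return smooth(grid, grid[0], grid[-1])

def step_in_chunks(grid, chunk_size):
    """Update the grid chunk by chunk, as separate processors would."""
    chunks = [grid[i:i + chunk_size] for i in range(0, len(grid), chunk_size)]
    updated = []
    for index, chunk in enumerate(chunks):
        # Borrow one boundary value from each neighboring chunk.
        left = chunks[index - 1][-1] if index > 0 else chunk[0]
        right = chunks[index + 1][0] if index < len(chunks) - 1 else chunk[-1]
        updated.extend(smooth(chunk, left, right))
    return updated

grid = [float(i * i % 7) for i in range(10)]  # arbitrary starting values
```

The chunked update matches the whole-grid update only because of the borrowed boundary values, and that borrowing has to happen at every time step, which is why slow links between computers are so costly.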

Instead, computer scientists now increasingly rely on chips called graphics processing units, or GPUs, that are specifically built for parallel processing. Traditional processors, CPUs, run their calculations in a set order as quickly as possible. GPUs, on the other hand, save time by working on many calculations at once rather than in strict sequence. The result is that GPUs can power through computationally taxing weather calculations in as little as one 20th of the time it takes their CPU counterparts. This efficiency allows forecasters to incorporate more grid points into their simulations and churn out higher-resolution weather forecasts in the same amount of time.

For their next speed boost, computer scientists are looking to video games. GPUs have improved astronomically during recent years thanks to their popularity in gaming consoles and high-end computers, Govett says. “We’re looking around at what other people are using and asking, ‘Hey, can we use that?’ ”

The upgrade in computational power will be the backbone of precision forecasting, Govett predicts. In January, the National Weather Service announced that it will acquire two new GPU-boostable supercomputers and put them into operation by October. These new machines will be 10 times as fast as their predecessors, each capable of powering through 2,500 trillion operations per second.

“The excuse that we don’t have the computer power will be gone,” says atmospheric scientist Clifford Mass of the University of Washington in Seattle. “A renaissance in U.S. weather forecasting is possible, but now we need to improve other areas of our numerical weather predictions.”

There’s an app for that

Weather models are only as good as the data put in, and large swaths of the United States lack reliable weather monitoring. An imperfect picture of current conditions makes small-scale forecasts impossible. Mass was searching for something to help fill in these weather data gaps. He needed a data deluge, and he found the answer in his pocket.

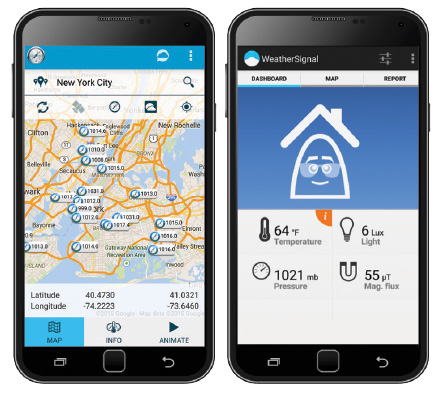

Mass realized that today’s smartphones are weather-sensing gizmos. Although typically designed for health and other monitoring uses, some of the latest smartphones contain electronic sensors that track atmospheric pressure, temperature, humidity and even ultraviolet light. Just one problem, though: Unlike weather stations, smartphones move around. Of all the sensors, only the barometer, which measures air pressure, isn’t affected by the constant movement from pocket to palm. Still, that’s good news for meteorologists, Mass says, because “pressure is the most valuable surface observation you can get.”

Air pressure differences drive the winds that carry cool, water-laden clouds across the sky. A sudden drop in pressure can signal that a brewing storm lurks nearby. Smartphones measure these pressure changes using a special chip that contains a miniature vacuum chamber covered by a thin metal skin. As the difference in air pressure between the two sides of the metal layer changes, the metal will bow, changing the flow of electric current passing through it.

Here’s where crowdsourcing makes a difference. Thanks to apps such as PressureNet and WeatherSignal, Mass now receives around 110,000 pressure readings each hour from Android smartphones across the country. That’s only a tiny fraction of the data out there, says PressureNet’s Jacob Sheehy. “There are probably 500 million or more Android devices with barometers in the world right now,” he says. “That’s a lot more than any other weather network has of anything by, well, about 500 million.”

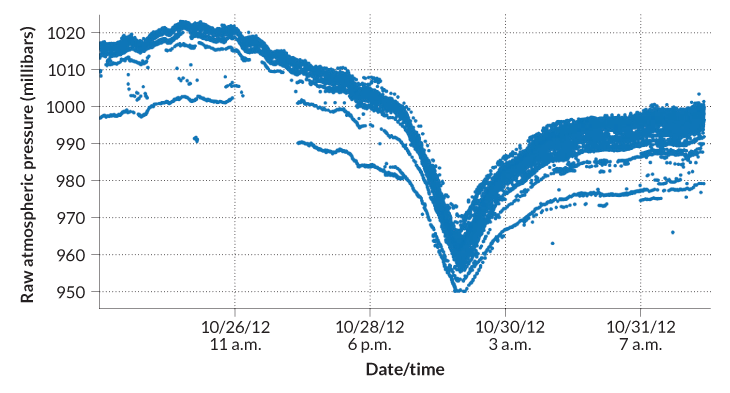

Sheehy recalls being “speechless for a few minutes” in October 2012 as thousands of incoming smartphone pressure readings plummeted in sequence along the East Coast as Hurricane Sandy rolled overhead. “It was the first time that I saw this whole idea was going to work,” he says. “Despite not having been designed for it, smartphones can provide surprisingly good weather measurements.”

While monitoring hurricanes showcases the network’s potential, ultimately the biggest boon will be for tracking more focused storms such as the rotating thunderstorms called supercells that spawn tornadoes. This atmospheric churning is too small to be spotted by long-range weather monitoring techniques such as radar until the storm has already developed. In a paper published in the Bulletin of the American Meteorological Society in September, Mass proposed that a dense network of smartphone barometers could spot the localized twisting of air that marks the early stages of a tornado-spawning supercell. With those data, forecasters could predict supercell formation. Mass is talking with Google about getting access to the colossal number of pressure readings already gathered by its Google Maps app, which Mass says would give him all the data he’d need to start tracking supercell formation in rural America.

“I’ve had a few simulations that look favorable,” he says, “but I don’t know yet what having a hundred million or more readings would do.”

Tracking twisters

Foretelling the formation of swirling supercells and their tornado offspring will probably be one of the most important benefits of precision forecasting. Twisters often strike with little warning, flinging cars and razing buildings. In one devastating example, during just four days in April 2011, U.S. tornadoes killed 321 people and caused more than $10 billion in damage.

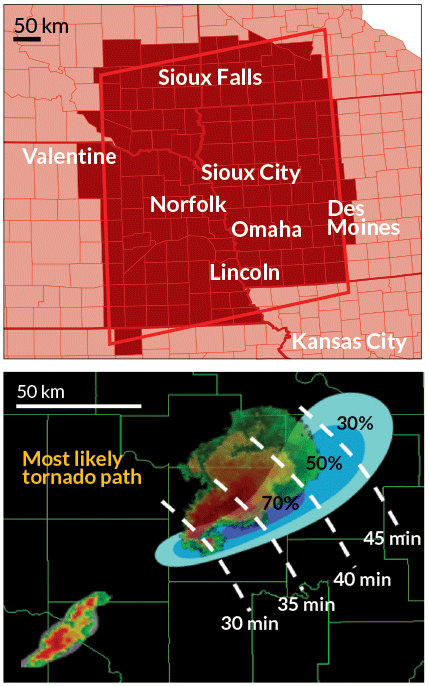

Today’s forecasters issue tornado warnings on average 14 minutes before a twister touches down. While this is enough time for a typical family to take shelter, crowded facilities such as hospitals, assisted-living centers and schools often require more warning to get everyone to safety. To buy people more time, researchers at NOAA’s National Severe Storms Laboratory are developing a system called Warn-on-Forecast. It collects up-to-the-minute weather data and uses supercomputers to run gridded air circulation simulations every hour, each time looking only a few hours ahead. The ultimate goal, the researchers say, is to provide tornado warnings an hour or more in advance.

“You could potentially release warnings of tornadoes and other severe storms before the storms even develop,” says atmospheric scientist Paul Markowski of Penn State.

However, tornado formation is finicky, says NOAA’s Rothfusz, who works on the project. Measurements of the atmosphere always have some degree of uncertainty, and the difference between a tornado forming or not can often be lost in the margin of error. So instead of running a weather simulation once to see the future, the Warn-on-Forecast researchers run an ensemble forecast of simulations, each with a slightly tweaked starting state. If, for example, 25 out of 50 simulations form a tornado, the estimated tornado risk is 50 percent.
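The ensemble idea reduces to a simple count. The sketch below nudges a starting value at random, runs a stand-in "simulation" for each nudged copy, and reports the share of runs that produce a tornado. The one-number storm model, its threshold and the size of the random nudges are invented for illustration and bear no relation to the physics inside Warn-on-Forecast.

```python
import random

def ensemble_probability(simulate, base_state, members=50, spread=0.5, seed=0):
    """Fraction of ensemble members in which `simulate` reports a tornado.

    Each member starts from `base_state` plus a small random tweak,
    standing in for measurement uncertainty.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same answer
    hits = 0
    for _ in range(members):
        perturbed_state = base_state + rng.gauss(0.0, spread)
        if simulate(perturbed_state):
            hits += 1
    return hits / members

def toy_storm_model(instability):
    """Stand-in for a full simulation: a tornado forms above a made-up threshold."""
    return instability > 1.0

risk = ensemble_probability(toy_storm_model, base_state=1.0)
```

With 50 members, 25 tornado-producing runs would give a risk of 0.5, the 50 percent figure in the example above.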

While the current system produces a single alert for enormous areas that often encompass entire states, Warn-on-Forecast will calculate fine-scale risk assessments for county-sized zones using a grid of cells, each 250 meters wide.

Since the researchers envisioned Warn-on-Forecast in a 2009 paper in the Bulletin of the American Meteorological Society, they have seen significantly improved warning times for tornadoes in tests, Rothfusz says. The NOAA researchers have been testing the system using historical events, such as the massive April 27, 2011, outbreak that spawned 122 twisters across the southeastern United States. In those simulations, the team has foreseen supercell formation as much as one to two hours in advance. “There was a lot of skepticism initially, but as we got into it … we started to see some tremendous benefit,” Rothfusz says. “This looks feasible.”

As Warn-on-Forecast draws closer to implementation, a growing concern is how the public will use those extra 40-plus minutes between warning and tornado, says Kim Klockow, a University Corporation for Atmospheric Research meteorologist and behavioral scientist in Silver Spring, Md. In a 2009 survey, when respondents were asked what they would do with the longer lead times offered by Warn-on-Forecast, their answers deviated from the typical approach of sheltering immediately. Instead, respondents said they would try to flee the area.

Bad idea, Klockow says. Cars offer no protection from even weak tornadoes. No matter how advanced the warning, sheltering in a sturdy building remains the safest option, she says. Researchers will need to figure out how best to communicate the risk projections to the public.

As technology transforms how meteorologists predict the weather, both the extreme and the everyday, citizens and city officials should get a better handle on when storms will strike and where snow will fall.

“Over the last few years, we’ve seen incremental improvements in the precision of weather forecasting,” Mass says. “But that’s not what we want. We’re looking for a forecasting revolution, and it seems like one might not be too far off.”

This article appeared in the May 2, 2015, issue of Science News under the headline, “The Future of Forecasting.”