When SMART-1, the European Space Agency’s first mission to the moon, launched in September 2003, astronomers hailed it as the testing ground for a revolutionary and efficient solar-electric-propulsion technology. While this technological leap absorbed the attention of scientists and the news media, a second, quieter revolution aboard SMART-1 went almost unheralded. Only a small band of mathematicians and engineers appreciated the quantum leap forward for digital communications. Incorporated into SMART-1’s computers was a system for encoding data transmissions that, in a precise mathematical sense, is practically perfect.

Space engineers have long grappled with the problem of how to reliably transmit data, such as pictures and scientific measurements, from space probes back to Earth. How can messages travel hundreds of millions of miles without the data becoming hopelessly garbled by noise? In a less extreme situation, as any cell phone user can attest, noise is also an issue for communication systems on Earth.

Over the past half-century, mathematicians and computer scientists have come up with clever approaches to the noise problem. They’ve devised codes that intentionally incorporate redundancy into a message, so that even if noise corrupts some portions, the recipient can usually figure it out. Called error-correcting codes, these underlie a host of systems for digital communication and data storage, including cell phones, the Internet, and compact disks. Space missions use this technique to send messages from transmitters that are often no more powerful than a dim light bulb.

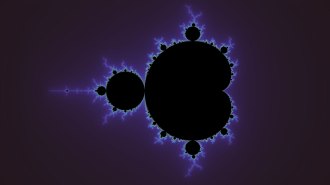

Yet coding theorists have been aware that their codes fall far short of what can, in theory, be achieved. In 1948, mathematician Claude Shannon, then at Bell Telephone Laboratories in Murray Hill, N.J., published a landmark paper in which he set a specific goal for coding theorists. Shannon showed that at any given noise level, there is an upper limit on the ratio of the information to the redundancy required for accurate transmission. As with the speed of light in physics, this limit is unattainable, but—in theory at least—highly efficient codes can come arbitrarily close.

The trouble was that no one could figure out how to construct the superefficient codes that Shannon’s theory had predicted. By the early 1990s, state-of-the-art codes were typically getting information across at only about half the rate that Shannon’s law said was possible.

Then, in the mid-1990s, an earthquake shook the coding-theory landscape. A pair of French engineers—outsiders to the world of coding theory—astonished the insiders with their invention of what they called turbo codes, which come within a hair’s breadth of Shannon’s limit.

Later in the decade, coding theorists realized that a long-forgotten method called low-density parity-check (LDPC) coding could edge even closer to the limit.

Turbo codes and LDPC codes are now coming into play. In addition to being aboard the SMART-1 mission, turbo-code technology is on its way to Mercury on NASA’s Messenger mission, launched last year. In the past couple of years, turbo codes have also found their way into millions of mobile phones, enabling users to send audio and video clips and to surf the Internet.

LDPC codes, meanwhile, have become the new standard for digital-satellite television. Hundreds of research groups are studying potential applications of the two kinds of codes at universities and industry giants including Sony, Motorola, Qualcomm, and Samsung.

“In the lab, we’re there” at the Shannon limit, says Robert McEliece, a coding theorist at the California Institute of Technology in Pasadena. “Slowly, the word is getting out, and the technology is being perfected.”

The closing of the gap between state-of-the-art codes and the Shannon limit could save space agencies tens of millions of dollars on every mission because they could use lighter data transmitters with smaller batteries and antennas. For cell phone and other wireless technologies, the new codes could reduce the number of dead spots in the world and lower the cost of service.

Noisy communication

Most engineering fields start with inventions; researchers then gradually figure out the limits of what can be achieved. Coding theory experienced the reverse. The field got launched by Shannon’s result, which used theoretical insights to place an absolute limit on what the clever coders of the future could achieve.

Shannon considered how much redundancy must be added to a message so that the information in it can survive a noisy transmission. One way to fight noise is simply to send the same message several times. For example, if a friend can’t hear what you’re saying at a noisy party, you might repeat yourself. However, it might be more effective to rephrase your message. Coding theorists look for effective ways to rephrase the information in a message to get it across using the smallest number of bits—zeros and ones.

For example, to protect a message against a single transmission error, one solution is to send the message three times. However, that requires three times as many bits as the original did. In 1950, Richard Hamming of Bell Laboratories came up with a much more efficient approach that adds only three redundant bits for every four bits of the original message.

In Hamming’s approach, each of the three added bits says something about a particular combination of bits in the original message. Given a four-bit message, bit number 5 is chosen in such a way that bits 1, 2, 3, and 5 will have an even number of ones. Bit 6 is chosen to make bits 1, 2, 4, and 6 have an even number of ones, and bit 7 is chosen to make bits 2, 3, 4, and 7 have an even number of ones. Chase through the possibilities, and you’ll find that if noise flips one of the seven transmitted bits from 0 to 1, or vice versa, even-odd checks reveal which bit is wrong.

Over the decades, coding theorists have come up with even more-efficient schemes and ones that can cope with a higher error rate. Shannon’s law, however, says that there is a limit to how good these codes can get—whether the communication channel is a fiber-optic cable or a noisy room. Try to send more information and less redundancy than the channel can support, and the message won’t survive the channel’s noise.

“Shannon showed that even with infinite computing power, infinitely bright engineers, and no constraints on your budget, there is this absolute limit on what you can achieve,” McEliece says.

Shannon’s law has a bright side, however. He proved that almost every code whose efficiency rate is below the Shannon limit would permit the recipient to recover the original message almost perfectly.

But there’s a catch. Shannon didn’t provide a practical recipe for constructing reliable codes that come close to the Shannon limit. In the decades that followed, researchers tried but failed to develop such codes. Coding theorists became fond of saying that almost all codes are good—except the ones they know about.

Turbo boost

In 1993 at the IEEE International Conference on Communications in Geneva, a pair of French engineers made an incredible claim. They reported that they had come up with a coding method that could provide almost perfect reliability at a rate breathtakingly close to the Shannon limit. These “turbo” codes, the researchers asserted, could transmit data about twice as fast as other codes do or, alternatively, could use only half as much transmitting power to achieve the same rates as other codes do.

“Their simulation curves claimed unbelievable performance, way beyond what was thought possible,” McEliece says.

Many experts at the conference scoffed at the claims and didn’t bother attending the engineers’ talk. “They said we must have made an error in our simulations,” recalls Claude Berrou of the French National School of Telecommunications in Brest, who invented turbo codes together with his colleague the late Alain Glavieux.

Within a year, however, coding theorists were reproducing the French engineers’ results. “Suddenly, the whole world of coding theory started twinkling and scintillating with the news,” says Michael Tanner, a coding theorist at the University of Illinois at Chicago. “Turbo codes changed the whole problem.”

“The thing that blew everyone away about turbo codes is not just that they get so close to Shannon capacity but that they’re so easy. How could we have overlooked them?” McEliece says. “I think it was a case of fools rushing in where angels fear to tread. Berrou and Glavieux didn’t know the problem was supposed to be hard, so they managed to find a new way to go about it.”

Turbo codes use a divide-and-conquer approach in which each of two decoders gets a different encoded version of the original message and the decoders then collaborate. Berrou likens the code to a crossword puzzle in which one would-be solver receives the “across” clues and another receives the “down” clues.

In the first round, each decoder uses its own clues to guess the bits of the original message, keeping track of how confident it is about each guess. Next, the two decoders compare notes and each updates its guesses and confidence levels. In the analogy with crosswords, if you guessed that an across word was wolverine, and a friend told you that a down clue also put a w in the first square, your confidence in your guess would grow. However, if you learned that the down clue put, say, a p in the first square, you would feel less confident about wolverine or possibly switch your guess to another word.

After the two decoders update their proposed solutions, they compare notes again and then update their solutions again. They continue this process until they reach a consensus about the original message. This typically takes 4 to 10 passes of information back and forth.

Rather than give two decoders partial information, it might seem more efficient to send all the clues to a single decoder, in closer analogy with how crosswords are usually solved. In principle, this would result in more-accurate decoding of the message than the two turbo decoders could produce. In practice, however, as the number of clues increases, the number of computations needed in the decoding process grows exponentially, so sending all the clues to one decoder would result in too slow a decoding process.

In hindsight, McEliece says, coding theory before 1993 was overly focused on the optimal, but inefficient, decoding that a single decoder offers.

“The genius of turbo codes is that Berrou and Glavieux said, ‘Let’s not talk about the best possible decision but about a good-enough decision,'” McEliece says. “That’s a much less complex problem, and they got it to work.”

Parity regained

Although turbo codes quickly became established as the fastest codes around, they soon had to share that title. Within a few years, coding theorists realized that a slightly modified version of an old, obscure code could sometimes produce even better results.

In 1958, Robert Gallager, then a Ph.D. student at the Massachusetts Institute of Technology (MIT), created a class of codes that, like Hamming’s codes, add redundant bits that permit decoders to do even-odd checks to guess which bits of the original message have been corrupted by noise. As with turbo codes, Gallager’s LDPC codes use cross talk between decoders to gradually establish confidence about the likely bits of the original message. However, in contrast to turbo codes, which use just two decoders, LDPC codes use a separate decoder for each bit of the message, creating a giant rumor mill. That requires thousands, or even tens of thousands, of decoders.

“It’s like turbo codes balkanized to the nth degree,” McEliece says.

Gallager’s simulations showed that LDPC codes come very close to the Shannon limit. However, his method was impractical at the time because it presented a computational problem far too complex for the computers of the day.

“The very biggest computer at MIT in the early sixties had an amount of computer power only about equivalent to what you get in a cell phone today,” recalls Gallager, now an MIT professor. Because of this limitation, most coding theorists regarded LDPC codes as intriguing but quixotic—and promptly forgot about them.

LDPC codes are “a piece of 21st–century coding that happened to fall in the 20th century,” says David Forney, a coding theorist at MIT. In the 1960s, he worked at Codex Corp. in Newton, Mass., a Motorola subsidiary that held the patents to LDPC codes. “I’m embarrassed to say we never once seriously considered using those patents,” he admits.

In the mid–1990s, however, with the coding-theory world buzzing about turbo codes’ cross talk approach, explorations of several research groups independently led them back to Gallager’s work.

“Gallager’s paper was almost clairvoyant,” McEliece says. “Every important concept about LDPC codes is buried in there, except one.”

That one concept is irregularity, in which there are more even-odd checks on certain bits of the original message than on others. For a crossword puzzle, this would be like having a particular letter of the grid be defined not just by across and down clues but also by a diagonal clue.

The extra clues of irregular LDPC codes permit the decoders to guess the value of certain bits with an extremely high confidence level. The decoders can then use these high-confidence bits to become more confident about the other bits of the message, creating a “ripple effect,” says Amin Shokrollahi of the Federal Polytechnic School of Lausanne in Switzerland, one of the coding theorists who pioneered the concept of irregularity. With this innovation, LDPC codes sometimes edge out turbo codes in the race toward the Shannon limit.

The ideas behind turbo codes and LDPC codes have rendered much of the preceding 50 years of coding theory obsolete, says David MacKay of Cambridge University in England, one of the coding theorists who rediscovered LDPC codes. “Future generations won’t have to learn any of the stuff that has been the standard in textbooks,” he says.

To some coding theorists, turbo codes and LDPC codes represent the end of coding theory. In the lab, they’ve reached within 5 percent of the maximum efficiency, and commercial systems are within 10 percent, says McEliece.

“We’re close enough to the Shannon limit that from now on, the improvements will only be incremental,” says Thomas Richardson, a coding theorist at Flarion Technologies in Bedminster, N.J. “This was probably the last big leap in coding.”

In 1,000 years, McEliece whimsically suggests, the Encyclopedia Galactica will sweep past most of the past half-century of coding theory to say simply, “Claude Shannon formulated the notion of channel capacity in 1948 A.D. Within several decades, mathematicians and engineers had devised practical ways to communicate reliably at data rates within 1 percent of the Shannon limit….”