Computer algorithms are better than people at forecasting which criminals are likely to commit future crimes — at least in some situations, a new study finds.

Computer algorithms can outperform people at predicting which criminals will get arrested again, a new study finds.

Risk-assessment algorithms that forecast future crimes often help judges and parole boards decide who stays behind bars (SN: 9/6/17). But these systems have come under fire for exhibiting racial biases (SN: 3/8/17), and some research has given reason to doubt that algorithms are any better at predicting arrests than humans are. One 2018 study that pitted human volunteers against the risk-assessment tool COMPAS found that people predicted criminal reoffense about as well as the software (SN: 2/20/18).

The new set of experiments confirms that humans predict repeat offenders about as well as algorithms when people are given immediate feedback on the accuracy of their predictions and when they are shown limited information about each criminal. But people are worse than computers when they don’t get feedback, or if they are shown more detailed criminal profiles.

In reality, judges and parole boards don’t get instant feedback either, and they usually have a lot of information to work with in making their decisions. So the study’s findings suggest that, under realistic prediction conditions, algorithms outmatch people at forecasting recidivism, researchers report online February 14 in Science Advances.

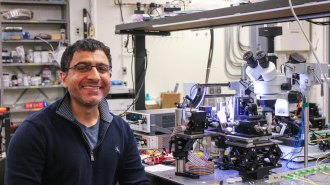

Computational social scientist Sharad Goel of Stanford University and colleagues started by mimicking the setup of the 2018 study. Online volunteers read short descriptions of 50 criminals — including features like sex, age and number of past arrests — and guessed whether each person was likely to be arrested for another crime within two years. After each round, volunteers were told whether they guessed correctly. As seen in 2018, people rivaled COMPAS’s performance: accurate about 65 percent of the time.

But in a slightly different version of this human vs. computer competition, Goel’s team found that COMPAS had an edge over people who did not receive feedback. In this experiment, participants had to predict which of 50 criminals would be arrested for violent crimes, rather than just any crime.

With feedback, humans performed this task with 83 percent accuracy, close to COMPAS’s 89 percent. But without feedback, human accuracy fell to about 60 percent. That’s because people overestimated the risk of criminals committing violent crimes, despite being told that only 11 percent of the criminals in the dataset fell into this camp, the researchers say. The study did not investigate whether factors such as racial or economic biases contributed to that trend.
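To see why overestimating risk costs accuracy when violent rearrests are rare, consider a minimal sketch in Python. It assumes a hypothetical pool of 100 people, 11 of whom are rearrested for a violent crime, to match the 11 percent figure above. The function name and both sets of guesses are illustrative and are not drawn from the study.

```python
def accuracy(predictions, outcomes):
    """Fraction of yes/no predictions that match the actual outcomes."""
    correct = sum(p == o for p, o in zip(predictions, outcomes))
    return correct / len(outcomes)

# Hypothetical pool: 11 of 100 people are rearrested for a violent crime.
outcomes = [True] * 11 + [False] * 89

# A guesser who never predicts a violent rearrest.
never_flag = [False] * 100

# A guesser who flags 40 people: all 11 actual cases plus 29 false alarms.
over_flag = [True] * 40 + [False] * 60

print(accuracy(never_flag, outcomes))  # 0.89
print(accuracy(over_flag, outcomes))   # 0.71
```

In this toy pool, every false alarm lowers the score, so a guesser who catches all 11 actual cases but flags too many people still ends up less accurate than one who flags nobody.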

In a third variation of the experiment, risk-assessment algorithms had the upper hand when given more detailed criminal profiles. This time, volunteers faced off against a risk-assessment tool dubbed LSI-R. That software could consider 10 more risk factors than COMPAS, including substance abuse, level of education and employment status. LSI-R and human volunteers rated criminals on a scale from very unlikely to very likely to reoffend.

When shown criminal profiles that included only a few risk factors, volunteers performed on par with LSI-R. But when shown more detailed criminal descriptions, LSI-R won out. The criminals with highest risk of getting arrested again, as ranked by people, included 57 percent of actual repeat offenders, whereas LSI-R’s list of most probable arrestees contained about 62 percent of actual reoffenders in the pool. In a similar task that involved predicting which criminals would not only get arrested, but re-incarcerated, humans’ highest-risk list contained 58 percent of actual reoffenders, compared with LSI-R’s 74 percent.
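One plausible reading of these percentages is the share of all actual reoffenders who land on a highest-risk list of fixed size. The Python sketch below illustrates that reading only. The study's exact scoring method is not described here, and the function name, the 1-to-5 rating scale and the data are hypothetical.

```python
def share_captured(risk_scores, reoffended, list_size):
    """Share of all actual reoffenders found among the list_size highest-rated people."""
    # Rank people from highest to lowest rating; ties keep their original order.
    ranked = sorted(range(len(risk_scores)),
                    key=lambda i: risk_scores[i], reverse=True)
    top = ranked[:list_size]
    total_reoffenders = sum(reoffended)
    return sum(reoffended[i] for i in top) / total_reoffenders

# Hypothetical ratings from 1 (very unlikely) to 5 (very likely to reoffend).
risk_scores = [5, 4, 4, 3, 2, 2, 1, 1]
reoffended = [True, True, False, True, False, True, False, False]

print(share_captured(risk_scores, reoffended, 4))  # 0.75
```

Here the four highest-rated people include three of the four actual reoffenders, so the list captures 75 percent of them.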

Computer scientist Hany Farid of the University of California, Berkeley, who worked on the 2018 study, is not surprised that algorithms eked out an advantage when volunteers didn’t get feedback and had more information to juggle. But just because algorithms outmatch untrained volunteers doesn’t mean their forecasts should automatically be trusted to make criminal justice decisions, he says.

Eighty percent accuracy might sound good, Farid says, but “you’ve got to ask yourself, if you’re wrong 20 percent of the time, are you willing to tolerate that?”

Since neither humans nor algorithms show amazing accuracy at predicting whether someone will commit a crime two years down the line, “should we be using [those forecasts] as a metric to determine whether somebody goes free?” Farid says. “My argument is no.”

Perhaps other questions, like how likely someone is to get a job or jump bail, should factor more heavily into criminal justice decisions, he suggests.