From Perry Mason to Law & Order, legal dramas have proved among the most predictably popular series on American television. In such shows, a defendant’s guilt or innocence typically comes to light only after expert witnesses testify before a jury, justifying—or challenging—theories about how a defendant could have perpetrated the crime.

Expert witnesses often play pivotal roles in movie courtrooms as well. Take the 1992 film My Cousin Vinny, where the fate of two young men wrongfully accused of murder hinges on dueling testimonies over tires and tire tracks by an FBI expert and the defense attorney’s auto-mechanic girlfriend.

Much of what people know—or think they know—about U.S. jurisprudence traces to such shows about criminal cases. What few nonlawyers realize is that these shows aren’t especially good models of cases involving torts, noncriminal suits in which plaintiffs claim harm from a company’s products or activities. In these cases, judges frequently bar from the courtroom at least some scientific experts and the data on which they might have testified (SN: 10/8/05, p. 232).

These judges are responding to a 1993 order by the Supreme Court to screen potential junk science from U.S. trials. That instruction appears in the court’s opinion for a tort case known as Daubert (for Daubert v. Merrell Dow Pharmaceuticals).

As judges have struggled to comply over the past 15 years, many have relied on guidance offered early on by Alex Kozinski, a judge with the U.S. 9th Circuit Court of Appeals. He weighed in after the Supreme Court handed Daubert back to be decided by his court.

While acknowledging that “we [judges] are largely untrained in science and certainly no match for any of the witnesses whose testimony we are reviewing,” Kozinski said that it’s the judge’s responsibility to determine whether proposed expert testimony “constitutes ‘good science.’”

Judge Kozinski, like the Supreme Court in Daubert, attempted to divide knowledge that’s available to the courts into two general categories—good and iffy science, explains Sheila Jasanoff, who heads science and technology studies at Harvard University’s John F. Kennedy School of Government in Cambridge, Mass. Potentially good research, she says, would include “pure, unbiased, prelitigation science”—studies performed using accredited methods, validated by peer review, and replicated to ensure reliability. In the opposing camp: “impure, party-driven, potentially biased, ‘litigation science,’” characterized by an absence of replication or peer review.

Kozinski advised fellow jurists that a “very significant” consideration when evaluating the admissibility of experts should be whether their testimony would reflect analyses or data developed in the course of independent research versus those produced “expressly” for use in a trial.

The latter has come to be known as litigation science.

“We’ve always tried to take on issues of how science is used in policy—and litigation science is a very important one,” says David Michaels, who directs the Project on Scientific Knowledge and Public Policy (SKAPP) at the George Washington University School of Public Health and Health Services in Washington, D.C. So, SKAPP recruited a group of scientists, philosophers, and legal scholars to independently examine strengths and weaknesses of litigation science.

At a 2006 SKAPP conference—and now in a series of new papers and mini-monographs—the speakers conclude that excluding litigation science from tort cases risks significantly hindering the pursuit of justice.

Before the conference, Michaels admits, “I was very skeptical” about the quality of litigation science, generally, and its overall value to the courts. But the presentations changed his mind. “They made me recognize there’s no way to justify a blanket prohibition against using those studies,” he says.

Bias—deliberate or inadvertent—can permeate even research conducted solely for the advancement of general knowledge, the speakers showed. Their collective take-home message: Courts should learn to cope with bias and scientific uncertainty rather than reflexively running from them.

Litigation science

Suppose people suspect chemicals in their drinking water have sickened them. Several potentially toxic compounds are present, but the alleged polluters note that data have linked only one to potential health risks, and then only at doses well above those contained in the water.

The claimants—or plaintiffs—sue, and their attorneys hire researchers to quantify the pollutants in the water, identify their likely source, estimate who would have been exposed and for how long, and track down studies pointing to how one or more of the chemicals could have triggered the plaintiffs’ symptoms.

This is essentially what happened in Woburn, Mass., when families hired an attorney to sue companies suspected of tainting local well water and triggering leukemia in the community’s children. That case served as the basis of Jonathan Harr’s courtroom drama, A Civil Action (Vintage Books, 1996).

Some Woburn data developed for that case were disallowed by the court, even pre-Daubert, for being too novel or speculative. In its Daubert decision, the Supreme Court advocated an even stronger gatekeeping role for judges, warning them to be especially skeptical of research that hadn’t been well validated or peer reviewed.

But much litigation science may have little chance for peer review “even if its methods are impeccable,” assert Leslie I. Boden and David Ozonoff of the Boston University School of Public Health. For one thing, they write, “the peer-review process may be too slow or cumbersome” to be completed before a judge rules on the admissibility of expert testimony.

Issues investigated for a tort may also be too narrow to interest the editors of a peer-reviewed publication, Boden and Ozonoff observe in a January 2008 review. In other cases, the innovative methods needed to answer questions raised in litigation “may fare badly in peer review, which rewards ‘inside the box’ thinking,” they argue in Environmental Health Perspectives (EHP).

For all its limitations, however, litigation science may be unavoidable, particularly if a tort case rests upon issues never investigated before, notes Jasanoff. Take the Dalkon Shield, she says: This intrauterine contraceptive device was linked to pelvic infections, infertility, and death in some of the women who used it during the early 1970s (SN: 4/5/75, p. 226).

“It turned out to have plastic lattice strings, which captured germs and led to infection,” explains Jasanoff. Although the shield had been tested for efficacy, the lack of federal oversight of medical devices at that time meant exhaustive safety tests hadn’t been required. And without that prompt, she asks, “Who in the world would have done research on the particular germ-capturing capabilities of a new material used in a new contraceptive?”

Especially post-Daubert, “It is in these one-off or stand-alone cases that the law’s technical fact-finding capacity is at its most vulnerable,” she concludes, because a plaintiff’s evidence may rely on the type of research that judges have been warned off. This “stacks the decks against the plaintiffs,” she notes, because if their evidence or experts are ruled inadmissible, it’s unlikely their case will survive.

Prelitigation science

The specter of lawsuits may even influence studies performed long before any litigation, such as a manufacturer’s safety tests, Boden observes. That’s why he and many other researchers consider such studies a facet of litigation science.

Indeed, he and Ozonoff contend, a manufacturer’s prelitigation studies are at least as vulnerable to bias as is the science triggered by a specific lawsuit. Many safety studies are financed by the companies that stand to benefit from selling the tested products. Although federal regulatory agencies have come to rely on such data, there’s also “a clear conflict of interest” in the firms’ findings, the Boston scientists contend.

Compared with drug-safety and -efficacy testing by researchers with no financial ties to the outcome, tests funded by drug manufacturers are more likely to report findings that favor the drug companies, according to studies cited in the EHP review. What’s more, Boden and Ozonoff report, the biomedical industry often delays the release of data from studies it funds, which could affect their availability to juries.

In the end, such prelawsuit defendant studies, which often face few hurdles to admissibility in tort cases, can “serve the same purpose and work in the same way”—supporting litigation—as plaintiff-funded, tort-triggered research does, Boden and Ozonoff maintain.

In fact, the real dichotomy should not be simply whether some research was triggered by litigation, but rather whether an investigation was guided by a search for truth or by advocacy, contends Susan Haack, a legal scholar at the University of Miami School of Law in Coral Gables. “And what you really need to worry about is the advocacy type,” Haack argued at the SKAPP conference.

Bias everywhere?

The courts’ general assumption that litigation science is inherently weaker or more biased than most research prompted by other interests reflects how little the judicial system understands science, says John C. Bailar, a biostatistician and scholar in residence at the National Academy of Sciences in Washington, D.C. At the SKAPP meeting and in a recent paper published in the European Journal of Oncology, he offers a laundry list of ways in which researchers from the ostensibly “good” nonlitigation camp can defraud, and have defrauded, colleagues and the public.

He gave examples of researchers designing studies with limited statistical power (that is, dividing subjects into such small groups that any adverse finding would lack statistical significance), a move by which they could “obtain a reliably negative result.” Or researchers might report they were hunting for cancers but end their search long before the latency period for development of the disease was up. In other instances, scientists included people with low exposures to a toxicant along with those who were highly exposed, diluting the apparent potency of the compound under study.
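Bailar’s point about underpowered designs, and the dilution tactic described alongside it, can be made concrete with a little arithmetic. The Python sketch below is illustrative only and is not drawn from Bailar’s paper. It uses the standard normal approximation for a two-sided test comparing disease rates in two groups. The rates (a hypothetical 5% background rate that exposure doubles to 10%), the group sizes, and the helper names `two_proportion_power` and `diluted_rate` are all invented for the example.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution: mean 0, sd 1


def two_proportion_power(p0: float, p1: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided z-test comparing disease rates
    p0 (unexposed) and p1 (exposed), with n subjects per group.

    Uses the usual normal approximation and ignores the negligible
    chance of rejecting in the wrong direction.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    p_bar = (p0 + p1) / 2
    se_null = (2 * p_bar * (1 - p_bar) / n) ** 0.5          # spread if there is no effect
    se_alt = ((p0 * (1 - p0) + p1 * (1 - p1)) / n) ** 0.5   # spread if the effect is real
    return STD_NORMAL.cdf((abs(p1 - p0) - z_crit * se_null) / se_alt)


def diluted_rate(p0: float, p1: float, low_fraction: float) -> float:
    """Disease rate in an 'exposed' group in which a fraction low_fraction
    of members actually had only background exposure (assumed risk p0)."""
    return low_fraction * p0 + (1 - low_fraction) * p1


if __name__ == "__main__":
    P0, P1 = 0.05, 0.10  # hypothetical: exposure doubles a 5% background rate
    for n in (50, 200, 1000):
        print(f"n={n:5d} per group: power = {two_proportion_power(P0, P1, n):.2f}")
    # Mixing low-exposure subjects into the exposed group shrinks the apparent effect.
    p_mixed = diluted_rate(P0, P1, low_fraction=0.5)
    print(f"diluted, n=200 per group: power = {two_proportion_power(P0, p_mixed, 200):.2f}")
```

Under these assumptions, power climbs from under 20% with 50 subjects per group to above 95% with 1,000 per group. A small study of a real hazard will therefore usually come up “negative.” Folding low-exposure subjects into the exposed group lowers power further at any fixed size, because it narrows the gap between the two rates.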

Researchers—or their critics—can “disguise biases” in other ways, say toxicologist Ronald L. Melnick and his colleagues at the National Institute of Environmental Health Sciences in Research Triangle Park, N.C. For instance, researchers may expose animals in a nontypical way, such as by inhaling a carcinogen rather than eating it, so that the kidneys and livers don’t receive the full toxic whammy. Yet kidney and liver cancers might have been the diseases showing up in exposed workers, Melnick and colleagues point out in a January paper in EHP.

Another possibility is that tissues might be examined so long after death that the natural, rapid breakdown of cells has already set in, which “can interfere with the detection and diagnosis of chemically induced lesions,” Melnick’s team explains.

A subtler bias—the “conspiracy of optimism”—inadvertently creeps into much science, adds sociologist William R. Freudenburg of the University of California, Santa Barbara.

If people like the findings of a particular study—perhaps one showing that the risk of climate change has been greatly overstated—they tend to cite it frequently and accept its conclusions, Freudenburg explains. However, where results challenge what society hopes to be true—such as a study finding that global warming will likely be 10 times worse than previously anticipated—critics tend to pounce on the data, scrutinizing their every detail.

The result, he says, is that unwelcome findings tend to be excessively challenged while welcomed ones—and any flaws in their execution—may go uncontested.

In a gray world

A third grader sustains three broken ribs on his way home from school. Did those injuries come from being hit on Oct. 12 by a pickup truck licensed to a male farm worker?

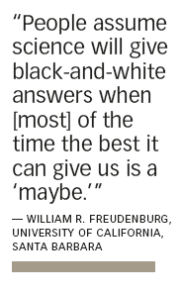

That’s a fairly black-and-white question, and “courts are good at dealing with a black-and-white world,” Freudenburg says. The rub: “We live in a world where uncertainty is a pervasive fact of life.”

Civil courts “aren’t well adapted to making decisions on uncertain sets of events,” he says—such as whether a rubber worker’s cancer traces to butadiene exposure on the job.

Unfortunately, “most people assume science will give black-and-white answers,” Freudenburg says, “when some 90-plus percent of the time the best it can give us is a ‘maybe.’” He says scientists must help the courts figure out what to do when maybe is the best answer science will ever be able to get.

In fact, this is a problem that even science and engineering struggle with, he notes. It boils down to statistics: How certain can we be that a fact is true? Scientists typically resist hyping the potential for risk—such as saying that workplace butadiene caused a worker’s cancer, Freudenburg says. Yet those researchers tend to forget that the better they become at avoiding a false positive, the more they risk reporting a false negative.

However, in litigation science, the price of a false negative may be catastrophic, Freudenburg notes: You might fail to compensate a family for a huge financial or physical injury caused by a workplace chemical.

On the other hand, a false positive—attributing injury to a workplace chemical when, in fact, environmental exposures around the home or some genetic predisposition to disease were responsible—can also be catastrophic. Such an error can result in the courts holding a company responsible for damages so costly that they bankrupt the business and put its employees out of work.
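The tradeoff Freudenburg describes is a standard property of significance testing. For a study of fixed size, demanding stronger evidence before declaring an effect (a lower false-positive rate, alpha) raises the chance of missing a real one (the false-negative rate, beta). The Python sketch below assumes a simple one-sided z-test and a hypothetical true effect of 2.5 standard errors. The function name `false_negative_rate` and the numbers are illustrative and are not drawn from any case discussed here.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution: mean 0, sd 1


def false_negative_rate(alpha: float, effect: float) -> float:
    """Beta (miss rate) of a one-sided z-test at significance level alpha,
    assuming the test statistic is distributed N(effect, 1) when the
    effect is real."""
    critical = STD_NORMAL.inv_cdf(1 - alpha)  # stricter alpha -> higher bar to clear
    return STD_NORMAL.cdf(critical - effect)  # chance a real effect still falls short


if __name__ == "__main__":
    EFFECT = 2.5  # hypothetical: a real but modest effect, in standard-error units
    for alpha in (0.10, 0.05, 0.01, 0.001):
        beta = false_negative_rate(alpha, EFFECT)
        print(f"false-positive rate {alpha:<6} -> false-negative rate {beta:.2f}")
```

Each step down in alpha raises beta. Which error is more tolerable depends on the consequences Freudenburg describes, which the statistics alone cannot settle.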

Improvements

Against this backdrop, Michaels says, it’s clear that courts “shouldn’t set rules, a priori, to say which studies are good or bad.” Policy makers, regulators, judges, and juries really need to consider all pertinent and available research, the SKAPP deliberations concluded.

Experts should be up front about potential conflicts of interest or biases in their backgrounds or in the material on which they will testify, Michaels says. Courts could then let the cross-examination process at trial ferret out weaknesses, flaws, or nuances in data and their interpretation. As a process, cross-examination offers a powerful and generally effective means to challenge data and their interpretation, he says. “Once a judge uses a Daubert challenge to throw out evidentiary studies—on either side—that process becomes flawed,” he argues.

Researchers whose work is too new, too narrow in focus, or too limited in application to interest refereed journals might enlist a newer type of entity to conduct peer review, says James W. Conrad, Jr., a Washington, D.C., attorney who participated in SKAPP’s proceedings. Various contract companies now offer quick-turnaround, peer-review services for data, reports, and research.

A bigger issue, Jasanoff says, may be a court’s rush to judgment, especially in the face of substantial uncertainty. She notes that in a few instances, progressive judges have argued that plaintiffs shouldn’t be penalized because nobody thought to study risks from which they’re now claiming injury. The jurists’ solution: order the defendants, such as a chemical company, to pay all or part of the cost of studies to evaluate those risks in the plaintiffs or in other people who encounter the same putative threat, such as exposure to a pollutant.

In the pursuit of justice, Jasanoff says, “perhaps we ought to also hold the statute of limitations in abeyance” so that a plaintiff’s right to sue doesn’t expire before the data are in.

“Litigation science can be a legitimate pathway to generating knowledge,” she concludes, and no longer deserves to be treated “as the ugly cousin” of conventional science.