Languages use different parts of brain

Different areas are active depending on grammar

The part of the brain that’s used to decode a sentence depends on the grammatical structure of the language it’s communicated in, a new study suggests.

Brain images showed that subtly different neural regions were activated when speakers of American Sign Language saw sentences that used two different kinds of grammar. The study, published online this week in Proceedings of the National Academy of Sciences, suggests neural structures that evolved for other cognitive tasks, like memory and analysis, may help humans flexibly use a variety of languages.

“We’re using and adapting the machinery we already have in our brains,” says study coauthor Aaron Newman of Dalhousie University in Halifax, Canada. “Obviously we’re doing something different [from other animals], because we’re able to learn language. But it’s not because some little black box evolved specially in our brain that does only language, and nothing else.”

Most spoken languages express relationships between the subject and object of a sentence — the “who did what to whom,” Newman says — in one of two ways. Some languages, like English, encode information in word order. “John gave flowers to Mary” means something different than “Mary gave flowers to John.” And “John flowers Mary to gave” doesn’t mean anything at all.

Other languages, like German or Russian, use “tags,” such as helping words or suffixes, that make words’ roles in the sentence clear. In German, for example, different forms of “the” carry information about who does what to whom. As long as words stay with their designated “the,” they can be moved around German sentences much more flexibly than in English.

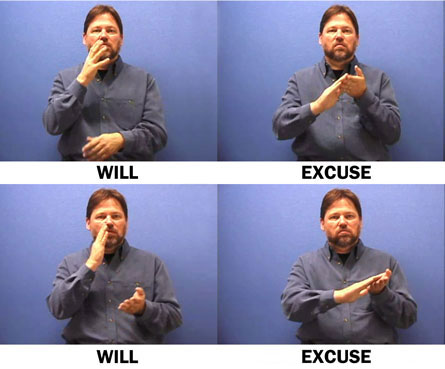

Like English, American Sign Language can convey meaning via the order in which signs appear. But altering the sign by, for instance, moving hands through space or signing on one side of the body, can add information like how often an action happens (“John gives flowers to Mary every day”) or how many objects there are (“John gave a dozen flowers to Mary”) without the need for extra words.

“In most spoken languages, if word order is a cue for who’s doing what to whom, it’s mandatory,” Newman says. But in ASL, which uses both word order and tags to encode grammar, “the tags are actually optional.”

The researchers showed videos to 14 deaf signers who had learned ASL from birth. In the videos, coauthor Ted Supalla, a professor of brain and cognitive sciences at the University of Rochester in New York and a native ASL signer, signed two versions of a set of sentences. One version used only word order to convey grammatical information, and the other added signed tags.

The sentences, which included “John’s grandmother feeds the monkey every morning” and “The prison warden says all juveniles will be pardoned tomorrow,” were carefully constructed to look natural to fluent ASL signers and to mean the same thing regardless of which grammatical structure they used.

Functional MRI scans taken while participants watched the videos showed that word-order sentences and word-tag sentences activated overlapping but slightly different brain regions.

The regions that lit up only for word-order sentences are known to be involved with short-term memory. The regions activated by word tags are involved in procedural memory, the kind of memory that controls automatic tasks like riding a bicycle.

This could mean that listeners need to hold words in their short-term memory to understand word-order sentences, while processing word-tag sentences is more automatic, Newman says.

“It’s very elegant, probably nicer results than we could have hoped for,” he says.

“The story they’re telling makes sense to me,” says cognitive neuroscientist Karen Emmorey of San Diego State University, who also studies how the brain processes sign language. But, she adds, “it’ll be important to also look at spoken languages that differ in this property to see if these things they’re finding hold up.”