A few simple tricks make fake news stories stick in the brain

People are more likely to buy in if the misinformation is surprising, emotional or on repeat

Purveyors of deceitful messaging use a few key tricks that take advantage of the brain’s desire to believe.

E. Otwell

Bad information isn’t new. Propagandists and scam artists have been selling their brand of proverbial snake oil for ages, all to bend people’s thinking to their goals. What’s different today is that the digital world flings information faster and farther than ever before.

Our brains can’t always keep up.

That’s because we often rely on quick estimates to figure out whether something is true. These shortcuts, called heuristics, are often based on very simple patterns (SN: 9/20/14, p. 24). For instance, most information we come across in our daily lives is true. So when forced to guess, we often err on the side of believing.

Other shortcuts exist that encourage information — true or false — to find its way into our minds, research on human psychology shows. We take notice of information that is new, that fires up our emotions, that supports what we already believe and that we hear over and over.

Most of the time, these shortcuts make us “super-efficient,” quickly leading us to the right answer, says cognitive psychologist Elizabeth Marsh of Duke University. But in fast-moving digital landscapes, those shortcuts are “going to get us in trouble,” she says.

How the various online platforms feed us information changes the game, as well. “We are not only contending with our own cognitive crutches as humans,” says Jevin West, a computational social scientist at the University of Washington in Seattle who cowrote the 2020 book Calling Bullshit: The Art of Skepticism in a Data-Driven World. “We’re also contending with a platform, and with algorithms and bots that know how to pierce into our cognitive frailties.” The goal, he says, is “to glue our eyeballs to those platforms.”

Here, scientists who study misinformation pull back the curtain on some false social media posts to show how bad information can creep into our minds.

Sharing what’s new

People take special notice of fresh information. “Novelty has an advantage in the information economy in terms of spreading farther, faster, deeper,” says information scientist Sinan Aral of MIT, author of the 2020 book The Hype Machine: How Social Media Disrupts Our Elections, Our Economy, and Our Health — And How We Must Adapt. Fresh intel can inform our beliefs, behaviors and predictions in powerful ways. In a study of Twitter behavior that spanned 10 years, Aral and his colleagues found more signs of surprise (an indicator that information was new) in people’s responses to false news stories than to true ones.

Sharing new tidbits can also provide a status boost, as any internet influencer knows. “We gain in status when we share novel information,” Aral says. “It makes us look like we’re in the know.”

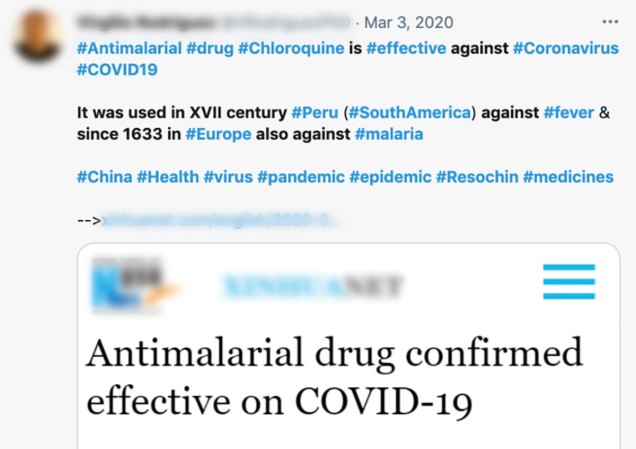

New information becomes even more alluring in times of uncertainty, West says. That played out early in the COVID-19 pandemic, when researchers and physicians were scrambling to find life-saving treatments. Unproven methods — vitamins, garlic and hydroxychloroquine, among others — got lots of attention. “There were not a lot of answers on how to treat COVID early in the pandemic,” he says.

Misinformation: Natural “cure”

Expert’s review

This tweet provides unequivocal, confident certainty with “cure” and it appeals to people’s beliefs [in natural remedies] with “pawpaw tree” and “garlic.” The author understands well these tricks. The consumer will want to believe this, and … be in the know by seeing it before their friends receive it as a share.

— Jevin West, University of Washington

Editor’s note: This box and those below show false statements found on social media or in the news. These examples, all misleading and untrue, show how misinformation can trick us.

Supports prior beliefs

Accepting information that’s consistent with what we already know to be true can feel like a safe bet. We tend to give that sort of message less scrutiny. “It’s more comfortable to find pieces of information that fit our narrative,” West says. “And when we are confronted with information that breaks that narrative, that’s incredibly uncomfortable.”

But this reliance on our stored knowledge can lead us astray. People are wrong about a lot of facts, easily confuse facts and opinions and claim to know facts that are impossible, as Marsh and her colleague Nadia Brashier of Harvard University wrote in 2020 in Annual Review of Psychology. And with so much information streaming in, it’s easy to find the material that fits with what you think you know. “To the extent that I want to believe X, I can go out there and find evidence for X,” Marsh says. “If I were an anti-vaxxer, it wouldn’t matter how many times you told me that vaccines are good, because it would be against my world identity.”

Baseball legend and civil rights advocate Hank Aaron died on January 22, 2021, at age 86. Some people soon noted that he’d received a COVID-19 vaccine 17 days earlier. Anti-vaccine groups used his death to blame vaccines, with no evidence that the vaccine was involved. “It’s so opportunistic,” says global health researcher Tim Mackey of the University of California, San Diego.

Misinformation: Sports legend

Expert’s review

This one is very emotive. Most everyone knows Hank Aaron. [The post is] playing on [a] reaction of shock over his death (novel info) and then introducing misinformation that leads people to believe he died from the COVID-19 vaccine. The use of the [CBS Sports] link is compelling as it’s from a trusted news source, even though the link says nothing about Aaron dying from the vaccine. It almost makes him an unknowing martyr for the anti-vax movement. Aaron said he wanted to encourage vaccine uptake among African American people. His untimely death is instead used strategically to target this population.

— Tim Mackey, UC San Diego

Tugs on emotions

Playing on emotions is the “dirtiest, easiest trick,” West says.

Outrage, fear and disgust can capture a reader’s attention. That’s what turned up in Aral’s analyses of over 126,000 instances of rumors spreading through tweets, reported in 2018 in Science. False rumors were more likely to inspire disgust than true information, the researchers found.

“False news is shocking, surprising, blood-boiling, anger-inducing,” Aral says. “That shock and awe combines with novelty to really get false news spreading at a much faster rate than true news.” The presence of emotional language increases the spread of social media messages by about 20 percent for each emotion-triggering word, researchers at New York University reported in Proceedings of the National Academy of Sciences in 2017.

Along with message content, readers’ emotions matter, too. People who rely on emotions to assess a news story are more likely to be duped by fake news, misinformation scientist Cameron Martel of MIT and colleagues reported in 2020 in Cognitive Research: Principles and Implications.

Misinformation: Emotions

Expert’s review

[In studies], this headline was particularly evocative of both anger and anxiety, even relative to other false news headlines. I would guess that this has to do with the fact that the headline is tapping into both a common myth about (false) vaccine dangers, and focuses on potential harm to a vulnerable population (children).

— Cameron Martel, MIT

On repeat

Even the most outlandish idea begins to sound less wild the 10th time we hear it. That’s been the case since long before the internet existed. In a 1945 study, people were more inclined to believe rumors about wartime rationing that they had heard before than ones that were unfamiliar.

Many recent studies have found similar effects for repetition, a phenomenon sometimes called the “illusory truth effect.” Even when people know a statement is false, hearing it again and again gives it more weight, Marsh says. “Keep it simple. Say it over and over.”

There’s lots of repetition to be found on Twitter, where hashtags can draw many people into a conversation, Mackey says. On July 27, 2020, then-President Donald Trump tweeted a link to a video of a doctor making false claims that hydroxychloroquine can cure COVID-19. Similar tweets exploded soon after, jumping from an average of about 29,000 daily tweets to over a million just a day later, Mackey and his colleagues reported in the Lancet Digital Health in February 2021. “It just takes one piece of misinformation for people to run with,” Mackey says.

Misinformation: Repetition

Expert’s review

[The tweet about Gates and Fauci] was a pretty common theme. Repetition for this one is all about building an online campaign. Essentially propagation serves two roles: (1) getting [the fake message] out there that people who are against use of hydroxychloroquine are involved in a conspiracy to keep the treatment out of people’s hands; and (2) a campaign [to] force these public figures to actually get tested.

— Tim Mackey, UC San Diego
