Two thousand years ago, Roman Emperor Nero peered through an emerald monocle to better see his gladiators in combat. Twelve hundred or so years later, eyeglasses started to adorn faces. Up to the present, lenses have primarily served one purpose: to render the world more visible–to people, that is. Now, there are inanimate observers that can also benefit from lenses. More and more, computers are being tasked with making sense of the visual world in ways that people can’t.

With a new generation of optics, engineers are recasting visual scenes for computers’ consumption. To the human eye, these pictures are visual gibberish, hardly worth a single word, let alone a thousand. To computers, such data can be worth more words than you’d care to count.

Central to it all are new styles of lenses. Instead of the familiar concave and convex disks, optical engineers are making oddly shaped, radically different lenses tailored to the strengths of computers.

“Once you break away from thinking that the optics have to form something [people] recognize as an image, there are many things that you can do,” says Joseph N. Mait of the Army Research Laboratory in Adelphi, Md., and the National Defense University in Washington, D.C.

“There’s no reason to go ahead and form an image,” agrees Eustace L. Dereniak of the University of Arizona in Tucson. Even in nature, there are beetles that navigate by detecting certain colors or the polarization of light in space without making an image out of the data. People have been slow to explore such alternatives, however, because we’ve modeled optical instruments such as cameras after our own, image-making eyeballs, says Dereniak.

By weaning themselves away from conventional optics, some researchers are endowing microscopes and other optical instruments with extraordinarily crisp focus over a wide range of distances, a characteristic known as extended depth of field. The lenses under development for these purposes point to many other promising prospects, optics developers say, including cameras no thicker than business cards and improved iris-scanning devices for detecting terrorists in airports.

Other optical engineers are developing novel lenses to help computers sense motion and the physical properties of remote objects.

Going beyond optical phenomena, engineers anticipate making similar lenses that can process other portions of the electromagnetic spectrum, says David J. Brady of Duke University in Durham, N.C. “It’s a general change in the way you think about sensing,” he says. Among the technologies that may be strengthened are radar, computerized axial tomography (CAT) X-ray scanners, and magnetic resonance imaging (MRI) systems.

Getting the point

Using computers to manipulate images is old hat. Anybody with a copy of Photoshop or another image-processing program can do it routinely on a desktop computer. What’s new, however, is the strategy of modifying images from the outset so that they are better suited to the computer mind.

When Edward R. Dowski Jr. arrived at the University of Colorado in Boulder as a Ph.D. candidate in 1990, he was already thinking along those lines. A radar engineer, Dowski had just come from a stint at the Japanese photography firm Konica.

For his dissertation topic, he decided to see what it would take to devise a new type of lens that would make autofocusing work better.

Conventional cameras, microscopes, and other optical instruments use sets of convex and concave lenses to focus light onto flat pieces of film or electronic detectors. An autofocus camera typically shifts the positions of some of those optical elements forward and backward until a sensor that monitors contrast differences in the field of view detects sufficient detail.
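The contrast-hunting routine described above can be sketched in a few lines of Python. This is a minimal illustration, not any camera maker’s firmware: the sharpness measure (summed squared differences between neighboring pixels), the functions `autofocus` and `simulated_capture`, and the simulated camera’s blur model are all assumptions made for the example.

```python
import numpy as np


def contrast_score(image: np.ndarray) -> float:
    """Sharpness measure: summed squared differences between neighboring pixels."""
    dx = np.diff(image, axis=1)
    dy = np.diff(image, axis=0)
    return float(np.sum(dx**2) + np.sum(dy**2))


def autofocus(capture, positions):
    """Try each lens position and return the one whose image has the most contrast.

    `capture(pos)` is a hypothetical callback that returns a detector image
    taken with the movable lens at position `pos`.
    """
    best_pos, best_score = None, -np.inf
    for pos in positions:
        score = contrast_score(capture(pos))
        if score > best_score:
            best_pos, best_score = pos, score
    return best_pos


# Simulated camera (an assumption for this demo): a random scene blurred by a
# box filter whose width grows with distance from the in-focus position.
rng = np.random.default_rng(0)
scene = rng.random((32, 32))
TRUE_FOCUS = 3


def simulated_capture(pos):
    width = 1 + 2 * abs(pos - TRUE_FOCUS)
    kernel = np.ones(width) / width

    def blur(v):
        return np.convolve(v, kernel, mode="same")

    rows_blurred = np.apply_along_axis(blur, 1, scene)
    return np.apply_along_axis(blur, 0, rows_blurred)


print(autofocus(simulated_capture, range(-5, 11)))  # 3
```

A real camera would usually climb toward the contrast peak rather than test every position, but the principle is the same: the lens moves, and the computer only judges the result.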

Dowski’s idea was to do away with that little dance by inserting an additional lens between the camera’s built-in set of lenses and the detector. It would generate a computer-readable pattern of light that indicated how far out of focus the camera’s subject was. The in-camera computer could then calculate how far to move the motor-driven lens.

The idea worked, and Dowski earned his Ph.D. But camera companies showed no interest. Dowski’s graduate advisor, W. Thomas Cathey, then realized that there might be more promise in doing just the opposite of what Dowski had done. He suggested this surprising turn of thought to Dowski, and they decided to give it a whirl.

Cathey and Dowski began by imagining any scene observed through a lens as a mosaic of tiny points of illumination. Ironically, to eliminate autofocusing systems, they devised a defocusing lens. Rather than having to rely on a movable lens to focus light, they came up with a saddle-shaped lens that stays put. It presents what appears to be a blurry image to a computer, which then runs an algorithm that can reconstruct the image point by point. The result is an image in sharp focus in both the foreground and background–that is, with great depth of field.
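Why a deliberately blurring element can help is easiest to see in the point-spread function (PSF), the pattern that a single point of light makes on the detector. The sketch below assumes a one-dimensional pupil with a cubic phase profile, a form widely used in the wavefront-coding literature, rather than the exact saddle-shaped surface described here; the functions `psf` and `similarity` and the parameter values `alpha` (cubic strength) and `psi` (amount of defocus) are illustrative choices. It compares each PSF in focus and out of focus, with and without the cubic term.

```python
import numpy as np


def psf(alpha, psi, n_pupil=256, n_fft=4096):
    """1D point-spread function of a pupil with cubic phase alpha*x**3 and
    defocus phase psi*x**2 (both in radians at the pupil edge)."""
    x = np.linspace(-1.0, 1.0, n_pupil)  # normalized pupil coordinate
    pupil = np.exp(1j * (alpha * x**3 + psi * x**2))
    # The image-plane amplitude is the Fourier transform of the pupil;
    # zero-padding to n_fft samples gives a finely sampled PSF.
    field = np.fft.fftshift(np.fft.fft(pupil, n_fft))
    intensity = np.abs(field) ** 2
    return intensity / intensity.sum()


def similarity(p, q):
    """Cosine similarity of two PSFs: 1.0 means identical shapes."""
    return float(np.dot(p, q) / np.sqrt(np.dot(p, p) * np.dot(q, q)))


for alpha in (0.0, 60.0):  # 0 = ordinary lens, 60 = strong cubic term
    s = similarity(psf(alpha, psi=0.0), psf(alpha, psi=10.0))
    print(f"alpha = {alpha:4.0f}: in-focus vs. defocused PSF similarity = {s:.2f}")
```

With no cubic term, defocus should turn a tight spot into a broad smear and the similarity drops sharply; with the cubic term, the PSF is blurry from the start but keeps nearly the same shape as focus changes. Because that shape barely depends on distance, one restoration filter can sharpen near and far points alike.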

Cathey concedes that the extended depth of field, which he says is at least 10 times greater than that of conventional lenses, comes with tradeoffs. As the computer removes the overall blurring introduced by the ray-altering lens, it introduces a smattering of random errors, or noise, which may show up as a subtle roughening of smooth surfaces. However, the improvement in overall focus far outweighs the effect of those errors, Cathey says. Moreover, additional computer processing can remove that noise, Dowski adds.
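The noise tradeoff Cathey describes shows up in even the simplest deblurring experiment. This sketch assumes a known Gaussian blur, circular (FFT-based) convolution, and a small amount of Gaussian sensor noise; it uses a Wiener-style filter, a standard deconvolution method that is not necessarily the one CDM Optics uses. The regularization constant `nsr` is a hypothetical tuning parameter: setting it to zero gives a plain inverse filter, which divides by tiny blur coefficients and inflates the noise, while a small positive value trades a little sharpness for far less noise.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 256
truth = np.zeros(n)
truth[60:90] = 1.0  # a bright bar
truth[150] = 2.0    # a single bright point

# Assumed blur: a Gaussian point-spread function (width 1.5 samples), stored
# with its peak at index 0 so that FFT multiplication is circular convolution.
idx = np.arange(n)
dist = np.minimum(idx, n - idx)
kernel = np.exp(-0.5 * (dist / 1.5) ** 2)
kernel /= kernel.sum()
H = np.fft.fft(kernel)  # blur transfer function

blurred = np.real(np.fft.ifft(np.fft.fft(truth) * H))
measured = blurred + rng.normal(scale=0.01, size=n)  # add sensor noise


def deconvolve(image, H, nsr):
    """Wiener-style deconvolution; nsr = 0 reduces to the plain inverse filter."""
    G = np.conj(H) / (np.abs(H) ** 2 + nsr)
    return np.real(np.fft.ifft(np.fft.fft(image) * G))


def rms(e):
    return float(np.sqrt(np.mean(e**2)))


inverse = deconvolve(measured, H, nsr=0.0)
wiener = deconvolve(measured, H, nsr=1e-3)
print(f"inverse filter error: {rms(inverse - truth):.3g}")
print(f"Wiener filter error:  {rms(wiener - truth):.3g}")
```

The inverse filter should restore the bar and the point only to bury them in amplified noise, whereas the regularized filter leaves a modest residue, the kind of subtle roughening described above.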

In 1996, Cathey and Dowski founded a company, CDM Optics, to develop and commercialize products based on the new optical technique, which they call wavefront coding. Lately, their patience has been paying off.

Last year, for example, optics industry giant Carl Zeiss of Oberkochen, Germany, announced the first commercial products: new modules for microscopes that incorporate the CDM-developed technology. Olympus Optical of Tokyo is also licensing the Boulder company’s technology for use in extended-depth-of-field endoscopes, which are camera-equipped catheters that doctors use to look inside a patient’s body.

Similar extended-depth-of-field improvements will also benefit machine-vision systems, such as those for reading barcodes, sorting packages, and assembling and inspecting electronic circuits, Dowski predicts.

Another research team, led by Robert Plemmons of Wake Forest University in Winston-Salem, N.C., is collaborating with the National Security Agency and the Immigration and Naturalization Service on wavefront-coded, extended-depth-of-field cameras. The goal here is to develop systems that can successfully capture iris images at a greater range of distances than current identification technology can.

Because computers can also correct for common lens aberrations as they deblur, the wavefront-coding approach offers a way to slash the number of aberration-correcting optical elements found in typical cameras and other devices. “We think there is great potential to revolutionize the way you design lenses,” says Cathey.

Among the more exotic projects under development by CDM Optics’ engineers are designs for lightweight space telescopes with relatively forgiving construction tolerances. The goal of another, highly speculative project is to restore visual acuity in elderly people whose ocular lenses no longer focus adequately. The idea, says Dowski, is to use wavefront-coding optics built into contact lenses, or perhaps eventually carved into corneal tissue by a laser, that render everyday scenes not as recognizable images but as patterns that the brain could learn to decipher.

Different strokes

The saddlelike lens and other wavefront-coding lenses that Dowski and his colleagues have come up with represent only a few of the countless possible forms for such computer-oriented optical elements.

In Japan, for instance, Jun Tanida of Osaka University and his colleagues have been experimenting with arrays of tiny conventional lenses, known as lenslets. Each lenslet focuses a small, low-resolution image onto a portion of an electronic detector behind the array. By taking advantage of all of the lenslets’ different perspectives, a computer can then compute a single image of the scene at roughly twice the resolution that would be possible if one conventional lens had been used.
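Under idealized assumptions, the payoff from combining several lenslets’ views is easy to demonstrate. This sketch assumes each lenslet samples the scene on a coarse grid shifted by exactly one fine pixel relative to its neighbor, with no blur or noise; real systems must estimate the shifts and undo optical blur, typically with iterative reconstruction. The functions `capture_lenslets` and `interleave` are hypothetical names for this illustration, not the Osaka group’s software.

```python
import numpy as np


def capture_lenslets(scene, factor):
    """Simulate `factor` lenslets, each keeping every `factor`-th scene sample,
    with lenslet k's grid shifted by k fine pixels (idealized: no blur)."""
    return [scene[k::factor] for k in range(factor)]


def interleave(sub_images, factor):
    """Put every low-resolution sample back at its known sub-pixel position."""
    out = np.empty(sum(len(s) for s in sub_images))
    for k, s in enumerate(sub_images):
        out[k::factor] = s
    return out


# Test scene: a smooth wave plus a pixel-to-pixel alternation (the finest detail).
scene = np.sin(np.linspace(0, 6 * np.pi, 64)) + (np.arange(64) % 2)
subs = capture_lenslets(scene, factor=2)
recovered = interleave(subs, factor=2)
print(len(subs[0]), len(recovered))   # 32 64
print(np.allclose(recovered, scene))  # True
```

Neither 32-sample view alone can represent the pixel-to-pixel alternation, but placing the samples at their known offsets recovers all 64.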

A particular advantage of the Japanese approach is that the thin lenslet array can focus light onto a detector less than the thickness of a sheet of paper away. Focal lengths of ordinary cameras are typically a few centimeters. In collaboration with Minolta, Tanida and his colleagues have exploited this radical abbreviation of focal length to develop a prototype of a credit-card-thin camera.

Some other extremely thin cameras use tricks such as bouncing light off internal mirrors to attain the required focal length in a small package. Instead, the Japanese team devised “an insightful combination of optics and electronics to reduce the focal length of the system” without a loss in resolution, says Mait.

Mait dubs this emerging field of optics “integrated computational imaging.” A possible technological outcome of the field is wraparound cameras integrated into the skins of robotic airplanes or other military vehicles, he suggests.

Also experimenting with multiple perspectives culled by one sensor, Duke’s Brady and his colleagues have invented a matchbox-size plastic block riddled with precisely angled holes so that photodetectors behind the block receive light from a scene simultaneously from different viewpoints.

The result is a device that can reconstruct the motion of, say, a tank without capturing or analyzing any images of the vehicle. Currently, most motion-tracking devices capture images of a two-dimensional scene and then analyze pixel patterns in search of changes indicating motion. It’s a slow, computationally intensive, and error-prone process, Brady notes.

With the new hole-riddled device, light from a tank or other object reaches detectors as a distinctive optical code from which a computer can quickly reconstruct motion with minimal computations. Brady says that he got the idea for the device as he drove through the forests around Durham and observed the shifts in position of lighted houses deep in the woods with respect to the foreground trees.
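The parallax principle behind such a device can be illustrated with just two fixed lines of sight. In this hypothetical sketch, an object crossing two detectors’ viewing directions produces a brightness pulse at each, and the delay between the pulses, found by cross-correlation, gives the object’s angular speed without any image being formed. The detector geometry, sample rate, pulse shape, and the function `estimate_lag` are assumptions for illustration, not the Duke group’s design or algorithm.

```python
import numpy as np


def estimate_lag(a, b):
    """Delay (in samples) of signal b relative to signal a, taken from the
    peak of their cross-correlation. Assumes a and b have the same length."""
    a = a - a.mean()
    b = b - b.mean()
    corr = np.correlate(b, a, mode="full")
    return int(np.argmax(corr)) - (len(a) - 1)


# Hypothetical geometry: two detectors view along lines of sight 2 degrees
# apart and are read out 1,000 times per second.
SEPARATION_RAD = np.deg2rad(2.0)
SAMPLE_RATE_HZ = 1000.0

t = np.arange(200)


def pulse(center):
    """Brightness blip as a bright object crosses one line of sight."""
    return np.exp(-0.5 * ((t - center) / 3.0) ** 2)


a = pulse(50.0)  # object crosses detector A's line of sight at sample 50
b = pulse(80.0)  # ...and detector B's at sample 80
lag = estimate_lag(a, b)
angular_speed = SEPARATION_RAD / (lag / SAMPLE_RATE_HZ)  # radians per second
print(lag, f"{np.rad2deg(angular_speed):.1f} deg/s")  # 30 66.7 deg/s
```

Only two short signals are compared, which hints at why such sensors can be cheap and fast compared with frame-by-frame image analysis.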

The U.S. military, which funds some of Brady’s work through the Defense Advanced Research Projects Agency, is seeking cheap, fast motion sensors for surveillance.

“What the military wants to see are mobile threats,” such as mobile missile launchers, says Mait.

Such sensors might also bestow a new kind of spatial awareness on robots and computers that would help them to interact with people more naturally, says Brady, who plans to describe the devices at an optics and lasers meeting in Baltimore in June.

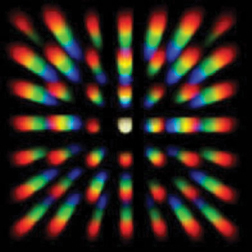

Developing yet another type of computer eyewear, Dereniak, his University of Arizona colleague Michael R. Descour, and their coworkers have created an optical element for simultaneously recording light spectra across an entire scene. Such spectral information may reveal camouflaged weapons in a satellite image or, with the help of fluorescent labels that attach to specific cellular structures, biological behavior under a microscope.

The spectra-capturing lens yields a pattern in which a 30-color spectrum associated with each point in a scene is mapped onto an electronic detector. It’s “not an image at that [stage], just a scrambled mess,” Dereniak says.

However, a computer applying a type of processing that’s standard in radiology procedures such as CAT scans can transform this seeming visual cacophony into an image of the scene at any specific color, he adds. Such multiwavelength data are one of the primary means by which scientists analyze the physical and chemical properties of subjects ranging from atoms to landscapes.
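The reconstruction step can be pictured as solving a large set of linear equations: each detector reading is a known weighted sum of the unknown brightness values at every point and color. The sketch below uses Kaczmarz’s method, also called the algebraic reconstruction technique (ART), one of the iterative methods used in CT; the Arizona team’s actual algorithm may differ. The tiny data cube, the random system matrix `A`, and the function `kaczmarz` are hypothetical stand-ins for a calibrated instrument.

```python
import numpy as np


def kaczmarz(A, b, sweeps=500):
    """Algebraic reconstruction technique (Kaczmarz's method): repeatedly
    project the estimate onto the solution set of one measurement at a time."""
    x = np.zeros(A.shape[1])
    row_norms_sq = np.sum(A * A, axis=1)
    for _ in range(sweeps):
        for i in range(A.shape[0]):
            residual = b[i] - A[i] @ x
            x += (residual / row_norms_sq[i]) * A[i]
    return x


rng = np.random.default_rng(2)
n_points, n_colors = 4, 5                # tiny hypothetical data cube
cube = rng.random((n_points, n_colors))  # brightness of each point at each color
A = rng.normal(size=(60, n_points * n_colors))  # stand-in system matrix
readings = A @ cube.ravel()              # the "scrambled mess" on the detector

estimate = kaczmarz(A, readings).reshape(n_points, n_colors)
print(np.allclose(estimate, cube, atol=1e-6))  # True
```

Once the cube is recovered, the image of the scene at any one color is simply one column of `estimate`.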

Having spent several years already devising their visible-light system, the Arizona team is now developing versions that work at infrared wavelengths for military surveillance and at ultraviolet frequencies for studying fluorescently tagged biological samples.

All together now

Earlier attempts to use lenses to go beyond mere imaging weren’t fruitful. In the 1960s, for instance, the military tried to develop so-called optical correlators that could detect threats by optically comparing reconnaissance images with patterns of enemy vehicles stored holographically.

“When digital processing was in its infancy, the most elegant way to process the information was optically,” notes Mait. Yet the approach failed because “optics doesn’t provide the kind of accuracy that is needed for detecting threats in complex and cluttered military scenes.”

Technology has changed dramatically since then. Most obviously, the data-crunching capabilities of electronic devices have soared. But there have also been major strides in mathematical analysis tools, as well as advances in optics fabrication that permit more complex optical elements to be made, such as wavefront-coding lenses and Brady’s blocks pierced by light pipes.

Now, says Mait, all the tools are in place to unlock a world of possibilities that have long been hidden from the human eye.

****************

If you have a comment on this article that you would like considered for publication in Science News, send it to editors@sciencenews.org. Please include your name and location.
